cURL doesn't work. Hone your skills with cURL

Those who use cURL found, after updating to PHP 5.6.1 or 5.5.17, that the cURL module had stopped working. The problem has not gone away since: it persists even in the latest version, PHP 5.6.4.

How do you know if cURL is working for you?

Create a PHP file and copy the following into it:
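A minimal file for this test, assuming the check is a plain call to curl_version() (which is what the output shown below corresponds to), could look like this:

```php
<?php
// Assumption: the test simply dumps the build information of the loaded cURL module.
// Without the module, curl_version() is undefined and PHP stops with a fatal error,
// which is exactly the symptom this test is meant to reveal.
print_r(curl_version());
```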

Open it from the server. If the output is something like:

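Array ( [version_number] => 468736 [age] => 3 [features] => 3997 [ssl_version_number] => 0 [version] => 7.39.0 [host] => x86_64-pc-win32 [ssl_version] => OpenSSL/1.0.1j [libz_version] => 1.2.7.3 [protocols] => Array ( [0] => dict [1] => file [2] => ftp [3] => ftps [4] => gopher [5] => http [6] => https [7] => imap [8] => imaps [9] => ldap [10] => pop3 [11] => pop3s [12] => rtsp [13] => scp [14] => sftp [15] => smtp [16] => smtps [17] => telnet [18] => tftp ) )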

then cURL is fine. If you get a PHP error instead, there is a problem.

First, of course, check the php.ini file and find this line:

extension=php_curl.dll

Make sure it is not preceded by a semicolon.

If the line is uncommented and cURL still does not work, another test can confirm that the situation is unusual. Create another PHP file with this content:
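A minimal sketch of such a file, assuming it simply calls phpinfo(), whose output page has a separate section for every loaded module:

```php
<?php
// Assumption: the second test file only renders the PHP configuration page.
// A loaded cURL module shows up there as its own "curl" section.
phpinfo();
```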

Open it in the browser and search the page for cURL. If there is only one match, the cURL module is not loaded.

At the same time, both Apache and PHP work as usual.

Three solutions:

- Method one (not kosher). If you have PHP 5.6.*, take the old php_curl.dll file from PHP 5.6.0 and use it to replace the new one in your version, for example PHP 5.6.4. If you have PHP 5.5.17 or above, take the same file from PHP 5.5.16 and replace yours in the same way. The only difficulty is finding these old versions. You can, of course, poke around in http://windows.php.net/downloads/snaps/php-5.6 , but personally I did not find what I needed there. And the solution itself is somehow not quite kosher.

- Method two (very fast, but also not kosher). Copy the libssh2.dll file from the PHP directory to the Apache24\bin directory and restart Apache.

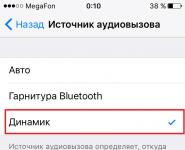

- Method three (kosher; kosher people give a standing ovation). Add your PHP directory to PATH. How to do this is described very well in the official documentation.

We check:

Voila, the cURL section is in place.

Why is that? Where did this problem come from? There is no definite answer, although the mechanism behind it has already been described.

The problem seems to be related to the fact that 5.6.1 was supposed to ship with an updated libcurl 7.38.0. But this is not known for certain: the PHP authors point at Apache, saying that there are some bugs there.

The mechanism of the problem: if the PHP directory is not included in the system PATH, then the Apache service, when it starts, cannot find the new DLL (libssh2.dll) that php_curl depends on.
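To see whether this mechanism applies to you, a script run under Apache can inspect the PATH that the server process actually sees. The sketch below is only an illustration: the helper name dir_in_path() and the directory C:/php are assumptions, so substitute your own PHP directory.

```php
<?php
// Hypothetical helper: report whether $dir is one of the entries of a PATH-style string.
function dir_in_path(string $dir, string $path, string $separator = PATH_SEPARATOR): bool
{
    // Normalize slashes, trailing separators and case so that "C:\PHP\" matches "c:/php".
    // Lowercasing is appropriate for Windows paths, which are case-insensitive.
    $normalize = function (string $p): string {
        return strtolower(rtrim(str_replace('\\', '/', trim($p)), '/'));
    };
    foreach (explode($separator, $path) as $entry) {
        if ($entry !== '' && $normalize($entry) === $normalize($dir)) {
            return true;
        }
    }
    return false;
}

// Assumption: PHP lives in C:/php; adjust to your installation.
$phpDir = 'C:/php';
$onPath = dir_in_path($phpDir, (string) getenv('PATH'));
echo $phpDir, $onPath ? " is on PATH\n" : " is NOT on PATH\n";
```

If the directory is reported as missing when the script runs under Apache, the service will not be able to locate libssh2.dll by itself.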

Relevant bug reports:

Fatal error: Call to undefined function curl_multi_init() in ...

In general, cURL in PHP seems to have had problems, if not always, then very often. While googling my problem, I came across threads, some of which were more than a dozen years old.

Googling also led to a few more conclusions:

The Internet is full of "instructions for morons" that explain in detail, with pictures, how to uncomment the extension=php_curl.dll line in the php.ini file.

On the official PHP site, the section on installing cURL has only two sentences about Windows:

To use this module on Windows, libeay32.dll and ssleay32.dll must be present in your PATH. You do not need libcurl.dll from the cURL site.

I read them ten times. Then I switched to the English version and read them a few more times in English. Each time I became more convinced that these two sentences were written by animals, or that someone just jumped on the keyboard: I do not understand what they mean.

There are also some crazy tips and instructions (I even managed to try some).

On the PHP bug tracker, I finally came close to the answer: the PHP directory needs to be included in the PATH system variable.

In general, if you have a problem with cURL and need to "include the PHP directory in the PATH system variable", go to the instruction already mentioned above: http://php.net/manual/ru/faq.installation.php#faq.installation.addtopath . Everything there is simple and, most importantly, what needs to be done is written in human language.

cURL is a tool designed to transfer files and data using URL syntax. It supports many protocols, such as HTTP, FTP, TELNET and many others. cURL was originally designed as a command-line tool. Luckily for us, the cURL library is supported by the PHP programming language. In this article, we will look at some of the advanced cURL features and put the acquired knowledge into practice using PHP.

Why cURL?

In fact, there are many alternative ways to fetch the content of a web page. In many cases, mostly out of laziness, I have used simple PHP functions instead of cURL:

    $content = file_get_contents("http://www.nettuts.com");
    // or
    $lines = file("http://www.nettuts.com");
    // or
    readfile("http://www.nettuts.com");

However, these functions have virtually no flexibility and contain a huge number of shortcomings in terms of error handling and so on. In addition, there are certain tasks that you simply cannot solve with these standard functions: interacting with cookies, authentication, submitting a form, uploading files, and so on.

cURL is a powerful library that supports many different protocols, options, and provides detailed information about URL requests.

Basic structure

- Initialization

- Assigning parameters

- Execution and fetching the result

- Freeing up memory

    // 1. initialization
    $ch = curl_init();

    // 2. specify options, including the URL
    curl_setopt($ch, CURLOPT_URL, "http://www.nettuts.com");
    curl_setopt($ch, CURLOPT_RETURNTRANSFER, 1);
    curl_setopt($ch, CURLOPT_HEADER, 0);

    // 3. execute and get the HTML as the result
    $output = curl_exec($ch);

    // 4. close the connection
    curl_close($ch);

Step #2 (that is, calling curl_setopt()) will get much more attention in this article than all the other steps, because this is where all the most interesting and useful things happen. cURL has a huge number of options that let you configure a URL request in fine detail. We will not go through the entire list, but will focus only on what I consider necessary and useful for this lesson. You can explore everything else yourself if the topic interests you.

Error Check

Additionally, you can use a conditional statement to check whether the operation was successful:

    // ...
    $output = curl_exec($ch);
    if ($output === FALSE) {
        echo "cURL Error: " . curl_error($ch);
    }
    // ...

Please note a very important point here: we must use “=== false” for the comparison rather than “== false”. For those not in the know, this lets us distinguish an empty result from the boolean value false, which indicates an error.
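The difference is easy to see without making any request. In this small sketch the two variables are only stand-ins for what curl_exec() can return when CURLOPT_RETURNTRANSFER is enabled:

```php
<?php
// Stand-ins for the possible return values of curl_exec():
$emptyBody = '';     // a successful request whose response body is empty
$failure   = false;  // a failed request

var_dump($emptyBody == false);   // bool(true): loose comparison mistakes success for failure
var_dump($emptyBody === false);  // bool(false): strict comparison sees a string, not an error
var_dump($failure === false);    // bool(true): only a real failure matches
```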

Receiving the information

Another optional step is to get information about the cURL request after it has been executed.

    // ...
    curl_exec($ch);
    $info = curl_getinfo($ch);
    echo "Took " . $info["total_time"] . " seconds for url " . $info["url"];
    // ...

The returned array contains the following information:

- "url"

- "content_type"

- "http_code"

- "header_size"

- "request_size"

- "filetime"

- "ssl_verify_result"

- "redirect_count"

- "total_time"

- "namelookup_time"

- "connect_time"

- "pretransfer_time"

- "size_upload"

- "size_download"

- "speed_download"

- "speed_upload"

- "download_content_length"

- "upload_content_length"

- "starttransfer_time"

- "redirect_time"
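As an illustration of how these keys can be used, here is a small sketch that condenses a few of them into one line. The helper summarize_transfer() is hypothetical, and the sample array holds made-up values that only show the shape of what curl_getinfo() returns:

```php
<?php
// Hypothetical helper: turn a curl_getinfo()-style array into a one-line summary.
function summarize_transfer(array $info): string
{
    return sprintf(
        '%s -> HTTP %d, %s, %d bytes in %.2f s',
        $info['url'],
        $info['http_code'],
        $info['content_type'] ?? 'unknown type',
        $info['size_download'],
        $info['total_time']
    );
}

// Made-up sample values, not the result of a real request.
$sample = array(
    'url'           => 'http://www.example.com/',
    'http_code'     => 200,
    'content_type'  => 'text/html',
    'size_download' => 1270,
    'total_time'    => 0.25,
);
echo summarize_transfer($sample), "\n";
```

With a real request you would pass curl_getinfo($ch) instead of the sample array.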

Redirect detection depending on the browser

In this first example, we will write code that detects URL redirects that depend on the browser settings. For example, some websites redirect the browsers of cell phones or other devices.

We are going to use the CURLOPT_HTTPHEADER option to set our outgoing HTTP headers, including the browser's user agent and the accepted languages. In the end, we will be able to determine which sites redirect us to different URLs.

    // test URLs
    $urls = array(
        "http://www.cnn.com",
        "http://www.mozilla.com",
        "http://www.facebook.com"
    );

    // browsers to test with
    $browsers = array(
        "standard" => array(
            "user_agent" => "Mozilla/5.0 (Windows; U; Windows NT 6.1; en-US; rv:1.9.1.6) Gecko/20091201 Firefox/3.5.6 (.NET CLR 3.5.30729)",
            "language" => "en-us,en;q=0.5"
        ),
        "iphone" => array(
            "user_agent" => "Mozilla/5.0 (iPhone; U; CPU like Mac OS X; en) AppleWebKit/420+ (KHTML, like Gecko) Version/3.0 Mobile/1A537a Safari/419.3",
            "language" => "en"
        ),
        "french" => array(
            "user_agent" => "Mozilla/4.0 (compatible; MSIE 7.0; Windows NT 5.1; GTB6; .NET CLR 2.0.50727)",
            "language" => "fr,fr-FR;q=0.5"
        )
    );

    foreach ($urls as $url) {
        echo "URL: $url\n";

        foreach ($browsers as $test_name => $browser) {
            $ch = curl_init();

            // specify the URL
            curl_setopt($ch, CURLOPT_URL, $url);

            // set the browser-specific headers
            curl_setopt($ch, CURLOPT_HTTPHEADER, array(
                "User-Agent: {$browser['user_agent']}",
                "Accept-Language: {$browser['language']}"
            ));

            // we don't need the page content
            curl_setopt($ch, CURLOPT_NOBODY, 1);

            // we need the HTTP headers
            curl_setopt($ch, CURLOPT_HEADER, 1);

            // return the result instead of printing it
            curl_setopt($ch, CURLOPT_RETURNTRANSFER, 1);

            $output = curl_exec($ch);
            curl_close($ch);

            // was there an HTTP redirect?
            if (preg_match("!Location: (.*)!", $output, $matches)) {
                echo "$test_name: redirects to $matches[1]\n";
            } else {
                echo "$test_name: no redirection\n";
            }
        }
        echo "\n\n";
    }

First, we specify the list of URLs that we are going to check. Next, we define the browser settings with which each of these URLs will be tested. After that, a pair of nested loops runs every URL against every browser.

The trick in the way we set the cURL options is that we get only the HTTP headers (stored in $output), not the content of the page. Then, using a simple regex, we can determine whether the string “Location:” is present in the received headers.
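The header check can be tried on its own, without any network access. The sketch below uses a hypothetical helper, find_redirect(), and made-up response headers. Its pattern is slightly stricter than a bare "Location: (.*)": it matches only at the start of a line and trims the trailing carriage return.

```php
<?php
// Hypothetical helper: return the target of a "Location:" header, or null if there is none.
function find_redirect(string $headers): ?string
{
    // "i" makes the header name case-insensitive, "m" lets ^ and $ match at line boundaries.
    if (preg_match('/^Location:\s*(.+?)\s*$/mi', $headers, $matches)) {
        return $matches[1];  // the first capture group holds the URL
    }
    return null;
}

// Made-up response headers for illustration.
$headers = "HTTP/1.1 302 Found\r\nLocation: http://m.example.com/\r\nContent-Length: 0\r\n\r\n";
var_dump(find_redirect($headers));
var_dump(find_redirect("HTTP/1.1 200 OK\r\n\r\n"));
```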

When you run this code, you should get something like this:

Making a POST request to a specific URL

When making a GET request, the data can be passed in the URL via a "query string". For example, when you do a Google search, the search term is placed in the address of the new URL:

http://www.google.com/search?q=ruseller

You don't need cURL to imitate this kind of request. If laziness finally gets the better of you, use the “file_get_contents()” function to get the result.

But the thing is, some HTML forms send POST requests. The data of these forms is transported through the body of the HTTP request, and not as in the previous case. For example, if you filled out a form on a forum and clicked on the search button, then most likely a POST request will be made:

http://codeigniter.com/forums/do_search/

We can write a PHP script that mimics this kind of request. First, let's create a simple file to accept and display the POST data. Let's call it post_output.php:

    print_r($_POST);

We then create a PHP script to execute the cURL request:

    $url = "http://localhost/post_output.php";

    $post_data = array(
        "foo" => "bar",
        "query" => "Nettuts",
        "action" => "Submit"
    );

    $ch = curl_init();
    curl_setopt($ch, CURLOPT_URL, $url);
    curl_setopt($ch, CURLOPT_RETURNTRANSFER, 1);
    // indicate that this is a POST request
    curl_setopt($ch, CURLOPT_POST, 1);
    // add the POST variables
    curl_setopt($ch, CURLOPT_POSTFIELDS, $post_data);

    $output = curl_exec($ch);
    curl_close($ch);

    echo $output;

When you run this script, you should get a similar result:

Thus, the POST request was sent to the post_output.php script, which in turn outputted the $_POST superglobal array, the contents of which we obtained using cURL.
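One detail worth knowing: when CURLOPT_POSTFIELDS receives an array, cURL encodes the request as multipart/form-data. If the receiving script expects an ordinary URL-encoded form, the array can be turned into a string first. A minimal sketch with the same sample data:

```php
<?php
$post_data = array("foo" => "bar", "query" => "Nettuts", "action" => "Submit");

// http_build_query() URL-encodes the array into a single string.
$encoded = http_build_query($post_data);
echo $encoded, "\n";  // foo=bar&query=Nettuts&action=Submit

// Passing the string instead of the array makes cURL send
// application/x-www-form-urlencoded rather than multipart/form-data:
// curl_setopt($ch, CURLOPT_POSTFIELDS, $encoded);
```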

File upload

First, let's create a file that will receive the upload and display information about it. Let's call it upload_output.php:

    print_r($_FILES);

And here is the script that performs the upload:

    $url = "http://localhost/upload_output.php";

    $post_data = array(
        "foo" => "bar",
        // file to upload
        "upload" => "@C:/wamp/www/test.zip"
    );

    $ch = curl_init();
    curl_setopt($ch, CURLOPT_URL, $url);
    curl_setopt($ch, CURLOPT_RETURNTRANSFER, 1);
    curl_setopt($ch, CURLOPT_POST, 1);
    curl_setopt($ch, CURLOPT_POSTFIELDS, $post_data);

    $output = curl_exec($ch);
    curl_close($ch);

    echo $output;

When you want to upload a file, all you have to do is pass it in as a regular post variable, preceded by the @ symbol. When you run the written script, you will get the following result:
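Keep in mind that the @ prefix was deprecated in PHP 5.5 and removed in PHP 7.0; newer versions use the CURLFile class instead. The following sketch, with a hypothetical helper named upload_field(), picks whichever form the running PHP build supports:

```php
<?php
// Hypothetical helper: build the upload field in the form this PHP build supports.
function upload_field($path)
{
    if (class_exists('CURLFile')) {
        // PHP 5.5 and later: wrap the path in a CURLFile object.
        return new CURLFile($path);
    }
    // Older PHP: fall back to the "@" prefix described above.
    return '@' . $path;
}

$post_data = array(
    "foo"    => "bar",
    "upload" => upload_field("C:/wamp/www/test.zip"),
);
```

The resulting $post_data array is passed to CURLOPT_POSTFIELDS exactly as before.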

Multiple cURL

One of cURL's greatest strengths is the ability to create "multi" cURL handles. This allows you to open connections to several URLs simultaneously and asynchronously.

With a classic cURL request, script execution is suspended until the request completes, and only then does the script continue. If you intend to work with a large number of URLs, this takes quite a lot of time, because in the classic case you can only work with one URL at a time. However, we can fix this by using multi handles.

Let's take a look at a code example I took from php.net:

    // create two cURL resources
    $ch1 = curl_init();
    $ch2 = curl_init();

    // specify the URLs and other options
    curl_setopt($ch1, CURLOPT_URL, "http://lxr.php.net/");
    curl_setopt($ch1, CURLOPT_HEADER, 0);
    curl_setopt($ch2, CURLOPT_URL, "http://www.php.net/");
    curl_setopt($ch2, CURLOPT_HEADER, 0);

    // create the multi handle
    $mh = curl_multi_init();

    // add the two handles to it
    curl_multi_add_handle($mh, $ch1);
    curl_multi_add_handle($mh, $ch2);

    $active = null;
    // execute the handles
    do {
        $mrc = curl_multi_exec($mh, $active);
    } while ($mrc == CURLM_CALL_MULTI_PERFORM);

    while ($active && $mrc == CURLM_OK) {
        if (curl_multi_select($mh) != -1) {
            do {
                $mrc = curl_multi_exec($mh, $active);
            } while ($mrc == CURLM_CALL_MULTI_PERFORM);
        }
    }

    // close the handles
    curl_multi_remove_handle($mh, $ch1);
    curl_multi_remove_handle($mh, $ch2);
    curl_multi_close($mh);

The idea is that you can use several cURL handles at once. Using a simple loop, you can keep track of which requests have not yet completed.

In this example, there are two main loops. The first do-while loop calls the curl_multi_exec() function. This function is non-blocking: it executes as fast as it can and returns the state of the request. As long as the returned value is the constant CURLM_CALL_MULTI_PERFORM, the work has not been completed yet (for example, HTTP headers are being sent to the URL at this moment). That is why we keep checking this return value until we get a different result.

In the next loop, we check the condition while $active is true. $active is the second parameter of the curl_multi_exec() function; it stays true as long as any of the existing connections is active. Next, we call the curl_multi_select() function. It blocks while there are active connections, until there is some activity on one of them, such as a received response. When this happens, we go back to the inner loop to continue executing the requests.
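With libcurl 7.20.0 and later, curl_multi_exec() no longer returns CURLM_CALL_MULTI_PERFORM, so the two loops can be folded into one. The sketch below wraps that shorter loop in a hypothetical helper, fetch_all(); treat it as an illustration of the idea rather than a replacement for the php.net example.

```php
<?php
// Hypothetical helper: fetch several URLs in parallel and return the bodies keyed by URL.
function fetch_all(array $urls): array
{
    $mh = curl_multi_init();
    $handles = array();
    foreach ($urls as $url) {
        $ch = curl_init($url);
        curl_setopt($ch, CURLOPT_RETURNTRANSFER, 1);
        curl_multi_add_handle($mh, $ch);
        $handles[$url] = $ch;
    }

    do {
        // Non-blocking: advance every transfer as far as possible right now.
        $status = curl_multi_exec($mh, $active);
        if ($active) {
            // Sleep until there is activity on a connection (or the default timeout expires).
            curl_multi_select($mh);
        }
    } while ($active && $status == CURLM_OK);

    $results = array();
    foreach ($handles as $url => $ch) {
        // Body of the finished transfer, as collected via CURLOPT_RETURNTRANSFER.
        $results[$url] = curl_multi_getcontent($ch);
        curl_multi_remove_handle($mh, $ch);
        curl_close($ch);
    }
    curl_multi_close($mh);
    return $results;
}
```

A call such as fetch_all(array("http://lxr.php.net/", "http://www.php.net/")) would download both pages at the same time.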

Now let's apply what we learned with an example that will be really useful for a large number of people.

Checking Links in WordPress

Imagine a blog with a huge number of posts and messages, each of which has links to external Internet resources. Some of these links might already be "dead" for various reasons. Perhaps the page has been deleted or the site is not working at all.

We're going to create a script that will parse all links and find websites that aren't loading and 404 pages, and then provide us with a very detailed report.

I will say right away that this is not an example of creating a plugin for WordPress. WordPress is simply a good testing ground for us.

Let's finally get started. First, we have to fetch all the links from the database:

    // configuration
    $db_host = "localhost";
    $db_user = "root";
    $db_pass = "";
    $db_name = "wordpress";
    $excluded_domains = array("localhost", "www.mydomain.com");
    $max_connections = 10;

    // variable initialization
    $url_list = array();
    $working_urls = array();
    $dead_urls = array();
    $not_found_urls = array();
    $active = null;

    // connect to MySQL (mysqli is used because the mysql_* functions were removed in PHP 7)
    $db = mysqli_connect($db_host, $db_user, $db_pass, $db_name);
    if (!$db) {
        die("Could not connect: " . mysqli_connect_error());
    }

    // select all published posts that contain links
    $q = "SELECT post_content FROM wp_posts
          WHERE post_content LIKE '%href=%'
          AND post_status = 'publish'
          AND post_type = 'post'";
    $r = mysqli_query($db, $q) or die(mysqli_error($db));

    while ($d = mysqli_fetch_assoc($r)) {
        // fetch the links using a regular expression
        if (preg_match_all("!href=\"(.*?)\"!", $d["post_content"], $matches)) {
            // $matches[1] holds the captured URLs
            foreach ($matches[1] as $url) {
                // skip excluded domains
                $tmp = parse_url($url);
                if (isset($tmp["host"]) && in_array($tmp["host"], $excluded_domains)) {
                    continue;
                }
                $url_list[] = $url;
            }
        }
    }

    // remove duplicates
    $url_list = array_values(array_unique($url_list));

    if (!$url_list) {
        die("No URL to check");
    }

First, we set up the configuration data for the database, then we list the domains that will be excluded from the check ($excluded_domains). We also define the maximum number of simultaneous connections that the script will use ($max_connections). We then connect to the database, select the posts that contain links, and collect the links into an array ($url_list).
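The link-collecting step can be tested separately from the database. The sketch below uses a hypothetical helper, extract_links(), and a made-up HTML fragment. Unlike the description above, it also skips relative links, which have no host to check.

```php
<?php
// Hypothetical helper: collect href targets from HTML, skipping excluded hosts.
function extract_links(string $html, array $excluded_domains): array
{
    $links = array();
    // $matches[0] holds the full matches, $matches[1] the captured URLs.
    if (preg_match_all('!href="(.*?)"!', $html, $matches)) {
        foreach ($matches[1] as $url) {
            $host = parse_url($url, PHP_URL_HOST);
            // Relative or malformed links have no host; skip them as well.
            if (!$host || in_array($host, $excluded_domains)) {
                continue;
            }
            $links[] = $url;
        }
    }
    // Remove duplicates and reindex from zero.
    return array_values(array_unique($links));
}

// Made-up post content for illustration.
$html = '<a href="http://localhost/a">a</a> <a href="http://example.org/x">x</a> '
      . '<a href="/about">about</a> <a href="http://example.org/x">again</a>';
print_r(extract_links($html, array("localhost", "www.mydomain.com")));
```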

The following code is a bit complex, so read it carefully from start to finish:

    // 1. multi handle
    $mh = curl_multi_init();

    // 2. add the first batch of URLs
    for ($i = 0; $i < $max_connections; $i++) {
        add_url_to_multi_handle($mh, $url_list);
    }

    // 3. start execution
    do {
        $mrc = curl_multi_exec($mh, $active);
    } while ($mrc == CURLM_CALL_MULTI_PERFORM);

    // 4. main loop
    while ($active && $mrc == CURLM_OK) {

        // 5. if everything went fine
        if (curl_multi_select($mh) != -1) {

            // 6. do the work
            do {
                $mrc = curl_multi_exec($mh, $active);
            } while ($mrc == CURLM_CALL_MULTI_PERFORM);

            // 7. is there any info?
            if ($mhinfo = curl_multi_info_read($mh)) {
                // this means that a request has finished

                // 8. extract the info
                $chinfo = curl_getinfo($mhinfo["handle"]);

                // 9. dead link?
                if (!$chinfo["http_code"]) {
                    $dead_urls[] = $chinfo["url"];

                // 10. 404?
                } else if ($chinfo["http_code"] == 404) {
                    $not_found_urls[] = $chinfo["url"];

                // 11. working
                } else {
                    $working_urls[] = $chinfo["url"];
                }

                // 12. clean up
                curl_multi_remove_handle($mh, $mhinfo["handle"]);
                // if the script goes into an endless loop, comment out this call
                curl_close($mhinfo["handle"]);

                // 13. add a new URL and keep going
                if (add_url_to_multi_handle($mh, $url_list)) {
                    do {
                        $mrc = curl_multi_exec($mh, $active);
                    } while ($mrc == CURLM_CALL_MULTI_PERFORM);
                }
            }
        }
    }

    // 14. finish
    curl_multi_close($mh);

    echo "==Dead URLs==\n";
    echo implode("\n", $dead_urls) . "\n\n";

    echo "==404 URLs==\n";
    echo implode("\n", $not_found_urls) . "\n\n";

    echo "==Working URLs==\n";
    echo implode("\n", $working_urls);

    // 15. adds a URL to the multi handle
    function add_url_to_multi_handle($mh, $url_list) {
        static $index = 0;

        // if there are URLs left to check
        if (isset($url_list[$index])) {
            // new cURL handle
            $ch = curl_init();

            // specify the URL
            curl_setopt($ch, CURLOPT_URL, $url_list[$index]);
            curl_setopt($ch, CURLOPT_RETURNTRANSFER, 1);
            curl_setopt($ch, CURLOPT_FOLLOWLOCATION, 1);
            curl_setopt($ch, CURLOPT_NOBODY, 1);

            curl_multi_add_handle($mh, $ch);

            // move on to the next URL
            $index++;

            return true;
        } else {
            // no more URLs to add
            return false;
        }
    }

Let me break it all down. The numbers in the list correspond to the numbers in the comments.

- 1. Create the multi handle;

- 2. We will write the add_url_to_multi_handle() function a little later. Each time it is called, a new URL is added for processing. Initially, we add 10 ($max_connections) URLs;

- 3. To get things started, we must run the curl_multi_exec() function. As long as it returns CURLM_CALL_MULTI_PERFORM, there is still work to do. We need this mainly to set up the connections;

- 4. Next comes the main loop, which runs as long as we have at least one active connection;

- 5. curl_multi_select() blocks, waiting for activity on any of the connections;

- 6. Once again, we need to get cURL to do some work, namely to fetch the returned response data;

- 7. Here we check for information. When a request has finished, an array is returned;

- 8. The returned array contains a cURL handle. We use it to fetch information about that particular cURL request;

- 9. If the link is dead, or the request timed out, there will be no HTTP code;

- 10. If the link returned a 404 page, the HTTP code will be 404;

- 11. Otherwise, we have a working link. (You can add further checks for error code 500 and so on);

- 12. Next, we remove the cURL handle because we no longer need it;

- 13. Now we can add another URL and repeat everything described above;

- 14. At this step, the script finishes its work. We can clean up whatever we no longer need and generate the report;

- 15. Finally, we write the function that adds a URL to the multi handle. The static variable $index is incremented every time this function is called.
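Steps 9 to 11 boil down to a three-way decision on the HTTP code. It can be written as a small standalone function; the name classify_http_code() and the bucket labels are hypothetical and serve only to illustrate the logic:

```php
<?php
// Hypothetical helper: sort a finished request into one of the three report buckets.
function classify_http_code(int $http_code): string
{
    if (!$http_code) {
        return 'dead';       // 0: no HTTP response at all (DNS failure, timeout and so on)
    }
    if ($http_code == 404) {
        return 'not_found';  // the server answered, but the page does not exist
    }
    return 'working';        // everything else, including codes such as 500
}

echo classify_http_code(0), "\n";
echo classify_http_code(404), "\n";
echo classify_http_code(200), "\n";
```

Further checks, for example for code 500, would be added as extra branches before the final return.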

I ran this script on my blog (with a few broken links added on purpose to test it) and got the following result:

In my case, the script took just under 2 seconds to run through 40 URLs. The performance gain becomes significant when working with a larger number of URLs. If you open ten connections at the same time, the script can run up to ten times faster.

A few words about other useful cURL options

HTTP Authentication

If the URL is protected by HTTP authentication, you can use the following script:

    $url = "http://www.somesite.com/members/";

    $ch = curl_init();
    curl_setopt($ch, CURLOPT_URL, $url);
    curl_setopt($ch, CURLOPT_RETURNTRANSFER, 1);

    // specify the username and password
    curl_setopt($ch, CURLOPT_USERPWD, "myusername:mypassword");

    // follow redirects, if any
    curl_setopt($ch, CURLOPT_FOLLOWLOCATION, 1);

    // keep sending the username and password after a redirect
    curl_setopt($ch, CURLOPT_UNRESTRICTED_AUTH, 1);

    $output = curl_exec($ch);
    curl_close($ch);

FTP upload

PHP also has a library for working with FTP, but nothing prevents you from using cURL here as well:

    // open the file
    $file = fopen("/path/to/file", "r");

    // the URL must contain the login details, in the form username:password@host
    $url = "ftp://username: [email protected]:21/path/to/new/file";

    $ch = curl_init();
    curl_setopt($ch, CURLOPT_URL, $url);
    curl_setopt($ch, CURLOPT_RETURNTRANSFER, 1);

    // upload-related options
    curl_setopt($ch, CURLOPT_UPLOAD, 1);
    curl_setopt($ch, CURLOPT_INFILE, $file);
    curl_setopt($ch, CURLOPT_INFILESIZE, filesize("/path/to/file"));

    // use ASCII mode
    curl_setopt($ch, CURLOPT_FTPASCII, 1);

    $output = curl_exec($ch);
    curl_close($ch);

Using a Proxy

You can make your URL request through a proxy:

    $ch = curl_init();
    curl_setopt($ch, CURLOPT_URL, "http://www.example.com");
    curl_setopt($ch, CURLOPT_RETURNTRANSFER, 1);

    // specify the proxy address
    curl_setopt($ch, CURLOPT_PROXY, "11.11.11.11:8080");

    // if you need to provide a username and password
    curl_setopt($ch, CURLOPT_PROXYUSERPWD, "user:pass");

    $output = curl_exec($ch);
    curl_close($ch);

Callbacks

It is also possible to specify a function that will be called before the cURL request has finished. For example, while the content of a response is still loading, you can start using the data without waiting for it to load completely.

    $ch = curl_init();
    curl_setopt($ch, CURLOPT_URL, "http://net.tutsplus.com");
    curl_setopt($ch, CURLOPT_WRITEFUNCTION, "progress_function");
    curl_exec($ch);
    curl_close($ch);

    function progress_function($ch, $str) {
        echo $str;
        return strlen($str);
    }

Such a function MUST return the length of the string, which is a requirement.
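The contract of such a callback can be tried without a network connection. In this sketch the closure $collect_chunk is a hypothetical write callback, and the two direct calls merely simulate cURL delivering the response in pieces:

```php
<?php
// Collects the response body chunk by chunk.
$received = '';

// cURL calls the write function once per chunk; the first argument is the cURL handle.
$collect_chunk = function ($ch, $chunk) use (&$received) {
    $received .= $chunk;
    // Returning anything other than the chunk length aborts the transfer.
    return strlen($chunk);
};

// With a real request it would be registered like this:
// curl_setopt($ch, CURLOPT_WRITEFUNCTION, $collect_chunk);

// Simulate two incoming chunks without touching the network.
$collect_chunk(null, "Hello, ");
$collect_chunk(null, "world");
echo $received, "\n";  // Hello, world
```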

Conclusion

Today we got acquainted with how you can use the cURL library for your own selfish purposes. I hope you enjoyed this article.

Thank you! Have a good day!

We have: php 5.2.3, Windows XP, Apache 1.3.33

Problem: the cURL module is not detected when PHP is run under Apache.

In php.ini extension=php_curl.dll is uncommented, extension_dir is set correctly,

libeay32.dll and ssleay32.dll are copied to c:\windows\system32.

However, the phpinfo() function does not show the cURL module among the installed ones, and when Apache starts, the following is written to the log:

PHP Startup: Unable to load dynamic library "c:/php/ext/php_curl.dll" - The specified module could not be found.

If you run PHP from the command line, scripts that use cURL functions work fine, but under Apache they produce the following:

Fatal error: Call to undefined function: curl_init() - and this happens regardless of how PHP is installed, as CGI or as a module.

On the Internet, I repeatedly came across descriptions of this problem, specifically for the cURL module, but the solutions proposed there do not help. I have already upgraded from PHP 5.2 to PHP 5.2.3, and it still did not help.

David Mzareulyan

I have only one php.ini; I checked by searching the disk. That the same php.ini is used in both cases is also easily confirmed by the fact that changes made to it affect scripts run under Apache as well as scripts run from the command line.

Daniil Ivanov

Better to make a file with a call

and open it in a browser.

And then run php -i | grep ini and check the paths to php.ini as PHP sees them, not by the presence of the file on disk.

Daniil Ivanov

What does php -i output? The default binary may look for the config elsewhere, depending on the compilation options. It is not the first time I have seen mod_php.dll and php.exe read different ini files, so that what works in one does not work in the other.

Vasily Sviridov

php -i produces the following:

Configuration File (php.ini) Path => C:\WINDOWS

Loaded Configuration File => C:\PHP\php.ini

Moving the php.ini file to the Windows directory does not change the situation.

Daniil Ivanov

How about the rest of the modules? For example php_mysql? Does it load? Or is it just cURL that is so nasty?

Hmm, it doesn't load for me either... On a very different configuration (Apache 2.2 plus PHP 5.1.6 under Zend Studio). But that's not the point. An experiment with running Apache from the command line (more precisely, from FAR) showed something interesting. Without cURL enabled, everything starts up together fine. With cURL enabled, it gives an error in ... php5ts.dll.

Hello!

I had a similar problem. I looked for a solution for a long time, installed a newer version of PHP, and eventually found this forum. There was no solution here, so I kept trying on my own.

I had installed Zend Studio, and before that there was an earlier version of PHP. Perhaps one of them installed its own libraries, and they stayed there, outdated.

Thanks for the tips, especially the last one from "Nehxby". I looked in C:\windows\system32 and found that the libeay32.dll and ssleay32.dll libraries there are not the same size as the original ones. I had installed memcached; maybe it happened after that. So, if you have installed something extra, go check system32 :)

I had the same problem and used the command php -i | grep ini

It showed that the zlib1.dll library was missing.

It was in the Apache folder, so I put a copy in the PHP folder.

I repeated the command, and it showed that the zlib.dll library was missing; I copied it to the Apache folder and it all worked.

php5ts.dll was also among the required libraries, so make sure all the necessary libraries are present.

I decided to add my two cents, because I ran into this problem too. I came across this forum through a link on another site. In general, all the proposed options are nothing more than crutches. On Windows, the essence of the solution is to set the PATH variable so that it points to where your PHP is installed. And hallelujah: cURL no longer throws any errors, and neither do other libraries...