Finite impulse response filter. Question

Physically realizable digital filters operate in real time, so the following data can be used to form the output signal at the i-th discrete time instant:

1. The value of the input signal at the current time, x(i), and a certain number of past input samples: x(i-1), x(i-2), ..., x(i-m);

2. A certain number of previous output samples: y(i-1), y(i-2), ..., y(i-n).

The integers m and n define the order of the digital filter. Filters are classified depending on how information about the past state of the system is used.

FIR filters, also called non-recursive filters, operate according to the following algorithm:

$$y(i) = \sum_{k=0}^{m} a_k\, x(i-k),$$

where m is the filter order and $a_k$ are the weighting coefficients.

A non-recursive filter forms a weighted sum of the current and previous samples of the input signal. Past samples of the output signal are not used.

The system function of the filter is

$$H(z) = \sum_{k=0}^{m} a_k\, z^{-k}.$$

The system function has m zeros and a single pole of multiplicity m at z = 0.
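The non-recursive algorithm described above can be sketched in a few lines of Python. This is only an illustration: the function name `fir_filter`, the weight list `a` and the test signal are assumptions, and samples before the start of the input are taken to be zero.

```python
def fir_filter(x, a):
    """Non-recursive (FIR) filter: y(i) = sum_k a[k] * x(i - k).

    x : list of input samples; a : list of m + 1 weights (hypothetical names).
    Samples before the start of x are assumed to be zero.
    """
    y = []
    for i in range(len(x)):
        acc = 0.0
        for k, weight in enumerate(a):
            if i - k >= 0:          # use only samples that already exist
                acc += weight * x[i - k]
        y.append(acc)
    return y


# A 3-point averaging filter (order m = 2) applied to a step
step = [0.0, 0.0, 1.0, 1.0, 1.0, 1.0]
weights = [1 / 3, 1 / 3, 1 / 3]
smoothed = fir_filter(step, weights)
```

Feeding a single unit sample into `fir_filter` returns the weights themselves, which is the sense in which the impulse response of such a filter is finite.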

The operating algorithm of an FIR digital filter is shown in Fig. 45.

The main elements of the filter are delay blocks, each of which delays the sample values by one sampling interval.

Scaling blocks multiply the samples by the weighting coefficients. From the outputs of the scaling blocks the signal enters the adder, where the output signal is calculated.

This block diagram is not an electrical circuit; it is a graphical representation of a signal processing algorithm executed on a computer. The input and output data of such an algorithm are arrays of numbers.

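Applying the inverse Z-transform to the system function, we find the impulse response:

$$h(k) = a_k, \quad k = 0, 1, \dots, m,$$

that is, the samples of the filter impulse response are the weighting coefficients $a_k$ of the filter.

The impulse response of an FIR filter contains a finite number of elements, so the filter is always stable.

The frequency response is found by substituting $z = e^{j\omega T}$ into H(z), where T = 1/fs is the sampling interval.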
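As a rough illustration of this substitution, the sketch below evaluates the system function on the unit circle. The function name, the two-point averaging weights and the 1 kHz sampling rate are assumptions chosen only for the example.

```python
import cmath
import math


def frequency_response(a, f, fs):
    """Evaluate H(z) = sum_k a[k] z^-k at z = exp(j*2*pi*f*T), T = 1/fs."""
    T = 1.0 / fs
    z = cmath.exp(1j * 2 * math.pi * f * T)
    return sum(coef * z ** (-k) for k, coef in enumerate(a))


# Two-point averager: passes DC, blocks the Nyquist frequency fs/2
a = [0.5, 0.5]
fs = 1000.0                      # assumed sampling rate, Hz
gain_dc = abs(frequency_response(a, 0.0, fs))
gain_nyquist = abs(frequency_response(a, fs / 2, fs))
```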

It all started when a friend of a friend of a friend of mine needed help with these very filters. Through Jedi channels, rumors of this reached me, and I replied in the comments to the post at the link. It seems to have helped. Well, I hope so.

This story stirred up memories of my third year or so, when I took the DSP course myself, and inspired me to write an article for everyone who is interested in how digital filters work but is understandably scared off by the hair-raising formulas and psychedelic drawings (not to mention the textbooks).

In general, in my experience, the situation with textbooks is described by the well-known saying about not seeing the forest for the trees. And indeed, when they immediately start scaring you with the Z-transform and formulas with polynomial division, often longer than two blackboards, interest in the topic dries up extremely quickly. We will start simple, since you don't need to write long, complex expressions to understand what is going on.

So, for starters, a few simple basic concepts.

1. Impulse response.

Let's say we have a box with four terminals. We have no idea what is inside, but we know for certain that the two left terminals are the input and the two right ones are the output. Let's apply a very short pulse of very large amplitude to it and see what happens at the output. Why not? We still don't know what is inside this two-port network or how to describe it, but this way we will at least see something.

Here it must be said that a short (strictly speaking, infinitely short) pulse of large (strictly speaking, infinite) amplitude is called a delta function in theory. By the way, the funny thing is that the integral of this infinite function is equal to one. Such is the normalization.

So, what we see at the output of the two-port network after applying the delta function to its input is called the impulse response of this network. It is not yet clear how this will help us, so for now let's just remember the result and move on to the next interesting concept.

2. Convolution.

In short, convolution is a mathematical operation that boils down to integrating the product of two functions:

$$(f * g)(t) = \int_{-\infty}^{\infty} f(\tau)\, g(t - \tau)\, d\tau$$

It is denoted, as you can see, by an asterisk. You can also see that in a convolution one function is taken in its "direct" order, while the second one is traversed "back to front". Of course, in the discrete case, which is more valuable for humanity, the convolution, like any integral, turns into a sum:

$$(f * g)[n] = \sum_{m=-\infty}^{\infty} f[m]\, g[n - m]$$

It would seem to be some dull mathematical abstraction. In fact, however, convolution is perhaps the most magical phenomenon in this world, second in wonder only to the birth of a human being, with the sole difference that most people find out where children come from by the age of eighteen at the latest, while a huge part of the world's population goes through life with absolutely no idea what convolution is or why it is useful and amazing.

So, the power of this operation lies in the fact that if f is an arbitrary input signal and g is the impulse response of the two-port network, then the convolution of these two functions is exactly what we would get by passing the signal f through this network (provided the network is linear and its properties do not change in time).

That is, the impulse response is a complete imprint of all the properties of the network with respect to the input signal, and convolving the input signal with it lets you reconstruct the corresponding output signal. If you ask me, that's simply amazing!
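To make this less abstract, here is a small sketch of the discrete case. The "box" is represented by a made-up three-sample impulse response; the names `convolve`, `g`, `f` and `delta` are chosen for illustration only.

```python
def convolve(f, g):
    """Discrete convolution: (f * g)[n] = sum_m f[m] * g[n - m]."""
    out = [0.0] * (len(f) + len(g) - 1)
    for m, fm in enumerate(f):
        for k, gk in enumerate(g):
            out[m + k] += fm * gk
    return out


g = [1.0, 0.5, 0.25]            # made-up impulse response of the "box"
delta = [1.0]                   # discrete stand-in for the delta function
f = [1.0, 2.0, 0.0, -1.0]       # arbitrary input signal

response_to_delta = convolve(delta, g)   # reproduces g itself
output = convolve(f, g)                  # what the box would produce for f
```

Convolving with the single-sample "delta" gives back `g`, and convolving any other input with `g` gives the output of the box for that input.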

3. Filters.

You can do a lot of interesting things with the impulse response and convolution. For example, if the signal is audio, you can build reverb, echo, chorus, flanger and much, much more; you can differentiate and integrate... In general, you can create anything. For us, the most important thing right now is that filters, too, are easily obtained with the help of convolution.

A digital filter is, in essence, the convolution of the input signal with the impulse response corresponding to the desired filter.

But, of course, the impulse response has to be obtained somehow. We have already figured out above how to measure it, but for this task that makes little sense: if we have already built the filter, why measure anything else when we can use it as is. Besides, the main value of digital filters is that they can have characteristics that are unattainable (or very hard to attain) in reality, for example a linear phase. So there is nothing to measure at all; you just have to calculate.

4. Obtaining the impulse response.

At this point, in most publications on the topic, the authors begin to dump mountains of Z-transforms and fractions of polynomials on the reader, completely confusing him. I won't do that; I'll just briefly explain what all this is for and why, in practice, the progressive public doesn't really need it.

Suppose we have decided what we want from the filter and have written an equation that describes it. To find the impulse response, we can then substitute the delta function into that equation and obtain what we need. The only problem is how to do that, because in the time domain the delta function is defined piecewise, and on top of that there are all sorts of infinities. So at this stage everything turns out to be terribly difficult.

This is where people usually remember that there is such a thing as the Laplace transform. In itself it is no picnic. The only reason it is tolerated in radio engineering is that, in the domain this transform takes us to, some things really do become simpler. In particular, the very delta function that gave us so much trouble in the time domain is expressed very easily: there it is simply one!

The Z-transform (aka Laurent transform) is the version of the Laplace transform for discrete systems.

That is, by applying the Laplace transform (or the Z-transform, as appropriate) to the function describing the desired filter, substituting one into the result and transforming back, we get the impulse response. Sounds easy, and anyone is welcome to try. I won't risk it, because, as already mentioned, the Laplace transform is a harsh thing, especially the inverse one. Let's keep it as a last resort and look for simpler ways of getting what we want. There are several.

First, we can recall another amazing fact of nature: the frequency response and the impulse response are related by the good old Fourier transform. This means that we can draw any frequency response we like, take its inverse Fourier transform (continuous or discrete) and get the impulse response of a system that implements it. It's simply amazing!

This, however, does not come without problems. First, the impulse response we obtain is likely to be infinite (I won't go into why; that's how the world works), so we will have to cut it off at some point of our choosing (by setting it to zero beyond that point). But this does not come for free: as a consequence, as one would expect, the frequency response of the calculated filter is distorted. It becomes wavy, and the cutoff becomes less sharp.

To minimize these effects, various smoothing window functions are applied to the truncated impulse response. As a result, the frequency response usually becomes even less sharp, but the unpleasant oscillations (especially in the passband) disappear.

After such processing we have a working impulse response and can build a digital filter.

The second calculation method is even simpler: the impulse responses of the most popular filters were expressed in analytical form for us long ago. All that remains is to substitute your values and apply a window function of your choice to the result. So you don't even have to compute any transforms.
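A minimal sketch of this second method, assuming the analytic impulse response of the ideal low-pass filter and a Hamming window (both are choices made for the example, as are the function name and the parameter values):

```python
import math


def windowed_sinc_lowpass(num_taps, cutoff):
    """Low-pass FIR taps: truncated ideal response times a Hamming window.

    num_taps : odd filter length (assumed odd for a well-defined center tap)
    cutoff   : cutoff as a fraction of the sampling rate, 0 < cutoff < 0.5
    """
    center = (num_taps - 1) // 2
    taps = []
    for n in range(num_taps):
        k = n - center
        # analytic impulse response of the ideal low-pass filter
        if k == 0:
            ideal = 2 * cutoff
        else:
            ideal = math.sin(2 * math.pi * cutoff * k) / (math.pi * k)
        # Hamming window tapers the cut-off tails
        window = 0.54 - 0.46 * math.cos(2 * math.pi * n / (num_taps - 1))
        taps.append(ideal * window)
    total = sum(taps)
    return [t / total for t in taps]      # normalize the DC gain to 1


taps = windowed_sinc_lowpass(31, 0.1)
```

The resulting list `taps` is the working impulse response: convolving a signal with it performs the filtering.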

And, of course, if the goal is to emulate the behavior of a particular circuit, you can obtain its impulse response in a simulator:

Here I applied a 100500-volt (yes, 100.5 kV) pulse 1 µs long to the input of an RC circuit and obtained its impulse response. Clearly this cannot be done in reality, but in the simulator this method, as you can see, works great.

5. Notes.

What was said above about truncating the impulse response applies, of course, to filters with a finite impulse response (FIR filters). They have a bunch of valuable properties, including a linear phase (under certain conditions on how the impulse response is constructed), which means the signal shape is not distorted by phase shifts during filtering, as well as absolute stability. There are also filters with an infinite impulse response (IIR filters). They are less demanding computationally, but they no longer have the advantages listed above.

In the next article, I hope to analyze a simple example of the practical implementation of a digital filter.

Lecture #10

"Finite Impulse Response Digital Filters"

The transfer function of a physically realizable digital filter with a finite impulse response (FIR filter) can be represented as

$$H(z) = \sum_{n=0}^{N-1} h(n)\, z^{-n} \qquad (10.1).$$

Substituting $z = e^{j\omega T}$ into expression (10.1), we obtain the frequency response of the FIR filter in the form

$$H(e^{j\omega T}) = \sum_{n=0}^{N-1} h(n)\, e^{-j\omega nT} = M(\omega)\, e^{j\theta(\omega)} \qquad (10.2),$$

where $M(\omega) = |H(e^{j\omega T})|$ is the amplitude-frequency characteristic (AFC) of the filter,

$\theta(\omega) = \arg H(e^{j\omega T})$ is the phase-frequency characteristic (PFC) of the filter.

The phase delay of the filter is defined as

$$\tau_p = -\frac{\theta(\omega)}{\omega} \qquad (10.3).$$

The group delay of the filter is defined as

$$\tau_g = -\frac{d\theta(\omega)}{d\omega} \qquad (10.4).$$

A distinctive feature of FIR filters is that they can provide constant phase and group delays, i.e. a linear phase response

$$\theta(\omega) = -\alpha\omega \qquad (10.5),$$

where α is a constant. Under this condition the signal passing through the filter does not change its shape.

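To derive the conditions that provide a linear phase response, we write the frequency response of the FIR filter taking (10.5) into account:

$$\sum_{n=0}^{N-1} h(n)\, e^{-j\omega nT} = M(\omega)\, e^{-j\alpha\omega} \qquad (10.6).$$

Equating the real and imaginary parts of this equality, we obtain

$$M(\omega)\cos(\alpha\omega) = \sum_{n=0}^{N-1} h(n)\cos(\omega nT), \qquad M(\omega)\sin(\alpha\omega) = \sum_{n=0}^{N-1} h(n)\sin(\omega nT) \qquad (10.7).$$

Dividing the second equation by the first, we get

$$\tan(\alpha\omega) = \frac{\sum_{n=0}^{N-1} h(n)\sin(\omega nT)}{\sum_{n=0}^{N-1} h(n)\cos(\omega nT)} \qquad (10.8).$$

Finally, we can write

$$\tan(\alpha\omega) = \frac{\sum_{n=1}^{N-1} h(n)\sin(\omega nT)}{h(0) + \sum_{n=1}^{N-1} h(n)\cos(\omega nT)} \qquad (10.9).$$

This equation has two solutions. The first, for α = 0, corresponds to the equation

$$\sum_{n=0}^{N-1} h(n)\sin(\omega nT) = 0 \qquad (10.10).$$

This equation has a unique solution corresponding to an arbitrary h(0) (since sin(0) = 0) and h(n) = 0 for n > 0. This solution corresponds to a filter whose impulse response has a single non-zero sample at the initial time. Such a filter is of no practical interest.

Let us find the other solution, for α ≠ 0. Cross-multiplying the numerators and denominators in (10.8), we obtain

$$\sum_{n=0}^{N-1} h(n)\cos(\omega nT)\sin(\alpha\omega) - \sum_{n=0}^{N-1} h(n)\sin(\omega nT)\cos(\alpha\omega) = 0 \qquad (10.11).$$

Hence we have

$$\sum_{n=0}^{N-1} h(n)\sin\big[(\alpha - nT)\,\omega\big] = 0 \qquad (10.12).$$

Since this equation has the form of a Fourier series, its solution, if it exists, is unique.

It is easy to see that the solution of this equation must satisfy the conditions

$$\alpha = \frac{(N-1)T}{2} \qquad (10.13),$$

$$h(n) = h(N-1-n), \quad 0 \le n \le N-1 \qquad (10.14).$$

It follows from condition (10.13) that for each filter order N there is only one phase delay α at which strict linearity of the PFC can be achieved. From condition (10.14) it follows that the impulse response of the filter must be symmetric about the point n = (N-1)/2 for odd N, and about the midpoint of the interval between n = N/2 - 1 and n = N/2 for even N (Fig. 10.1).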
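The symmetry condition h(n) = h(N-1-n) can be checked numerically. The sketch below assumes a normalized sampling interval T = 1, so the expected delay is (N-1)/2 samples; the tap values and helper name are arbitrary. If the phase is exactly linear, multiplying the frequency response by $e^{j\alpha\omega}$ should leave a purely real function.

```python
import cmath
import math


def response(h, w):
    """H(e^{jw}) = sum_n h[n] e^{-jwn} for normalized frequency w (rad/sample)."""
    return sum(hn * cmath.exp(-1j * w * n) for n, hn in enumerate(h))


h = [1.0, -2.0, 5.0, -2.0, 1.0]      # arbitrary taps with h[n] == h[N-1-n]
N = len(h)
alpha = (N - 1) / 2                  # expected delay in samples (T = 1 assumed)

# Removing the factor e^{-j*alpha*w} should leave a purely real function
residual_imag = [
    abs((response(h, w) * cmath.exp(1j * alpha * w)).imag)
    for w in [0.1 * k for k in range(1, 31)]
]
```

Breaking the symmetry (for example, changing the last tap) makes the residual imaginary part clearly non-zero.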

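The frequency response of such a filter (for odd N) can be written as

$$H(e^{j\omega T}) = \sum_{n=0}^{(N-3)/2} h(n)\, e^{-j\omega nT} + h\!\left(\tfrac{N-1}{2}\right) e^{-j\omega (N-1)T/2} + \sum_{n=(N+1)/2}^{N-1} h(n)\, e^{-j\omega nT} \qquad (10.15).$$

Making the substitution m = N-1-n in the second sum, we get

$$H(e^{j\omega T}) = \sum_{n=0}^{(N-3)/2} h(n)\, e^{-j\omega nT} + h\!\left(\tfrac{N-1}{2}\right) e^{-j\omega (N-1)T/2} + \sum_{m=0}^{(N-3)/2} h(N-1-m)\, e^{-j\omega (N-1-m)T} \qquad (10.16).$$

Since h(n) = h(N-1-n), the two sums can be combined:

$$H(e^{j\omega T}) = e^{-j\omega (N-1)T/2} \left\{ h\!\left(\tfrac{N-1}{2}\right) + \sum_{n=0}^{(N-3)/2} 2h(n) \cos\!\left[\omega\left(\tfrac{N-1}{2} - n\right)T\right] \right\} \qquad (10.17).$$

Substituting k = (N-1)/2 - n, we get

$$H(e^{j\omega T}) = e^{-j\omega (N-1)T/2} \left\{ h\!\left(\tfrac{N-1}{2}\right) + \sum_{k=1}^{(N-1)/2} 2h\!\left(\tfrac{N-1}{2} - k\right) \cos(\omega kT) \right\} \qquad (10.18).$$

If we denote

$$a_0 = h\!\left(\tfrac{N-1}{2}\right), \qquad a_k = 2h\!\left(\tfrac{N-1}{2} - k\right), \quad k = 1, \dots, \tfrac{N-1}{2} \qquad (10.19),$$

then we can finally write

$$H(e^{j\omega T}) = e^{-j\omega (N-1)T/2} \sum_{k=0}^{(N-1)/2} a_k \cos(\omega kT) \qquad (10.20).$$

Thus, for a filter with a linear phase response, we have

$$M(\omega) = \left| \sum_{k=0}^{(N-1)/2} a_k \cos(\omega kT) \right|, \qquad \theta(\omega) = -\frac{\omega (N-1)T}{2} \qquad (10.21)$$

(up to jumps of π at frequencies where the sum changes sign).

For even N we similarly have

$$H(e^{j\omega T}) = \sum_{n=0}^{N/2-1} h(n)\, e^{-j\omega nT} + \sum_{n=N/2}^{N-1} h(n)\, e^{-j\omega nT} \qquad (10.22).$$

Making the substitution m = N-1-n in the second sum and using h(n) = h(N-1-n), we get

$$H(e^{j\omega T}) = e^{-j\omega (N-1)T/2} \sum_{n=0}^{N/2-1} 2h(n) \cos\!\left[\omega\left(\tfrac{N-1}{2} - n\right)T\right] \qquad (10.23).$$

Making the substitution k = N/2 - n, we get

$$H(e^{j\omega T}) = e^{-j\omega (N-1)T/2} \sum_{k=1}^{N/2} 2h\!\left(\tfrac{N}{2} - k\right) \cos\!\left[\omega\left(k - \tfrac{1}{2}\right)T\right] \qquad (10.24).$$

Denoting

$$b_k = 2h\!\left(\tfrac{N}{2} - k\right), \quad k = 1, \dots, \tfrac{N}{2} \qquad (10.25),$$

we finally have

$$H(e^{j\omega T}) = e^{-j\omega (N-1)T/2} \sum_{k=1}^{N/2} b_k \cos\!\left[\omega\left(k - \tfrac{1}{2}\right)T\right] \qquad (10.26).$$

Thus, for an FIR filter with a linear phase response and even order N we can write

$$M(\omega) = \left| \sum_{k=1}^{N/2} b_k \cos\!\left[\omega\left(k - \tfrac{1}{2}\right)T\right] \right|, \qquad \theta(\omega) = -\frac{\omega (N-1)T}{2} \qquad (10.27).$$

In what follows, for simplicity, we will consider only filters with an odd order.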

When synthesizing the filter transfer function, the initial parameters are, as a rule, the requirements for the frequency response. There are many techniques for synthesizing FIR filters. Let's consider some of them.

Since the frequency response of any digital filter is a periodic function of frequency, it can be represented as a Fourier series

$$H(e^{j\omega T}) = \sum_{n=-\infty}^{\infty} h(n)\, e^{-j\omega nT} \qquad (10.28),$$

where the coefficients of the Fourier series are

$$h(n) = \frac{1}{\omega_s} \int_{-\omega_s/2}^{\omega_s/2} H(e^{j\omega T})\, e^{j\omega nT}\, d\omega \qquad (10.29).$$

It can be seen that the coefficients of the Fourier series h(n) coincide with the impulse response coefficients of the filter. Therefore, if an analytical description of the required frequency response is known, it is easy to determine the impulse response coefficients and, from them, the transfer function of the filter. However, this is not feasible in practice, since the impulse response of such a filter has infinite length. In addition, such a filter is not physically realizable, since the impulse response begins at $-\infty$, and no finite delay will make this filter physically realizable.

One possible way to obtain an FIR filter that approximates a given frequency response is to truncate the infinite Fourier series and the impulse response of the filter, assuming that h(n) = 0 for $|n| > (N-1)/2$. Then

$$H(z) = h(0) + \sum_{n=1}^{(N-1)/2} \left[ h(-n)\, z^{n} + h(n)\, z^{-n} \right] \qquad (10.30).$$

Physical realizability of the transfer function H(z) can be achieved by multiplying H(z) by $z^{-(N-1)/2}$:

$$H'(z) = z^{-(N-1)/2} H(z) = \sum_{n=0}^{N-1} h'(n)\, z^{-n} \qquad (10.31),$$

where

$$h'(n) = h\!\left(n - \tfrac{N-1}{2}\right) \qquad (10.32).$$

With this modification of the transfer function, the amplitude characteristic of the filter does not change, and the group delay increases by a constant value.

As an example, we calculate a low-pass FIR filter with a frequency response of the form

$$H(e^{j\omega T}) = \begin{cases} 1, & |\omega| \le \omega_c \\ 0, & \omega_c < |\omega| \le \omega_s/2 \end{cases} \qquad (10.33).$$

In accordance with (10.29), the filter impulse response coefficients are described by the expression

$$h(n) = \frac{1}{\omega_s} \int_{-\omega_c}^{\omega_c} e^{j\omega nT}\, d\omega = \frac{\sin(\omega_c nT)}{\pi n} \qquad (10.34).$$

Now from (10.31) we can get the expression for the transfer function

$$H'(z) = z^{-(N-1)/2} \sum_{n=0}^{(N-1)/2} \frac{a_n}{2} \left( z^{n} + z^{-n} \right) \qquad (10.35),$$

where

$$a_0 = h(0), \qquad a_n = 2h(n) \qquad (10.36).$$

The amplitude characteristics of the calculated filter for various N are presented in Figure 10.2.

Fig. 10.2

Ripple in the passband and stopband occurs because of the slow convergence of the Fourier series, which in turn is due to the discontinuity of the function at the cutoff frequency of the passband. These ripples are known as Gibbs ripples.

From Fig. 10.2 it can be seen that as N increases, the frequency of the ripples increases and their amplitude decreases both at lower and at upper frequencies. However, the amplitude of the last ripple in the passband and of the first ripple in the stopband remain practically unchanged. In practice such effects are often undesirable, which requires finding ways to reduce Gibbs ripples.
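The persistence of the ripple next to the cutoff can be observed numerically. The sketch below assumes a normalized sampling interval (T = 1), a cutoff of π/2 rad/sample and filter lengths 21, 41 and 81; these values and the helper names are chosen only for illustration.

```python
import math


def truncated_lowpass(N, wc):
    """Ideal low-pass impulse response sin(wc*n)/(pi*n), kept for |n| <= (N-1)/2."""
    half = (N - 1) // 2
    return [wc / math.pi if n == 0 else math.sin(wc * n) / (math.pi * n)
            for n in range(-half, half + 1)]


def peak_gain(h, points=4000):
    """Largest value of the zero-phase response on 0 <= w <= pi."""
    half = (len(h) - 1) // 2
    best = 0.0
    for i in range(points + 1):
        w = math.pi * i / points
        value = sum(hn * math.cos(w * (k - half)) for k, hn in enumerate(h))
        best = max(best, value)
    return best


wc = math.pi / 2                              # assumed cutoff, rad/sample
overshoot = {N: peak_gain(truncated_lowpass(N, wc)) - 1.0 for N in (21, 41, 81)}
```

For all three lengths the largest passband overshoot stays at roughly nine percent of the jump, even though the ripples become narrower as N grows.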

The truncated impulse response $h_w(n)$ can be represented as the product of the required infinite impulse response h(n) and some window function w(n) of length N (Fig. 10.3):

$$h_w(n) = h(n)\, w(n) \qquad (10.37).$$

In the case considered above, a simple truncation of the Fourier series, we use the rectangular window

$$w_R(n) = \begin{cases} 1, & |n| \le (N-1)/2 \\ 0, & \text{otherwise} \end{cases} \qquad (10.38).$$

In this case the frequency response of the filter can be represented as a complex convolution

$$H_w(e^{j\omega T}) = \frac{1}{\omega_s} \int_{0}^{\omega_s} H(e^{j\varpi T})\, W(e^{j(\omega - \varpi)T})\, d\varpi \qquad (10.39).$$

This means that $H_w(e^{j\omega T})$ will be a "blurred" version of the required characteristic $H(e^{j\omega T})$.

The problem reduces to finding window functions that make it possible to reduce Gibbs ripples at the same filter selectivity. To do this, we must first study the properties of the window function using the rectangular window as an example.

The spectrum of the rectangular window function can be written as

$$W_R(e^{j\omega T}) = \sum_{n=-(N-1)/2}^{(N-1)/2} e^{-j\omega nT} = \frac{\sin(\omega NT/2)}{\sin(\omega T/2)} \qquad (10.40).$$

The spectrum of the rectangular window function is shown in Fig. 10.4.

Fig. 10.4

Since $W_R(e^{j\omega T}) = 0$ at $\omega = \pm\omega_s/N$, the width of the main lobe of the spectrum is equal to $2\omega_s/N$.

The presence of side lobes in the spectrum of the window function leads to an increase in the Gibbs ripple in the frequency response of the filter. To obtain small ripple in the passband and high attenuation in the stopband, the area bounded by the side lobes must be a small fraction of the area bounded by the main lobe.

In turn, the width of the main lobe determines the width of the transition zone of the resulting filter. For high filter selectivity, the width of the main lobe should be as small as possible. As can be seen from the above, the width of the main lobe decreases with increasing filter order.

Thus, the properties of suitable window functions can be formulated as follows:

- the window function must be limited in time;

- the spectrum of the window function should best approximate the frequency-limited function, i.e. have a minimum of energy outside the main lobe;

- the width of the main lobe of the spectrum of the window function should be as small as possible.

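The most commonly used window functions are:

1. Rectangular window. Considered above.

2. Hamming window.

$$w_H(n) = \begin{cases} \alpha + (1 - \alpha)\cos\dfrac{2\pi n}{N-1}, & |n| \le (N-1)/2 \\ 0, & \text{otherwise} \end{cases} \qquad (10.41),$$

where α = 0.54.

When α = 0.5 this window is called the Hann window (hanning).

3. Blackman window.

$$w_B(n) = \begin{cases} 0.42 + 0.5\cos\dfrac{2\pi n}{N-1} + 0.08\cos\dfrac{4\pi n}{N-1}, & |n| \le (N-1)/2 \\ 0, & \text{otherwise} \end{cases} \qquad (10.42).$$

4. Bartlett window.

$$w(n) = \begin{cases} 1 - \dfrac{2|n|}{N-1}, & |n| \le (N-1)/2 \\ 0, & \text{otherwise} \end{cases} \qquad (10.43).$$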
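The window functions listed above can be written down directly. The sketch assumes the usual textbook forms of these windows, centered at n = 0 and defined for |n| ≤ (N-1)/2; the function names are illustrative.

```python
import math


def hamming_family(n, N, alpha=0.54):
    """alpha + (1 - alpha) * cos(2*pi*n/(N-1)); alpha = 0.5 gives the Hann window."""
    return alpha + (1 - alpha) * math.cos(2 * math.pi * n / (N - 1))


def blackman(n, N):
    """0.42 + 0.5*cos(2*pi*n/(N-1)) + 0.08*cos(4*pi*n/(N-1))."""
    return (0.42 + 0.5 * math.cos(2 * math.pi * n / (N - 1))
            + 0.08 * math.cos(4 * math.pi * n / (N - 1)))


def bartlett(n, N):
    """Triangular window 1 - 2|n|/(N-1)."""
    return 1 - 2 * abs(n) / (N - 1)


N = 11
indices = range(-(N - 1) // 2, (N - 1) // 2 + 1)     # n = -5 ... 5
hann = [hamming_family(n, N, alpha=0.5) for n in indices]
hamming = [hamming_family(n, N) for n in indices]
```

The Hann, Blackman and Bartlett windows fall to zero at the edges, while the Hamming window stops at 0.08.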

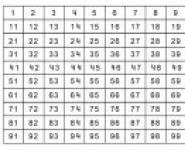

The characteristics of filters built with these window functions are summarized in Table 10.1.

| Window | Main lobe width | Ripple factor, %, N=11 | Ripple factor, %, N=21 | Ripple factor, %, N=31 |
|---|---|---|---|---|
| Rectangular | $2\omega_s/N$ | 22.34 | 21.89 | 21.80 |
| Hanning | $4\omega_s/N$ | 2.62 | 2.67 | 2.67 |
| Hamming | $4\omega_s/N$ | 1.47 | 0.93 | 0.82 |
| Blackman | $6\omega_s/N$ | 0.08 | 0.12 | 0.12 |

The ripple factor is defined as the ratio of the maximum side-lobe amplitude to the main-lobe amplitude in the spectrum of the window function.
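This definition can be tried out on the rectangular window, whose spectrum has the closed form sin(ωN/2)/sin(ω/2) when the sampling interval is normalized to T = 1 (an assumption of this sketch, as are the function names and the grid size). The main-lobe peak equals N, and the side lobes start at the first null, ω = 2π/N.

```python
import math


def rect_spectrum(w, N):
    """|sin(w*N/2) / sin(w/2)| for a length-N rectangular window (T = 1 assumed)."""
    return abs(math.sin(w * N / 2) / math.sin(w / 2))


def ripple_ratio_percent(N, points=20000):
    """Largest side-lobe amplitude relative to the main-lobe peak N, in percent."""
    first_null = 2 * math.pi / N          # edge of the main lobe
    peak = 0.0
    for i in range(points + 1):
        w = first_null + (math.pi - first_null) * i / points
        peak = max(peak, rect_spectrum(w, N))
    return 100 * peak / N


ratios = {N: ripple_ratio_percent(N) for N in (11, 21, 31)}
```

The values in `ratios` can be compared with the rectangular-window row of Table 10.1.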

The data in Table 10.2 can be used to select the required filter order and the most appropriate window function when designing real filters.

| Window | Transition band width | Passband ripple (dB) | Stopband attenuation (dB) |
|---|---|---|---|
| Rectangular | | | |
| Hanning | | | |
| Hamming | | | |
| Blackman | | | |

As can be seen from Table 10.1, there is a definite relationship between the ripple factor and the width of the main lobe in the spectrum of the window function. The smaller the ripple coefficient, the greater the width of the main lobe, and hence the transition zone in the filter's frequency response. To ensure low ripple in the passband, it is necessary to choose a window with a suitable ripple factor, and to provide the required width of the transition zone with an increased filter order N .

This problem can be solved using the window proposed by Kaiser. The Kaiser window function has the form

$$w_K(n) = \begin{cases} \dfrac{I_0(\beta)}{I_0(\alpha)}, & |n| \le (N-1)/2 \\ 0, & \text{otherwise} \end{cases} \qquad (10.44),$$

where α is an independent parameter, $\beta = \alpha\sqrt{1 - \left(\dfrac{2n}{N-1}\right)^2}$, and $I_0$ is the zero-order modified Bessel function of the first kind, defined by the expression

$$I_0(x) = 1 + \sum_{k=1}^{\infty} \left[ \frac{1}{k!} \left( \frac{x}{2} \right)^k \right]^2 \qquad (10.45).$$

An attractive property of the Kaiser window is that the ripple factor can be varied smoothly from small to large values by changing only one parameter, α. As with other window functions, the width of the main lobe can be controlled by the filter order N.

The main parameters specified when designing a real filter are:

- passband edge frequency $\omega_p$;

- stopband edge frequency $\omega_a$;

- maximum allowable ripple in the passband $A_p$;

- minimum attenuation in the stopband $A_a$;

- sampling frequency $\omega_s$.

These parameters are illustrated in Figure 10.5. The maximum ripple in the passband is defined as

$$A_p = 20\log_{10}\frac{1+\delta}{1-\delta} \qquad (10.46),$$

and the minimum attenuation in the stopband as

$$A_a = -20\log_{10}\delta \qquad (10.47),$$

where δ is the ripple amplitude of the frequency response.

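A relatively simple procedure for designing a filter with a Kaiser window includes the following steps:

1. The impulse response of the filter h(n) is determined under the assumption that the frequency response is ideal:

$$H(e^{j\omega T}) = \begin{cases} 1, & |\omega| \le \omega_c \\ 0, & \omega_c < |\omega| \le \omega_s/2 \end{cases} \qquad (10.48),$$

where $\omega_c = (\omega_p + \omega_a)/2$ (10.49).

2. The parameter δ is chosen as

$$\delta = \min(\delta_1, \delta_2) \qquad (10.50),$$

where $\delta_1 = 10^{-0.05 A_a}$, $\delta_2 = \dfrac{10^{0.05 A_p} - 1}{10^{0.05 A_p} + 1}$ (10.51).

3. The true values of $A_a$ and $A_p$ are calculated from formulas (10.46), (10.47).

4. The parameter α is selected as

$$\alpha = \begin{cases} 0, & A_a \le 21 \\ 0.5842\,(A_a - 21)^{0.4} + 0.07886\,(A_a - 21), & 21 < A_a \le 50 \\ 0.1102\,(A_a - 8.7), & A_a > 50 \end{cases} \qquad (10.52).$$

5. The parameter D is selected as

$$D = \begin{cases} 0.9222, & A_a \le 21 \\ \dfrac{A_a - 7.95}{14.36}, & A_a > 21 \end{cases} \qquad (10.53).$$

6. The smallest odd value of the filter order N is selected from the condition

$$N \ge \frac{\omega_s D}{\omega_a - \omega_p} + 1 \qquad (10.54).$$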
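The steps of this procedure can be sketched as follows. The sketch assumes the standard empirical formulas for the Kaiser window design (they are taken from the general literature, not from the expressions lost in this text), and the example requirements are made up; all function names are illustrative.

```python
import math


def kaiser_design(wp, wa, ws, Ap, Aa):
    """Kaiser-window design parameters from band edges (rad/s) and ripples (dB)."""
    # ripple delta that satisfies both the passband and stopband requirements
    d1 = 10 ** (-0.05 * Aa)
    d2 = (10 ** (0.05 * Ap) - 1) / (10 ** (0.05 * Ap) + 1)
    delta = min(d1, d2)
    Aa_actual = -20 * math.log10(delta)

    if Aa_actual <= 21:
        alpha, D = 0.0, 0.9222
    else:
        D = (Aa_actual - 7.95) / 14.36
        if Aa_actual <= 50:
            alpha = 0.5842 * (Aa_actual - 21) ** 0.4 + 0.07886 * (Aa_actual - 21)
        else:
            alpha = 0.1102 * (Aa_actual - 8.7)

    n_min = ws * D / (wa - wp) + 1
    N = math.ceil(n_min)
    if N % 2 == 0:                 # smallest odd length not below n_min
        N += 1
    return delta, alpha, D, N


def bessel_i0(x, terms=25):
    """Series 1 + sum_k [(x/2)^k / k!]^2 for the modified Bessel function I0."""
    return 1.0 + sum(((x / 2) ** k / math.factorial(k)) ** 2
                     for k in range(1, terms + 1))


def kaiser_window(N, alpha):
    """Kaiser window samples for n = -(N-1)/2 ... (N-1)/2 (N odd)."""
    half = (N - 1) // 2
    return [bessel_i0(alpha * math.sqrt(1 - (n / half) ** 2)) / bessel_i0(alpha)
            for n in range(-half, half + 1)]


# Made-up requirements: wp = 1.5, wa = 2.5, ws = 10 rad/s, Ap = 0.1 dB, Aa = 40 dB
delta, alpha, D, N = kaiser_design(1.5, 2.5, 10.0, 0.1, 40.0)
window = kaiser_window(N, alpha)
```

The window samples are then multiplied by the ideal impulse response from step 1 to obtain the filter coefficients.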

(10.57)

it follows that

Since the samples of the filter's impulse response are the coefficients of its transfer function, condition (10.59) means that the codes of all filter coefficients contain only a fractional part and a sign bit and have no integer part.

The number of bits in the fractional part of the filter coefficients is chosen so that the transfer function of the filter with quantized coefficients meets the specified requirements on its closeness to the reference transfer function with exact coefficient values.

The absolute values of the filter input samples are usually normalized so that

If the analysis is carried out for an FIR filter with a linear phase response, the algorithm for calculating its output signal can be as follows

where the filter coefficients are rounded to $s_k$ bits.

This algorithm corresponds to the block diagram of the filter shown in Figure 10.5.

There are two ways to implement this algorithm. In the first, all multiplications are performed exactly and the products are not rounded. The word length of the products is then equal to $s_{in} + s_k$, where $s_{in}$ is the word length of the input signal and $s_k$ is the word length of the filter coefficients. In this case the block diagram shown in Fig. 10.5 corresponds exactly to the real filter.

In the second way of implementing algorithm (10.61), each result of a multiplication is rounded, i.e. the products are calculated with some error. In this case algorithm (10.61) must be changed to take into account the error introduced by rounding the products

If the sample values of the filter output signal are calculated by the first method (with exact values of the products), then the variance of the output noise is defined as

$$\sigma_{out}^2 = \sigma_{in}^2 \sum_{n=0}^{N-1} h^2(n) \qquad (10.66),$$

i.e. it depends on the variance of the rounding noise of the input signal and on the values of the filter coefficients. From this the required number of bits of the input signal can be found as

(10.67).

From the known values of $s_{in}$ and $s_k$, one can determine the number of bits required for the fractional part of the output signal code as

If the values of the output samples are calculated by the second method, when each product is rounded to $s_d$ bits, then the variance of the rounding noise created by each of the multipliers can be expressed in terms of the word length of the product as

$DR_{in}$ and the signal-to-noise ratio at the output of the filter $SNR_{out}$. The dynamic range of the input signal in decibels is defined as

$$DR_{in} = 20\log_{10}\frac{A_{max}}{A_{min}} \qquad (10.74),$$

where $A_{max}$ and $A_{min}$ are the maximum and minimum amplitudes of the filter input signal.

The signal-to-noise ratio at the output of the filter, expressed in decibels, is defined as

$$SNR_{out} = 10\log_{10}\frac{P_s}{P_n} \qquad (10.75),$$

where

$$P_s = \frac{A_{min}^2}{2} \qquad (10.76)$$

is the mean power of the sinusoidal output signal of the filter with amplitude $A_{min}$, and

$$P_n = \sigma_{out}^2 \qquad (10.77)$$

is the noise power at the output of the filter. From (10.75) and (10.76) with $A_{max} = 1$ we obtain an expression for the variance of the output noise of the filter

$$\sigma_{out}^2 = \frac{1}{2} \cdot 10^{-0.1\,(DR_{in} + SNR_{out})} \qquad (10.78).$$

This value of the filter output noise variance can be used to calculate the word lengths of the filter input and output signals.
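As a small numeric illustration, the output noise variance can be computed from the dynamic range and the required signal-to-noise ratio. The sketch assumes $A_{max} = 1$, a sinusoid of amplitude $A_{min}$ with mean power $A_{min}^2/2$ reaching the output unchanged, and the usual decibel definitions; the function name and the numbers are hypothetical.

```python
def output_noise_variance(dr_in_db, snr_out_db):
    """sigma^2 = 0.5 * 10^(-(DR_in + SNR_out)/10), assuming A_max = 1."""
    a_min = 10 ** (-dr_in_db / 20)              # smallest input amplitude
    signal_power = a_min ** 2 / 2               # mean power of a sinusoid
    return signal_power / 10 ** (snr_out_db / 10)


# Example: 40 dB input dynamic range, 30 dB required output SNR
variance = output_noise_variance(40.0, 30.0)
```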

NOVOSIBIRSK STATE TECHNICAL UNIVERSITY

FACULTY OF AUTOMATICS AND COMPUTING ENGINEERING

Department of Data Acquisition and Processing Systems

Discipline "Signal Theory and Processing"

LAB No. 10

DIGITAL FILTERS WITH A FINITE IMPULSE RESPONSE

Group: AT-33

Option: 1

Student: Shadrina A.V.

Teacher: Assoc. Prof. Shchetinin Yu.I.

Goal of the work: to study methods of analysis and synthesis of filters with a finite impulse response using smoothing window functions.

Work performed:

1. Plots of the impulse response of an FIR low-pass filter with a rectangular window and cutoff frequency $\omega_c$ for filter lengths N = 15 and N = 50.

The impulse response of an ideal discrete low-pass filter has infinite length and is non-zero for negative values of n:

$$h_d(n) = \frac{\sin(\omega_c n)}{\pi n}, \quad -\infty < n < \infty.$$

To obtain a physically realizable filter, the impulse response should be limited to a finite number of samples N, and the truncated response should then be shifted to the right by (N-1)/2.

The value N is the length (size) of the filter, and N-1 is the filter order.

Matlab Script (labrab101.m)

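N = input("Enter filter length N = ");

n = 0:N-1;  % sample indices; wc (cutoff, rad/sample) must already be defined

h = sin(wc.*(n-(N-1)/2))./(pi.*(n-(N-1)/2));

h(n == (N-1)/2) = wc/pi;  % center tap for odd N (avoids 0/0)

stem(n, h)

xlabel("Sample number, n")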

>>subplot(2,1,1)

>>labrab101

Enter filter length N = 15

>> title("Impulse response of FIR filter for N=15")

>>subplot(2,1,2)

>>labrab101

Enter filter length N = 50

>> title("Impulse response of FIR filter for N=50")

Fig.1. Plots of the impulse response of an FIR low-pass filter with a rectangular window for filter lengths N = 15 and N = 50

Comment: If the frequency response of a digital filter is regarded as a Fourier series, $H(e^{j\omega}) = \sum_{n=-\infty}^{\infty} h(n)\, e^{-j\omega n}$, then the coefficients of this series are the values of the filter's impulse response. Here the Fourier series was truncated to N = 15 terms in the first case and to N = 50 in the second, and the truncated responses were then shifted to the right along the sample axis by (N-1)/2 to obtain a causal filter. At N = 15 the width of the main lobe is 2, and at N = 50 it is 1, i.e. as the filter length increases, the main lobe of the impulse response narrows. The level of the side lobes increased in absolute value as N grew. Thus, it can be concluded that when the ideal frequency response of the filter is approximated using a rectangular window, it is impossible to simultaneously narrow the main lobe (and thereby reduce the transition region) and reduce the levels of the side lobes (reduce ripple in the passband and stopband of the filter). The only controllable parameter of the rectangular window is its size, which can be used to influence the width of the main lobe; it has little effect on the side lobes, however.

2. Calculation of the DTFT of the impulse responses from item 1 using the function DTFT. Plots of their frequency responses on a linear scale and in decibels for 512 frequency samples. Passband, transition band and stopband of the filter. The effect of the filter order on the width of the transition band and on the level of frequency response ripple in the passband and stopband.

Matlab Function (DTFT.m)

function [X, w] = DTFT(x, M)

N = max(M, length(x));

% Round the FFT size up to the next power of 2

N = 2^(ceil(log(N)/log(2)));

% Compute the FFT

X = fft(x, N);

% Frequency vector

w = 2*pi*((0:(N-1))/N);

w = w - 2*pi*(w>=pi);

% Shift FFT to interval from -pi to +pi

X = fftshift(X);

w = fftshift(w);

Matlab Script (labrab102.m)

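N1 = 15; n1 = 0:N1-1;  % lengths taken from the plot titles; wc must be defined beforehand

N2 = 50; n2 = 0:N2-1;

h1 = sin(wc.*(n1-(N1-1)/2))./(pi.*(n1-(N1-1)/2)); h1(n1 == (N1-1)/2) = wc/pi;  % center tap avoids 0/0

h2 = sin(wc.*(n2-(N2-1)/2))./(pi.*(n2-(N2-1)/2));

[H1, w] = DTFT(h1, 512);

[H2, w] = DTFT(h2, 512);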

plot(w./(2*pi),abs(H1)./max(abs(H1)),"r")

xlabel("f, Hz"), ylabel("|H1|/max(|H1|)"), grid

plot(w./(2*pi),abs(H2)./max(abs(H2)),"b")

xlabel("f, Hz"), ylabel("|H2|/max(|H2|)"), grid

plot(w./(2*pi),20*log10(abs(H1)),"r")

title("Frequency response of the FIR low-pass filter with a rectangular window for N = 15")

xlabel("f, Hz"), ylabel("20lg(|H1|), dB"), grid

plot(w./(2*pi),20*log10(abs(H2)),"b")

title("Frequency response of the FIR low-pass filter with a rectangular window for N = 50")

xlabel("f, Hz"), ylabel("20lg(|H2|), dB"), grid

Fig.2. Frequency response plots of an FIR low-pass filter with a rectangular window for filter lengths N = 15 and N = 50 on a linear scale

Fig.3. Frequency response plots of an FIR low-pass filter with a rectangular window for filter lengths N = 15 and N = 50 on a logarithmic scale

Comment:

Table 1. Passband, transition region, and stopband ranges for filter lengths N = 15 and N = 50

| Filter length | Passband, Hz | Transition region, Hz | Stopband, Hz |
|---|---|---|---|

48 Finite impulse response digital filters. Calculation of FIR filters.Finite impulse response filter (Non-recursive filter, FIR filter) or FIR filter (FIR short for finite impulse response - finite impulse response) - one of the types of linear digital filters, a characteristic feature of which is the limited time of its impulse response (from some point in time it becomes exactly equal to zero). Such a filter is also called non-recursive due to the lack of feedback. The denominator of the transfer function of such a filter is a certain constant. Difference equation describing the relationship between the input and output signals of the filter: where P- filter order, x(n) - input signal, y(n) is the output signal, and b i- filter coefficients. In other words, the value of any output sample is determined by the sum of the scaled values P previous counts. It can be put differently: the value of the filter output at any time is the value of the response to the instantaneous value of the input and the sum of all gradually decaying responses P previous signal samples that still affect the output (after P-counts, the impulse transition function becomes equal to zero, as already mentioned, so all terms after P th will also become zero). Let's write the previous equation in a more capacious form:

To find the filter kernel, set x(n) = δ(n), where δ(n) is the delta function (unit impulse). The impulse response of the FIR filter can then be written as:

$$h(n) = \sum_{i=0}^{P} b_i \, \delta(n-i)$$

The z-transform of the impulse response gives the transfer function of the FIR filter:

$$H(z) = \sum_{n=0}^{P} b_n z^{-n}$$

Properties

The FIR filter has a number of useful properties that sometimes make it preferable to an IIR filter. Here are some of them:

1. FIR filters are stable.

2. FIR filters do not require feedback when implemented.

3. The phase response of FIR filters can be made linear.

Direct form FIR filter

FIR filters can be implemented using three elements: a multiplier, an adder, and a delay block. The variant shown in the figure is a direct implementation of a type 1 FIR filter.

Implementation of a direct form FIR filter

Program example

The following is an example FIR filter program written in C:

/* 128-tap FIR filter */
extern const float h[128];        /* filter coefficients, defined elsewhere */

float fir_filter(float input)
{
    int i;
    static float sample[128];     /* delay line, sample[0] is the newest input */
    float acc = 0.0f;             /* accumulator */

    sample[0] = input;

    /* Multiply and accumulate */
    for (i = 0; i < 128; i++)
        acc += h[i] * sample[i];

    /* Shift the delayed signal by one sample */
    for (i = 127; i > 0; i--)
        sample[i] = sample[i - 1];

    return acc;                   /* output */
}

49. Data smoothing. Moving average.

50. Data smoothing. Smoothing with parabolas.

51. Data smoothing. Spencer smoothing.

52. Data smoothing. Median filtering.

Moving average, parabolic smoothing, Spencer smoothing, median filtering

When developing methods for determining the parameters of physical processes that change slowly in time, an important task is to eliminate the influence of noise or random interference superimposed on the processed signal at the output of the primary converter. Data smoothing can be applied to eliminate this effect.

One of the simplest smoothing methods is arithmetic averaging. With it, each k-th value of the discrete function (the processed data array) is computed as

$$\bar{y}_k = \frac{1}{m} \sum_{i=-(m-1)/2}^{(m-1)/2} y_{k+i},$$

where m is the number of points for arithmetic averaging (an odd integer) and y_k is the value of the function before processing.

There are other quite effective smoothing methods, for example smoothing by second-degree parabolas over five, seven, nine and eleven points, or by fourth-degree parabolas over seven, nine, eleven and thirteen points. In practical applications other methods also give good results, for example 15-point Spencer smoothing.

Substituting the complex exponential $y_k = e^{j\omega k T}$, where T is the sampling interval, into such an expression gives the transfer function of the corresponding transformation. For the arithmetic average:

$$H(\omega) = \frac{1}{m} \left( e^{-j\omega T (m-1)/2} + \dots + 1 + \dots + e^{j\omega T (m-1)/2} \right)$$

The expression in brackets is a geometric progression with common ratio $e^{j\omega T}$, so it can be written as:

$$H(\omega) = \frac{\sin(m \omega T / 2)}{m \sin(\omega T / 2)}$$

This formula is the transfer characteristic of a low-pass filter. It shows that the more terms are involved in the averaging, the stronger the suppression of high-frequency noise components in the signal (see Figure 6.1). However, the notion of frequency in the processing of time trends differs from that in signal processing: when studying time trends, what is of interest is not their frequency content but the type of change (increase, decrease, constancy, cyclicity, and so on).

So-called heuristic algorithms are also quite effective for data smoothing. One of them is median filtering. In a sliding time window of odd length m, the central element is replaced by the middle element of the sequence obtained by sorting, in ascending order, the elements of the data array that fall within the window. The advantage of median filtering is its ability to remove impulse noise whose duration does not exceed (m - 1)/2 samples, with virtually no distortion of smoothly varying signals. This noise suppression method has no rigorous mathematical justification, but the simplicity of the calculations and the quality of the results have led to its widespread use.

Figure 6.1. Transfer characteristics of the arithmetic averaging operation for m = 5, 7, 9, 11

Another interesting smoothing algorithm is median averaging. Its essence is as follows. In a sliding time window of odd length m, the elements of the data array are sorted in ascending order, and then the first and last k elements are removed from the ordered sequence (k < (m - 1)/2). The central element of the time window in the sequence being smoothed is replaced by the value computed as the arithmetic mean of the remaining m - 2k elements. This method suppresses impulse and radio-frequency interference and also achieves good signal smoothing.
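Median filtering and median averaging can be sketched with a single routine. It sorts each window, discards a number of the smallest and largest elements, and averages what is left. This is a minimal illustration with assumptions: the name `trimmed_mean_filter`, the `MAX_WINDOW` limit and the edge handling (edge samples copied unchanged) are hypothetical. It also assumes that the replaced value is the arithmetic mean of the elements left after trimming. With `trim` equal to (m - 1)/2 only the middle element survives, which is ordinary median filtering.

```c
#include <stddef.h>
#include <stdlib.h>
#include <string.h>

#define MAX_WINDOW 63   /* assumed upper bound on the window length */

/* Comparison callback for qsort: ascending order of doubles. */
static int cmp_asc(const void *a, const void *b)
{
    double da = *(const double *)a, db = *(const double *)b;
    return (da > db) - (da < db);
}

/* Hypothetical helper: for each full window of odd length m, sort the
   samples, drop the `trim` smallest and `trim` largest, and write the mean
   of the rest to the window centre. Returns 0 on success, -1 if the
   parameters are invalid. */
int trimmed_mean_filter(const double *x, double *y, size_t len,
                        size_t m, size_t trim)
{
    double win[MAX_WINDOW];
    size_t half;

    if (m % 2 == 0 || m > MAX_WINDOW || 2 * trim >= m)
        return -1;

    half = (m - 1) / 2;
    for (size_t k = 0; k < len; k++) {
        if (k < half || k + half >= len) {
            y[k] = x[k];          /* no full window: copy (assumption) */
            continue;
        }
        memcpy(win, &x[k - half], m * sizeof win[0]);
        qsort(win, m, sizeof win[0], cmp_asc);

        double acc = 0.0;
        for (size_t i = trim; i < m - trim; i++)
            acc += win[i];
        y[k] = acc / (double)(m - 2 * trim);
    }
    return 0;
}
```

With the input 1, 2, 3, 100, 5, 6, 7 and m = 3, trim = 1, the isolated spike of 100 is replaced by 5, its neighbours keep plausible values, and the smooth ramp is otherwise preserved.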

05.02.2021
