Matrices. Types of matrices

A matrix is a special object in mathematics. It is depicted as a rectangular or square table made up of a certain number of rows and columns. Mathematics distinguishes a wide variety of types of matrices, differing in size or content. The numbers of rows and columns determine the size of a matrix; for a square matrix, this common number is called its order. Matrices are used to write systems of linear equations compactly and to solve them conveniently. Such systems are solved by the methods of Carl Gauss and Gabriel Cramer, by the method of minors and algebraic complements, and by many other methods. The basic skill when working with matrices is reduction to triangular or stepped form. First, however, let's figure out what types of matrices mathematicians distinguish.

Null type

All entries of a matrix of this type are zeros. Its numbers of rows and columns can be arbitrary.

Square type

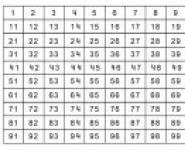

The number of columns and rows of this type of matrix is the same. In other words, it is a "square" table. The number of its columns (or rows) is called the order. Examples are matrices of the second order (2x2), fourth order (4x4), tenth order (10x10), seventeenth order (17x17) and so on.

Column vector

This is one of the simplest types of matrices: it contains only one column (in the example, three numbers). A column vector often represents the free terms (numbers that do not depend on the variables) of a system of linear equations.

Row vector

This type is similar to the previous one: it consists of numerical elements (three in the example) arranged in a single row.

Diagonal type

In a diagonal matrix, only the entries of the main diagonal (highlighted in green) can be nonzero. The main diagonal begins with the element in the upper left corner and ends with the element in the lower right corner. All other entries are zero. A diagonal matrix is always square. A special case of a diagonal matrix is the scalar matrix, in which all diagonal entries are equal.

Identity type

A special case of a diagonal matrix in which all diagonal entries equal one. The identity matrix is used when performing basic matrix transformations and when finding the inverse of a matrix.

Canonical type

The canonical form of a matrix is considered one of the main ones; reducing a matrix to it is often necessary. The numbers of rows and columns of a canonical matrix are arbitrary, so it is not necessarily square. It resembles the identity matrix, but not all entries of its main diagonal need to equal one: the ones occupy the first positions of the main diagonal, and their number depends on the matrix (in the examples, there are two or four of them). There may also be no ones at all, in which case the matrix is the zero matrix. All remaining entries of a canonical matrix, including the diagonal entries after the ones, are zero.

Triangular type

One of the most important types of matrix, used when computing determinants and when performing simple operations. Like a diagonal matrix, a triangular matrix is square. Triangular matrices are divided into upper triangular and lower triangular ones.

In an upper triangular matrix (Fig. 1), all entries below the main diagonal are zero, while the entries on the diagonal and above it can take any values.

In a lower triangular matrix (Fig. 2), conversely, all entries above the main diagonal are zero.

Step type

This type is needed to find the rank of a matrix and, along with the triangular type, for elementary operations on matrices. The step matrix is so named because its zeros form characteristic "steps" (as shown in the figure): the first nonzero entry of each row lies strictly to the right of the first nonzero entry of the row above, and all entries below these leading entries are zero. In addition, if a step matrix has a zero row, then all rows below it are zero as well.

Thus, we examined the most important types of matrices necessary to work with them. Now let's look at the problem of converting the matrix into the required form.

Reducing to triangular form

How do you bring a matrix to triangular form? Most often, a matrix is transformed to triangular form in order to find its determinant. When doing so, it is extremely important to keep track of the main diagonal and to use only transformations that do not change the determinant (such as adding a multiple of one row to another), because the determinant of a triangular matrix equals the product of the entries of its main diagonal. Let me also recall alternative methods for finding the determinant. Determinants of the second and third order can be found with explicit formulas; for a third-order matrix, for example, you can use the triangle rule. For larger matrices, the determinant is expanded along a row or a column, that is, computed through minors and algebraic complements.
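The procedure can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not a method taken from any library: the function name `det_by_triangular_form` is hypothetical, the matrix is assumed to be a list of rows, and exact `Fraction` arithmetic avoids rounding. Besides adding multiples of rows, the sketch may swap two rows when a diagonal entry is zero; each swap flips the sign of the determinant.

```python
from fractions import Fraction


def det_by_triangular_form(matrix):
    """Determinant via row reduction to upper triangular form (hypothetical helper).

    Only two kinds of row operations are used:
    * adding a multiple of one row to another (determinant unchanged);
    * swapping two rows (determinant changes sign).
    """
    a = [[Fraction(x) for x in row] for row in matrix]
    n = len(a)
    sign = 1
    for col in range(n):
        # Find a row at or below the diagonal with a nonzero entry in this column.
        pivot = next((r for r in range(col, n) if a[r][col] != 0), None)
        if pivot is None:
            return Fraction(0)  # no usable pivot: the determinant is zero
        if pivot != col:
            a[col], a[pivot] = a[pivot], a[col]
            sign = -sign
        # Turn every entry below the diagonal in this column into zero.
        for r in range(col + 1, n):
            factor = a[r][col] / a[col][col]
            a[r] = [x - factor * y for x, y in zip(a[r], a[col])]
    # Determinant of a triangular matrix = product of its main-diagonal entries.
    result = Fraction(sign)
    for i in range(n):
        result *= a[i][i]
    return result


print(det_by_triangular_form([[2, 1], [4, 5]]))  # 6
print(det_by_triangular_form([[0, 1], [1, 0]]))  # -1 (one row swap)
```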

Let us analyze in detail the process of reducing a matrix to a triangular form using examples of some tasks.

Exercise 1

Find the determinant of the given matrix by reducing it to triangular form.

The given matrix is a third-order square matrix. Therefore, to transform it into triangular form, we need to turn two entries of the first column and one entry of the second column into zeros.

To bring it to triangular form, we start from the lower left corner of the matrix, the number 6. To turn it into zero, multiply the first row by three and subtract the result from the last row.

Important! The first row itself does not change; it stays the same as in the original matrix, so there is no need to write down its multiplied copy. Only the rows in which entries are being turned into zeros change.

Only the last entry remains: the element in the third row and second column, the number (-1). To turn it into zero, subtract the second row from the third.

Multiplying the entries of the main diagonal, we get:

detA = 2 x (-1) x 11 = -22.

This means that the answer to the task is -22.

Task 2

Find the determinant of the matrix by reducing it to triangular form.

The given matrix is square, of the fourth order. This means that we need to turn three entries of the first column, two entries of the second column and one entry of the third column into zeros.

Let's start from the element in the lower left corner, the number 4. The easiest way to turn it into zero is to multiply the first row by four and subtract the result from the fourth row. Let's write down the result of the first stage of the transformation.

The first entry of the fourth row is now zero. Let's move on to the first element of the third row, the number 3, and perform a similar operation: multiply the first row by three, subtract it from the third row and write down the result.

We have turned into zeros all entries of the first column except the number 1, an element of the main diagonal that does not need to be transformed. Now it is important to keep the zeros we obtained, so we transform rows, not columns. Let's move on to the second column.

We start again from the bottom, with the element in the second column of the last row, the number (-7). However, here it is more convenient to start with the number (-1) in the second column of the third row. To turn it into zero, subtract the second row from the third. Then multiply the second row by seven and subtract it from the fourth; this gives zero in the fourth row of the second column. Now let's move on to the third column.

In this column we need to turn only one number into zero, the 4. This is easy: add the third row to the last row, and the required zero appears.

After all these transformations, the matrix is in triangular form. To find its determinant, we only need to multiply the entries of the main diagonal: detA = 1 x (-1) x (-4) x 40 = 160. Therefore, the answer is 160.

So, now the question of reducing the matrix to triangular form will not bother you.

Reducing to stepped form

For elementary operations on matrices, the stepped form is less in demand than the triangular one. It is most often used to find the rank of a matrix (the number of nonzero rows in its stepped form) or to determine linearly dependent and independent rows. However, the stepped form is more universal: it applies not only to square matrices but to all others as well.

To reduce a matrix to stepped form, you do not need its determinant: any matrix, square or not, can be reduced to stepped form by elementary row transformations. For a square matrix, however, computing the determinant first (by the methods above) is a useful check. If the determinant is nonzero, the stepped form will have no zero rows, and the rank equals the order of the matrix. If it is zero, at least one zero row will appear, and the rank is less than the order. If the result of the reduction disagrees with the determinant, check the record and the transformations for errors.

Let's look at how to reduce a matrix to a stepwise form using examples of several tasks.

Exercise 1. Find the rank of the given matrix.

Before us is a third-order square matrix (3x3). To find its rank, we reduce it to stepped form. First, as a check, we find the determinant of the matrix using the triangle rule: detA = (1 x 5 x 0) + (2 x 1 x 2) + (6 x 3 x 4) - (1 x 1 x 4) - (2 x 3 x 0) - (6 x 5 x 2) = 12.

The determinant equals 12. It is nonzero, so the stepped form will have no zero rows. Let's start transforming the matrix.

We begin with the element of the left column in the third row, the number 2. Multiply the first row by two and subtract it from the third. This operation turns into zero both the element we need and the number 4, the element of the second column of the third row. Similarly, subtracting the first row multiplied by three from the second row turns the first element of the second row into zero.

As a result of the reduction, a triangular matrix is formed. No further transformation is needed: the remaining entries of the main diagonal are nonzero and cannot be turned into zeros.

We conclude that the number of nonzero rows in the stepped form of this matrix, that is, its rank, is 3. The answer: 3.
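The figure with this matrix is not reproduced, but its entries can be read off the six triangle-rule products above, assuming the usual order of terms: A = [[1, 2, 6], [3, 5, 1], [2, 4, 0]]. This reading also matches the step that turns the 2 and the 4 in the third row into zeros. The sketch below, with hypothetical helper names, recomputes det A by the triangle rule and counts the nonzero rows left after elimination.

```python
from fractions import Fraction


def det3_triangle_rule(m):
    """Triangle rule for a 3x3 determinant (hypothetical helper)."""
    (a, b, c), (d, e, f), (g, h, i) = m
    return a * e * i + b * f * g + c * d * h - a * f * h - b * d * i - c * e * g


def rank(matrix):
    """Rank = number of nonzero rows after reducing to stepped form."""
    a = [[Fraction(x) for x in row] for row in matrix]
    rows, cols = len(a), len(a[0])
    r = 0  # index of the next pivot row
    for c in range(cols):
        pivot = next((i for i in range(r, rows) if a[i][c] != 0), None)
        if pivot is None:
            continue  # no pivot in this column; move to the next one
        a[r], a[pivot] = a[pivot], a[r]
        for i in range(r + 1, rows):
            factor = a[i][c] / a[r][c]
            a[i] = [x - factor * y for x, y in zip(a[i], a[r])]
        r += 1
        if r == rows:
            break
    return r


A = [[1, 2, 6], [3, 5, 1], [2, 4, 0]]  # reconstructed from the triangle-rule terms
print(det3_triangle_rule(A))  # 12
print(rank(A))                # 3
```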

Task 2. Determine the number of linearly independent rows of this matrix.

We need to find the rows that cannot be turned into zero rows by any transformation, that is, the number of nonzero rows in the stepped form, or the rank of the given matrix. To do this, let's simplify it.

This matrix is not square: its size is 3x4. Again, we start the reduction with the element in the lower left corner, the number (-1).

No further transformations are possible. We conclude that the number of linearly independent rows, and hence the answer to the task, is 3.

Now reducing the matrix to a stepped form is not an impossible task for you.

Using these tasks as examples, we have examined the reduction of a matrix to triangular form and to stepped form. To turn the required entries of a matrix into zeros, you sometimes need some ingenuity in choosing how to combine its rows or columns. Good luck in mathematics and in working with matrices!

To bring the matrix into a stepped form (Fig. 1.4), you need to perform the following steps.

1. In the first column, choose a nonzero element (the leading element). If the row containing the leading element (the leading row) is not the first one, swap it with the first row (type I transformation). If the first column has no leading element (all its elements are zero), exclude this column and continue searching for a leading element in the rest of the matrix. The transformation ends when all columns have been excluded or the rest of the matrix consists of zeros.

2. Divide all elements of the leading row by the leading element (type II transformation). If the leading row is the last one, the transformation ends here.

3. To each row below the leading one, add the leading row multiplied by a number chosen so that the element under the leading element becomes zero (type III transformation).

4. Exclude from consideration the row and the column containing the leading element, and go to step 1, applying all the described actions to the rest of the matrix.
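Steps 1-4 translate almost line by line into the following Python sketch. The function name `to_stepped_form` is hypothetical; rows are assumed to be lists, and `Fraction` keeps the division in step 2 exact. Counting the nonzero rows of the result gives the rank.

```python
from fractions import Fraction


def to_stepped_form(matrix):
    """Reduce a matrix to stepped form following steps 1-4 (hypothetical helper)."""
    a = [[Fraction(x) for x in row] for row in matrix]
    rows = len(a)
    cols = len(a[0]) if rows else 0
    top = 0  # first row of the part of the matrix still under consideration
    for col in range(cols):
        if top == rows:
            break
        # Step 1: look for a nonzero (leading) element in this column.
        lead = next((r for r in range(top, rows) if a[r][col] != 0), None)
        if lead is None:
            continue  # the column is zero here: exclude it and move right
        a[top], a[lead] = a[lead], a[top]  # type I: move the leading row up
        # Step 2: divide the leading row by the leading element (type II).
        pivot = a[top][col]
        a[top] = [x / pivot for x in a[top]]
        # Step 3: clear the entries below the leading element (type III).
        for r in range(top + 1, rows):
            factor = a[r][col]
            a[r] = [x - factor * y for x, y in zip(a[r], a[top])]
        # Step 4: exclude the leading row and column, repeat on the rest.
        top += 1
    return a


for row in to_stepped_form([[0, 2, 4], [1, 1, 1], [2, 2, 2]]):
    print([str(x) for x in row])
# prints ['1', '1', '1'], then ['0', '1', '2'], then ['0', '0', '0']
```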

The theorem on the expansion of a determinant along a row.

The theorem on the expansion of a determinant along the elements of a row or column reduces the calculation of a determinant of order n to the calculation of determinants of order n − 1.

If the determinant has elements equal to zero, it is most convenient to expand it along the row or column that contains the most zeros.

Using the properties of determinants, you can transform a determinant of order n so that all elements of some row or column, except one, become zero. Then, unless that entire row or column is zero, the calculation of the determinant of order n reduces to the calculation of a single determinant of order n − 1.
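As an illustration of expansion along the row or column with the most zeros, here is a recursive Python sketch (the name `det_by_expansion` is hypothetical). It skips zero elements, since they contribute nothing to the sum, which is exactly why a row or column rich in zeros is convenient. The amount of work in plain recursive expansion grows roughly like n!, so for larger matrices reduction to triangular form is preferable.

```python
def det_by_expansion(m):
    """Determinant by expansion along the row or column with most zeros."""
    n = len(m)
    if n == 1:
        return m[0][0]

    def minor(i, j):
        # Delete row i and column j.
        return [row[:j] + row[j + 1:] for k, row in enumerate(m) if k != i]

    # Count the zeros in each row and in each column.
    row_zeros = [sum(1 for x in m[i] if x == 0) for i in range(n)]
    col_zeros = [sum(1 for i in range(n) if m[i][j] == 0) for j in range(n)]
    best_row = max(range(n), key=lambda i: row_zeros[i])
    best_col = max(range(n), key=lambda j: col_zeros[j])

    total = 0
    if row_zeros[best_row] >= col_zeros[best_col]:
        i = best_row
        for j in range(n):
            if m[i][j] != 0:  # zero elements contribute nothing
                total += (-1) ** (i + j) * m[i][j] * det_by_expansion(minor(i, j))
    else:
        j = best_col
        for i in range(n):
            if m[i][j] != 0:
                total += (-1) ** (i + j) * m[i][j] * det_by_expansion(minor(i, j))
    return total


print(det_by_expansion([[1, 2], [3, 4]]))  # -2
```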

Task 3.1. Compute the determinant

Solution. Adding the first row to the second row, the first row multiplied by 2 to the third row, and the first row multiplied by -5 to the fourth row, we get

Expanding the determinant along the first column, we have


In the resulting third-order determinant, let us turn into zeros all elements of the first column except the first. To do this, we add the first row multiplied by (-1) to the second row; we multiply the third row by 5 and then add the first row multiplied by 8 to it. Since we multiplied the third row by 5, we multiply the determinant by 1/5 so that its value does not change. We have

Let us expand the resulting determinant along the first column:

Laplace's theorem (1). Theorem on alien algebraic complements (2)

1) The determinant equals the sum of the products of the elements of any row (or column) and their algebraic complements.

2) The sum of the products of elements of any row of a determinant by the algebraic complements of the corresponding elements of its other row is equal to zero (the theorem on multiplication by other algebraic complements).
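Both theorems can be checked numerically. In the sketch below (hypothetical names; the test matrix is arbitrary), `row_times_cofactors(m, i, k)` forms the sum of products of the elements of row i with the algebraic complements of row k. By Laplace's theorem it equals the determinant when i = k, and by the theorem on alien algebraic complements it equals zero when i ≠ k.

```python
def minor(m, i, j):
    """Matrix m with row i and column j removed."""
    return [row[:j] + row[j + 1:] for k, row in enumerate(m) if k != i]


def det(m):
    """Determinant by expansion along the first row (Laplace's theorem)."""
    if len(m) == 1:
        return m[0][0]
    return sum((-1) ** j * m[0][j] * det(minor(m, 0, j)) for j in range(len(m)))


def cofactor(m, i, j):
    """Algebraic complement A_ij = (-1)^(i+j) * M_ij."""
    return (-1) ** (i + j) * det(minor(m, i, j))


def row_times_cofactors(m, i, k):
    """Sum over j of a_ij * A_kj: elements of row i, complements of row k."""
    return sum(m[i][j] * cofactor(m, k, j) for j in range(len(m)))


M = [[2, -1, 3], [0, 4, 1], [5, 2, -2]]
for i in range(3):
    for k in range(3):
        print(i, k, row_times_cofactors(M, i, k))  # -85 when i == k, 0 otherwise
```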

Every point on the plane with the chosen coordinate system is specified by a pair (α, β) of its coordinates; the numbers α and β can also be understood as the coordinates of a radius vector with an end at this point. Similarly, in space, the triple (α, β, γ) defines a point or vector with coordinates α, β, γ. It is on this that the geometric interpretation of systems of linear equations with two or three unknowns, well known to the reader, is based. Thus, in the case of a system of two linear equations with two unknowns

$$\begin{cases} a_1 x + b_1 y = c_1,\\ a_2 x + b_2 y = c_2 \end{cases}$$

each equation is interpreted as a straight line in the plane (see Fig. 26), and the solution (α, β) as the point of intersection of these lines or as the vector with coordinates α, β (the figure corresponds to the case when the system has a unique solution).

Fig. 26

You can do the same with a system of linear equations with three unknowns, interpreting each equation as an equation of a plane in space.

In mathematics and its various applications (in particular, in coding theory), one has to deal with systems of linear equations with more than three unknowns. A system of linear equations with n unknowns $x_1, x_2, \dots, x_n$ is a set of equations of the form

$$\begin{cases}
a_{11} x_1 + a_{12} x_2 + \dots + a_{1n} x_n = b_1,\\
a_{21} x_1 + a_{22} x_2 + \dots + a_{2n} x_n = b_2,\\
\qquad\qquad\vdots\\
a_{m1} x_1 + a_{m2} x_2 + \dots + a_{mn} x_n = b_m,
\end{cases} \tag{1}$$

where $a_{ij}$ and $b_i$ are arbitrary real numbers. The number of equations in the system can be any and is in no way related to the number of unknowns. The coefficients $a_{ij}$ of the unknowns have two indices: the first index i is the number of the equation, and the second index j is the number of the unknown at which the coefficient stands. A solution of the system is a set of (real) values of the unknowns $(\alpha_1, \alpha_2, \dots, \alpha_n)$ that turns each equation into a true equality.

Although a direct geometric interpretation of system (1) for n > 3 is no longer possible, it is quite possible and in many respects convenient to extend the geometric language of a space of two or three dimensions to the case of arbitrary n. Further definitions serve this purpose.

Any ordered set of n real numbers $(\alpha_1, \alpha_2, \dots, \alpha_n)$ is called an n-dimensional arithmetic vector, and the numbers $\alpha_1, \alpha_2, \dots, \alpha_n$ themselves are the coordinates of this vector.

To designate vectors, as a rule, bold font is used, and for a vector a with coordinates $\alpha_1, \alpha_2, \dots, \alpha_n$ the usual notation is kept:

$$a = (\alpha_1, \alpha_2, \dots, \alpha_n).$$

By analogy with an ordinary plane, the set of all n-dimensional vectors satisfying a linear equation with n unknowns is called a hyperplane in n-dimensional space. With this definition, the set of all solutions to system (1) is nothing more than the intersection of several hyperplanes.

Addition of n-dimensional vectors and multiplication of a vector by a number are defined by the same rules as for ordinary vectors. Namely, if

$$a = (\alpha_1, \alpha_2, \dots, \alpha_n), \quad b = (\beta_1, \beta_2, \dots, \beta_n) \tag{2}$$

are two n-dimensional vectors, then their sum is the vector

$$a + b = (\alpha_1 + \beta_1, \alpha_2 + \beta_2, \dots, \alpha_n + \beta_n). \tag{3}$$

The product of the vector a and the number λ is the vector

$$\lambda a = (\lambda\alpha_1, \lambda\alpha_2, \dots, \lambda\alpha_n). \tag{4}$$

The set of all n-dimensional arithmetic vectors with the operations of adding vectors and multiplying a vector by a number is called the arithmetic n-dimensional vector space $L_n$.

Using the introduced operations, one can consider arbitrary linear combinations of several vectors, i.e., expressions of the form

$$\lambda_1 a_1 + \lambda_2 a_2 + \dots + \lambda_k a_k,$$

where $\lambda_i$ are real numbers. For example, the linear combination of vectors (2) with coefficients λ and μ is the vector

$$\lambda a + \mu b = (\lambda\alpha_1 + \mu\beta_1, \lambda\alpha_2 + \mu\beta_2, \dots, \lambda\alpha_n + \mu\beta_n).$$

In a three-dimensional vector space, a special role is played by the triple of vectors i, j, k (coordinate unit vectors), into which any vector a is decomposed:

a = xi + yj + zk,

where x, y, z are real numbers (coordinates of vector a).

In the n-dimensional case, the following system of vectors plays the same role:

$$\begin{aligned}
e_1 &= (1, 0, 0, \dots, 0),\\
e_2 &= (0, 1, 0, \dots, 0),\\
e_3 &= (0, 0, 1, \dots, 0),\\
&\;\;\vdots\\
e_n &= (0, 0, 0, \dots, 1).
\end{aligned} \tag{5}$$

Any vector a is, obviously, a linear combination of the vectors $e_1, e_2, \dots, e_n$:

$$a = \alpha_1 e_1 + \alpha_2 e_2 + \dots + \alpha_n e_n, \tag{6}$$

and the coefficients $\alpha_1, \alpha_2, \dots, \alpha_n$ coincide with the coordinates of the vector a.

Denoting by 0 a vector whose all coordinates are equal to zero (in short, the zero vector), we introduce the following important definition:

A system of vectors $a_1, a_2, \dots, a_k$ is called linearly dependent if there is a linear combination equal to the zero vector,

$$\lambda_1 a_1 + \lambda_2 a_2 + \dots + \lambda_k a_k = 0,$$

in which at least one of the coefficients $\lambda_1, \lambda_2, \dots, \lambda_k$ is different from zero. Otherwise the system is called linearly independent.

Thus, the vectors

$$a_1 = (1, 0, 1, 1), \quad a_2 = (1, 2, 1, 1), \quad a_3 = (2, 2, 2, 2)$$

are linearly dependent because

$$a_1 + a_2 - a_3 = 0.$$
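A quick way to test this numerically uses the fact, stated later in this text, that the rank of a system of vectors is the number of nonzero rows left after Gaussian elimination: the system is linearly dependent exactly when its rank is smaller than the number of vectors. The helper names below are hypothetical.

```python
from fractions import Fraction


def rank(vectors):
    """Number of nonzero rows after Gaussian elimination (rank of the system)."""
    a = [[Fraction(x) for x in v] for v in vectors]
    r = 0
    for c in range(len(a[0])):
        pivot = next((i for i in range(r, len(a)) if a[i][c] != 0), None)
        if pivot is None:
            continue
        a[r], a[pivot] = a[pivot], a[r]
        for i in range(r + 1, len(a)):
            f = a[i][c] / a[r][c]
            a[i] = [x - f * y for x, y in zip(a[i], a[r])]
        r += 1
    return r


def is_linearly_dependent(vectors):
    # Dependent exactly when the rank is less than the number of vectors.
    return rank(vectors) < len(vectors)


a1, a2, a3 = (1, 0, 1, 1), (1, 2, 1, 1), (2, 2, 2, 2)
combo = tuple(x + y - z for x, y, z in zip(a1, a2, a3))
print(combo)                                # (0, 0, 0, 0)
print(is_linearly_dependent([a1, a2, a3]))  # True
print(is_linearly_dependent([a1, a2]))      # False
```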

A linear dependence, as can be seen from the definition, is equivalent (for k ≥ 2) to the fact that at least one of the vectors of the system is a linear combination of the others.

If the system consists of two vectors $a_1$ and $a_2$, then its linear dependence means that one of the vectors is proportional to the other, say, $a_1 = \lambda a_2$; in the three-dimensional case this is equivalent to the collinearity of the vectors $a_1$ and $a_2$. In the same way, the linear dependence of a system of three vectors in ordinary space means that these vectors are coplanar. The concept of linear dependence is thus a natural generalization of the concepts of collinearity and coplanarity.

It is easy to verify that the vectors $e_1, e_2, \dots, e_n$ from system (5) are linearly independent. Consequently, n-dimensional space contains systems of n linearly independent vectors. It can be shown that every system of more than n vectors is linearly dependent.

Any system $a_1, a_2, \dots, a_n$ of n linearly independent vectors of the n-dimensional space $L_n$ is called its basis.

Any vector a of the space $L_n$ can be decomposed, and in a unique way, in terms of an arbitrary basis $a_1, a_2, \dots, a_n$:

$$a = \lambda_1 a_1 + \lambda_2 a_2 + \dots + \lambda_n a_n.$$

This fact is easily established based on the definition of the basis.
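To find the coefficients $\lambda_1, \dots, \lambda_n$ in practice, one solves a linear system whose columns are the basis vectors. The sketch below (hypothetical name `coordinates_in_basis`; the basis is an arbitrary example) uses Gauss-Jordan elimination. Because a basis is linearly independent, a pivot is found in every column, so the solution exists and is unique.

```python
from fractions import Fraction


def coordinates_in_basis(basis, a):
    """Solve a = λ1*a1 + ... + λn*an for the λi (hypothetical helper).

    basis: n linearly independent n-dimensional vectors a1..an.
    Raises ValueError if they turn out to be linearly dependent.
    """
    n = len(basis)
    # Augmented matrix: column j holds the basis vector a_j, the last column holds a.
    m = [[Fraction(basis[j][i]) for j in range(n)] + [Fraction(a[i])]
         for i in range(n)]
    for c in range(n):
        pivot = next((r for r in range(c, n) if m[r][c] != 0), None)
        if pivot is None:
            raise ValueError("the vectors are linearly dependent, not a basis")
        m[c], m[pivot] = m[pivot], m[c]
        lead = m[c][c]
        m[c] = [x / lead for x in m[c]]
        # Clear column c in every other row (above and below).
        for r in range(n):
            if r != c and m[r][c] != 0:
                f = m[r][c]
                m[r] = [x - f * y for x, y in zip(m[r], m[c])]
    return [row[-1] for row in m]


basis = [(1, 1, 0), (0, 1, 1), (1, 0, 1)]
lams = coordinates_in_basis(basis, (2, 3, 1))
print([int(x) for x in lams])  # [2, 1, 0]
```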

Continuing the analogy with three-dimensional space, in the n-dimensional case one can define the scalar product $a \cdot b$ of vectors by setting

$$a \cdot b = \alpha_1\beta_1 + \alpha_2\beta_2 + \dots + \alpha_n\beta_n.$$

With this definition, all the basic properties of the scalar product of three-dimensional vectors are preserved. Vectors a and b are called orthogonal if their scalar product is equal to zero:

$$\alpha_1\beta_1 + \alpha_2\beta_2 + \dots + \alpha_n\beta_n = 0.$$
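In code, the scalar product and the orthogonality test are one-liners. The sketch below assumes vectors are given as tuples of numbers; the function names are hypothetical and the sample vectors are arbitrary.

```python
def dot(a, b):
    """Scalar product a · b = α1β1 + ... + αnβn of two n-dimensional vectors."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same dimension")
    return sum(x * y for x, y in zip(a, b))


def are_orthogonal(a, b):
    return dot(a, b) == 0


print(dot((1, 2, 3, 4), (4, 3, 2, 1)))              # 20
print(are_orthogonal((1, 0, 1, 1), (1, 1, -1, 0)))  # True
```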

The theory of linear codes uses another important concept, the concept of a subspace. A subset V of a space $L_n$ is called a subspace of this space if

1) for any vectors a, b belonging to V, their sum a + b also belongs to V;

2) for any vector a belonging to V and any real number λ, the vector λa also belongs to V.

For example, the set of all linear combinations of the vectors $e_1, e_2$ from system (5) is a subspace of the space $L_n$.

In linear algebra it is proved that in any subspace V there is a linearly independent system of vectors $a_1, a_2, \dots, a_k$ such that every vector a of the subspace is a linear combination of these vectors:

$$a = \lambda_1 a_1 + \lambda_2 a_2 + \dots + \lambda_k a_k.$$

The indicated system of vectors is called the basis of the subspace V.

From the definitions of a space and a subspace it immediately follows that the space $L_n$ is a commutative group with respect to the operation of vector addition, and each of its subspaces V is a subgroup of this group. In this sense, one can, for example, consider cosets of the space $L_n$ with respect to the subspace V.

In conclusion, we emphasize that if in the theory of n-dimensional arithmetic space, instead of real numbers (i.e., elements of the field of real numbers), we consider the elements of an arbitrary field F, then all the definitions and facts given above would remain valid.

In coding theory, an important role is played by the case when the field F is the field of residues $\mathbb{Z}_p$, which, as we know, is finite. In this case, the corresponding n-dimensional space is also finite and, as is easy to see, contains $p^n$ elements.

The concept of space, like the concepts of group and ring, also admits an axiomatic definition. For details, we refer the reader to any linear algebra course.
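For small p and n, the finite space over $\mathbb{Z}_p$ can simply be enumerated. The sketch below (hypothetical helper names; the generating vectors are arbitrary) counts the $p^n$ vectors, builds the set of all linear combinations of two vectors over $\mathbb{Z}_2$, and checks the two subspace conditions 1) and 2) directly.

```python
from itertools import product


def all_vectors(p, n):
    """Every n-dimensional vector with coordinates in Z_p = {0, ..., p-1}."""
    return list(product(range(p), repeat=n))


def span_mod_p(generators, p):
    """All linear combinations of the generators with coefficients in Z_p."""
    n = len(generators[0])
    result = set()
    for coeffs in product(range(p), repeat=len(generators)):
        v = tuple(sum(c * g[i] for c, g in zip(coeffs, generators)) % p
                  for i in range(n))
        result.add(v)
    return result


def is_subspace(vectors, p):
    """Check closure under addition and under multiplication by scalars in Z_p."""
    vs = set(vectors)
    for a in vs:
        for b in vs:
            if tuple((x + y) % p for x, y in zip(a, b)) not in vs:
                return False
        for lam in range(p):
            if tuple((lam * x) % p for x in a) not in vs:
                return False
    return True


print(len(all_vectors(3, 4)))  # 81 = 3**4
V = span_mod_p([(1, 0, 1, 1), (0, 1, 1, 0)], 2)
print(len(V), is_subspace(V, 2))  # 4 True
```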

Linear combination. Linearly dependent and independent vector systems.

Linear combination of vectors

A linear combination of vectors $a_1, a_2, \dots, a_k$ is the vector

$$\lambda_1 a_1 + \lambda_2 a_2 + \dots + \lambda_k a_k,$$

where $\lambda_1, \lambda_2, \dots, \lambda_k$ are the coefficients of the linear combination. If all the coefficients are zero, the combination is called trivial; otherwise it is called non-trivial.

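Linear dependence and independence of vectors

A system $a_1, a_2, \dots, a_k$ is linearly dependent if some non-trivial linear combination of its vectors equals the zero vector.

A system $a_1, a_2, \dots, a_k$ is linearly independent if only the trivial linear combination of its vectors equals the zero vector.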

Criterion for linear dependence of vectors

For vectors $a_1, a_2, \dots, a_r$ (r > 1) to be linearly dependent, it is necessary and sufficient that at least one of these vectors is a linear combination of the others.

Dimension of linear space

A linear space V is called n-dimensional (has dimension n) if:

1) it contains n linearly independent vectors;

2) any system of n + 1 vectors is linearly dependent.

Notation: n = dim V.

A system of vectors $a_1, a_2, \dots, a_k$ is called linearly dependent if there is a set of numbers $\lambda_1, \lambda_2, \dots, \lambda_k$, not all zero, such that the linear combination equals zero:

$$\lambda_1 a_1 + \lambda_2 a_2 + \dots + \lambda_k a_k = 0.$$

A system of vectors is called linearly independent if the equality to zero of a linear combination

$$\lambda_1 a_1 + \lambda_2 a_2 + \dots + \lambda_k a_k = 0$$

implies that all coefficients are zero: $\lambda_1 = \lambda_2 = \dots = \lambda_k = 0$.

In the general case, the question of the linear dependence of vectors reduces to the question of whether a homogeneous system of linear equations, whose coefficients are the corresponding coordinates of these vectors, has a nonzero solution.
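Following this remark, the sketch below (hypothetical name `dependence_coefficients`) sets up the homogeneous system whose unknowns are the coefficients $\lambda_1, \dots, \lambda_k$, reduces it by Gauss-Jordan elimination, and returns one nonzero solution, or `None` if only the zero solution exists. It is applied to the vectors $a_1, a_2, a_3$ from the earlier example.

```python
from fractions import Fraction


def dependence_coefficients(vectors):
    """Return λ1..λk, not all zero, with λ1*a1 + ... + λk*ak = 0, or None.

    The unknowns are the λj; equation i says that the i-th coordinates
    of the combination sum to zero, so column j of the system holds a_j.
    """
    k, n = len(vectors), len(vectors[0])
    m = [[Fraction(vectors[j][i]) for j in range(k)] for i in range(n)]
    pivot_cols = []
    r = 0
    for c in range(k):
        p = next((i for i in range(r, n) if m[i][c] != 0), None)
        if p is None:
            continue  # no pivot: λ_c is a free unknown
        m[r], m[p] = m[p], m[r]
        lead = m[r][c]
        m[r] = [x / lead for x in m[r]]
        for i in range(n):
            if i != r and m[i][c] != 0:
                f = m[i][c]
                m[i] = [x - f * y for x, y in zip(m[i], m[r])]
        pivot_cols.append(c)
        r += 1
    free = [c for c in range(k) if c not in pivot_cols]
    if not free:
        return None  # only the trivial solution: linearly independent
    # Set the first free unknown to 1 and the other free unknowns to 0.
    lam = [Fraction(0)] * k
    lam[free[0]] = Fraction(1)
    for row, c in enumerate(pivot_cols):
        lam[c] = -m[row][free[0]]
    return lam


lam = dependence_coefficients([(1, 0, 1, 1), (1, 2, 1, 1), (2, 2, 2, 2)])
print([int(x) for x in lam])  # [-1, -1, 1]
```

The result corresponds to −a1 − a2 + a3 = 0, which is the relation a1 + a2 − a3 = 0 multiplied by −1.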

In order to thoroughly understand the concepts of “linear dependence” and “linear independence” of a system of vectors, it is useful to solve problems of the following type:

Linear dependence. Criteria I and II of linear dependence.

A system of vectors is linearly dependent if and only if one of its vectors is a linear combination of the remaining vectors of this system.

Proof. Let the system of vectors $a_1, a_2, \dots, a_k$ be linearly dependent. Then there is a set of coefficients $\lambda_1, \lambda_2, \dots, \lambda_k$ such that $\lambda_1 a_1 + \lambda_2 a_2 + \dots + \lambda_k a_k = 0$, and at least one coefficient is different from zero. Suppose that $\lambda_1 \neq 0$. Then

$$a_1 = -\frac{\lambda_2}{\lambda_1} a_2 - \dots - \frac{\lambda_k}{\lambda_1} a_k,$$

that is, $a_1$ is a linear combination of the remaining vectors of the system.

Conversely, let one of the vectors of the system be a linear combination of the remaining vectors. Suppose this is the vector $a_1$, that is, $a_1 = \mu_2 a_2 + \dots + \mu_k a_k$. Then, obviously, $(-1) a_1 + \mu_2 a_2 + \dots + \mu_k a_k = 0$. We have found a linear combination of the vectors of the system that equals zero and has a nonzero coefficient (equal to −1), so the system is linearly dependent.

Proposition 10.7. If a system of vectors contains a linearly dependent subsystem, then the entire system is linearly dependent.

Proof.

After renumbering, we may assume that the subsystem $a_1, a_2, \dots, a_r$ ($r < k$) of the system $a_1, a_2, \dots, a_k$ is linearly dependent, that is, $\lambda_1 a_1 + \dots + \lambda_r a_r = 0$, and at least one coefficient is different from zero. Consider the linear combination $\lambda_1 a_1 + \dots + \lambda_r a_r + 0 \cdot a_{r+1} + \dots + 0 \cdot a_k$. Obviously, it equals zero, and among its coefficients there is a nonzero one. Hence the entire system is linearly dependent.

Basis of a system of vectors and its main property.

A basis of a nonzero system of vectors is a linearly independent subsystem equivalent to it. A zero system has no basis.

Property 1: The basis of a linearly independent system coincides with the system itself.

Example: A system of linearly independent vectors since none of the vectors can be linearly expressed through the others.

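Property 2 (basis criterion): A linearly independent subsystem of a given system is its basis if and only if it is maximal linearly independent.

Proof. Necessity. Let the subsystem be a basis. Then it is linearly independent, and every vector of the system is expressed linearly through it. Therefore, adding any other vector of the system to the subsystem makes it linearly dependent (by the criterion of linear dependence), so the subsystem is maximal linearly independent. Sufficiency. Let the subsystem be maximal linearly independent. Then adding any vector b of the system to it yields a linearly dependent system; the coefficient of b in the vanishing combination cannot be zero, since the subsystem itself is independent, so b is expressed linearly through the subsystem. Hence the system is equivalent to the subsystem, which is therefore its basis.

Property 3 (main property of a basis): Each vector of the system can be expressed through the basis in a unique way.

Proof. Suppose a vector b is expressed through the basis $a_1, \dots, a_r$ in two ways: $b = \lambda_1 a_1 + \dots + \lambda_r a_r$ and $b = \mu_1 a_1 + \dots + \mu_r a_r$. Then $(\lambda_1 - \mu_1) a_1 + \dots + (\lambda_r - \mu_r) a_r = 0$, and since the basis is linearly independent, $\lambda_i = \mu_i$ for all i, so the two expressions coincide.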

Rank of a system of vectors.

Definition: The rank of a nonzero system of vectors in a linear space is the number of vectors in its basis. The rank of a zero system is, by definition, zero.

Properties of rank:

1) The rank of a linearly independent system equals the number of its vectors.

2) The rank of a linearly dependent system is less than the number of its vectors.

3) The ranks of equivalent systems coincide.

4) The rank of a subsystem is less than or equal to the rank of the system.

5) If a subsystem has the same rank as the whole system, then they have a common basis.

6) The rank of a system does not change if a vector that is a linear combination of the other vectors of the system is added to it.

7) The rank of a system does not change if a vector that is a linear combination of the remaining vectors is removed from it.

To find the rank of a system of vectors, use the Gaussian method to reduce the system to triangular or trapezoidal form.

Equivalent vector systems.

Example:

Let's write the given vectors as a matrix in order to find a basis. We get:

Now, using the Gaussian method, we transform the matrix to trapezoidal form:

1) In the main matrix, we turn into zeros the entire first column except the first row: from the second row we subtract a suitable multiple of the first, from the third row we subtract a suitable multiple of the first, and from the fourth row we subtract nothing, since its first element (at the intersection of the first column and the fourth row) is already zero. We get the matrix:

2) Now, for ease of solution, we permute rows 2, 3 and 4 so that a one stands in the leading position: the fourth row takes the place of the second, the second takes the place of the third, and the third takes the place of the fourth. We get the matrix:

3) We turn into zeros all elements under the leading element of the second row. Since the corresponding element of the fourth row is again zero, we subtract nothing from the fourth row, and to the third row we add a suitable multiple of the second. We get the matrix:

4) Swap rows 3 and 4 again. We get the matrix:

5) Add the third row, multiplied by 5, to the fourth row. We obtain a matrix in triangular form:

Since the original and the resulting systems are equivalent, their ranks coincide by the properties of rank, and the rank equals the number of nonzero rows of the resulting matrix.

Notes: 1) Unlike in the traditional Gaussian method, here we may not divide all elements of a single matrix row by a certain number, because of the properties of the matrix; if we want to divide a row by a number, we have to divide the entire matrix by this number. 2) If a row turns out to be linearly dependent on the others, we can remove it from the matrix and replace it with a zero row. Example:

You can immediately see that the second row is expressed through the first: it equals the first row multiplied by 2. In this case, we can replace the entire second row with zeros. We get:

As a result, once the matrix has been brought to triangular or trapezoidal form with no linearly dependent rows, all its nonzero row vectors form a basis, and their number is the rank.

Here is also an example of a system of vectors shown graphically. Consider a system where , , and . The basis of this system is obviously formed by the vectors and , since the remaining vectors are expressed through them. In graphical form, this system looks like:

Elementary transformations. Step matrices.

Elementary matrix transformations are transformations that preserve the equivalence of matrices. Thus, elementary transformations do not change the set of solutions of the system of linear algebraic equations that the matrix represents.

Elementary transformations are used in the Gaussian method to reduce a matrix to a triangular or step form.

The following are called elementary row transformations:

1) swapping two rows;

2) multiplying a row by a nonzero number;

3) adding to one row another row multiplied by a number.

In some linear algebra courses, swapping rows is not treated as a separate elementary transformation, because a swap of any two rows can be obtained by a sequence of the other two transformations: multiplying a row by a nonzero constant and adding to a row another row multiplied by a constant.

Elementary column transformations are defined similarly.

Elementary transformations are reversible.

The notation $A \sim B$ indicates that the matrix $B$ can be obtained from $A$ by elementary transformations (or vice versa).

Definition. A matrix is called a step matrix if it has the following properties:

1) if the $i$-th row is zero, then the $(i+1)$-th row is also zero;

2) if the first non-zero elements of the $i$-th and $(i+1)$-th rows are located in the columns numbered $k$ and $r$, respectively, then $k < r$.

Condition 2) requires the number of zeros at the beginning of a row to increase strictly when moving from the $i$-th row to the $(i+1)$-th row. For example, the matrices

$A_1 = $, $A_2 = $, $A_3 = $

are step matrices, and the matrices

$B_1 = $, $B_2 = $, $B_3 = $

are not.

Theorem 5.1. Any matrix can be reduced to an echelon matrix using elementary transformations of the matrix rows.

Let us illustrate this theorem with an example.

A=

The resulting matrix is a step matrix.

Definition. The rank of a matrix is the number of non-zero rows in the echelon form of this matrix.

For example, the rank of matrix A in the previous example is 3.
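The definition of rank suggests a direct procedure: reduce the matrix to step form and count the non-zero rows. Below is a minimal Python sketch of that idea, assuming exact rational arithmetic through the standard `fractions` module; the function names `to_step_form` and `rank` are illustrative, not from the lecture. It uses only two of the elementary transformations (swapping rows and adding a multiple of one row to another).

```python
from fractions import Fraction


def to_step_form(matrix):
    """Reduce a matrix to step (echelon) form with elementary row transformations.

    Works on a copy using exact Fraction arithmetic and returns the new rows.
    """
    rows = [[Fraction(x) for x in row] for row in matrix]
    n_rows = len(rows)
    n_cols = len(rows[0]) if rows else 0
    pivot = 0  # index of the row where the next leading element should go
    for col in range(n_cols):
        if pivot == n_rows:
            break
        # Look for a row at or below `pivot` with a non-zero entry in this column.
        found = next((r for r in range(pivot, n_rows) if rows[r][col] != 0), None)
        if found is None:
            continue  # this column contributes no leading element
        # Transformation: swap two rows.
        rows[pivot], rows[found] = rows[found], rows[pivot]
        # Transformation: add to each lower row the pivot row times a number,
        # chosen so that the entry below the leading element becomes zero.
        for r in range(pivot + 1, n_rows):
            factor = rows[r][col] / rows[pivot][col]
            rows[r] = [a - factor * b for a, b in zip(rows[r], rows[pivot])]
        pivot += 1
    return rows


def rank(matrix):
    """Rank = number of non-zero rows in the step form."""
    return sum(1 for row in to_step_form(matrix) if any(x != 0 for x in row))


print(rank([[1, 2, 3], [2, 4, 6], [1, 0, 1]]))  # 2
```

In the usage line the second row is twice the first, so one row vanishes during elimination and the rank is 2.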

Lecture 6.

Determinants and their properties. Inverse matrix and its calculation.

Second order determinants.

Consider a second order square matrix

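$$A = \left(\begin{array}{cc} a_{11} & a_{12} \\ a_{21} & a_{22} \end{array}\right)$$

Definition. The second-order determinant corresponding to the matrix A is the number calculated by the formula

$$|A| = \left|\begin{array}{cc} a_{11} & a_{12} \\ a_{21} & a_{22} \end{array}\right| = a_{11}a_{22} - a_{12}a_{21}.$$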

The elements $a_{ij}$ are called the elements of the determinant $|A|$; the elements $a_{11}$ and $a_{22}$ form the main diagonal, and the elements $a_{12}$ and $a_{21}$ form the secondary diagonal.

Example. = -28 + 6 = -22
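As a quick check of the second-order formula, here is a direct Python translation. The function name `det2` and the sample matrix `[[1, 2], [3, 4]]` are illustrative choices, not the example from the text.

```python
def det2(m):
    """Second-order determinant: main-diagonal product minus secondary-diagonal product."""
    (a11, a12), (a21, a22) = m
    return a11 * a22 - a12 * a21


print(det2([[1, 2], [3, 4]]))  # -2
```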

Third order determinants.

Consider a third-order square matrix

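$$A = \left(\begin{array}{ccc} a_{11} & a_{12} & a_{13} \\ a_{21} & a_{22} & a_{23} \\ a_{31} & a_{32} & a_{33} \end{array}\right)$$

Definition. The third-order determinant corresponding to matrix A is the number calculated by the formula

$$|A| = \left|\begin{array}{ccc} a_{11} & a_{12} & a_{13} \\ a_{21} & a_{22} & a_{23} \\ a_{31} & a_{32} & a_{33} \end{array}\right| = a_{11}a_{22}a_{33} + a_{12}a_{23}a_{31} + a_{13}a_{21}a_{32} - a_{13}a_{22}a_{31} - a_{11}a_{23}a_{32} - a_{12}a_{21}a_{33}.$$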

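To remember which products on the right-hand side are taken with a plus sign and which with a minus sign, it is useful to know the so-called triangle rule. The products taken with a plus sign are the product of the main-diagonal elements, $a_{11}a_{22}a_{33}$, and the products $a_{12}a_{23}a_{31}$ and $a_{13}a_{21}a_{32}$ of the elements at the vertices of the two triangles that have a side parallel to the main diagonal. The products taken with a minus sign, $a_{13}a_{22}a_{31}$, $a_{11}a_{23}a_{32}$ and $a_{12}a_{21}a_{33}$, are formed in the same way with respect to the secondary diagonal.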

Examples:

1) = -4 + 0 + 4 - 0 + 2 + 6 = 8

2) $\left|\begin{array}{ccc} 1 & 0 & 0 \\ 0 & 1 & 0 \\ 0 & 0 & 1 \end{array}\right| = 1$, i.e. $|E_3| = 1$.
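The triangle rule maps directly onto code: three "plus" products and three "minus" products. A minimal Python sketch follows; `det3` and the test matrices are illustrative assumptions, not from the lecture.

```python
def det3(m):
    """Third-order determinant by the triangle rule."""
    (a11, a12, a13), (a21, a22, a23), (a31, a32, a33) = m
    # Main diagonal and the two triangles with a side parallel to it.
    plus = a11 * a22 * a33 + a12 * a23 * a31 + a13 * a21 * a32
    # Secondary diagonal and the two triangles with a side parallel to it.
    minus = a13 * a22 * a31 + a11 * a23 * a32 + a12 * a21 * a33
    return plus - minus


E3 = [[1, 0, 0], [0, 1, 0], [0, 0, 1]]
print(det3(E3))                                # 1
print(det3([[1, 2, 3], [4, 5, 6], [7, 8, 10]]))  # -3
```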

Let's consider another way to calculate the third-order determinant.

Definition. The minor $M_{ij}$ of the element $a_{ij}$ of a determinant is the determinant obtained from the given one by deleting the $i$-th row and the $j$-th column. The algebraic complement $A_{ij}$ of the element $a_{ij}$ is its minor $M_{ij}$ taken with the sign $(-1)^{i+j}$, i.e. $A_{ij} = (-1)^{i+j}M_{ij}$.

Example. Let's calculate the minor $M_{23}$ and the algebraic complement $A_{23}$ of the element $a_{23}$ in the matrix

A =

The minor $M_{23}$ is

$M_{23} = -6 + 4 = -2$,

so $A_{23} = (-1)^{2+3}M_{23} = 2$.

Theorem 1. The third-order determinant is equal to the sum of the products of the elements of any row (column) and their algebraic complements.

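Proof. By definition,

$$|A| = a_{11}a_{22}a_{33} + a_{12}a_{23}a_{31} + a_{13}a_{21}a_{32} - a_{13}a_{22}a_{31} - a_{11}a_{23}a_{32} - a_{12}a_{21}a_{33}. \qquad (1)$$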

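Let's choose, for example, the second row and find the algebraic complements $A_{21}$, $A_{22}$, $A_{23}$:

$$A_{21} = (-1)^{2+1}\left|\begin{array}{cc} a_{12} & a_{13} \\ a_{32} & a_{33} \end{array}\right| = -(a_{12}a_{33} - a_{13}a_{32}) = a_{13}a_{32} - a_{12}a_{33},$$

$$A_{22} = (-1)^{2+2}\left|\begin{array}{cc} a_{11} & a_{13} \\ a_{31} & a_{33} \end{array}\right| = a_{11}a_{33} - a_{13}a_{31},$$

$$A_{23} = (-1)^{2+3}\left|\begin{array}{cc} a_{11} & a_{12} \\ a_{31} & a_{32} \end{array}\right| = -(a_{11}a_{32} - a_{12}a_{31}) = a_{12}a_{31} - a_{11}a_{32}.$$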

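Let us now transform formula (1) by grouping the terms that contain $a_{21}$, $a_{22}$ and $a_{23}$:

$$|A| = a_{21}(a_{13}a_{32} - a_{12}a_{33}) + a_{22}(a_{11}a_{33} - a_{13}a_{31}) + a_{23}(a_{12}a_{31} - a_{11}a_{32}) = a_{21}A_{21} + a_{22}A_{22} + a_{23}A_{23}.$$

The formula

$$|A| = a_{21}A_{21} + a_{22}A_{22} + a_{23}A_{23}$$

is called the expansion of the determinant $|A|$ along the elements of the second row. Similarly, an expansion can be obtained along the elements of any other row or any column.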
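Theorem 1 can be checked numerically: expanding along any row or any column of a third-order determinant should give the same number. The sketch below assumes a 3x3 matrix stored as a list of rows and uses 1-based indices to match the notation $M_{ij}$, $A_{ij}$; the function names and the sample matrix are illustrative.

```python
def minor(m, i, j):
    """M_ij of a 3x3 matrix: delete row i and column j (1-based), take the 2x2 determinant."""
    sub = [row[:j - 1] + row[j:] for k, row in enumerate(m, start=1) if k != i]
    (a, b), (c, d) = sub
    return a * d - b * c


def cofactor(m, i, j):
    """Algebraic complement A_ij = (-1)^(i+j) * M_ij."""
    return (-1) ** (i + j) * minor(m, i, j)


def expand_row(m, i):
    """|A| = a_i1*A_i1 + a_i2*A_i2 + a_i3*A_i3."""
    return sum(m[i - 1][j - 1] * cofactor(m, i, j) for j in (1, 2, 3))


def expand_col(m, j):
    """|A| = a_1j*A_1j + a_2j*A_2j + a_3j*A_3j."""
    return sum(m[i - 1][j - 1] * cofactor(m, i, j) for i in (1, 2, 3))


A = [[1, 2, 3], [4, 5, 6], [7, 8, 10]]
print([expand_row(A, i) for i in (1, 2, 3)])  # [-3, -3, -3]
print([expand_col(A, j) for j in (1, 2, 3)])  # [-3, -3, -3]
```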

Example.

(expanding along the elements of the second column) $= 1\cdot(-1)^{1+2}M_{12} + 2\cdot(-1)^{2+2}M_{22} + (-1)\cdot(-1)^{3+2}M_{32} = -(0 + 15) + 2(-2 + 20) + (-6 + 0) = -15 + 36 - 6 = 15.$

6.3. Determinant of order $n$ ($n \in \mathbb{N}$).

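Definition. The determinant of order $n$ corresponding to a square matrix of order $n$

$$A = \left(\begin{array}{cccc} a_{11} & a_{12} & \ldots & a_{1n} \\ a_{21} & a_{22} & \ldots & a_{2n} \\ \ldots & \ldots & \ldots & \ldots \\ a_{n1} & a_{n2} & \ldots & a_{nn} \end{array}\right)$$

is the number equal to the sum of the products of the elements of any row (column) by their algebraic complements, i.e.

$$|A| = a_{i1}A_{i1} + a_{i2}A_{i2} + \ldots + a_{in}A_{in} = a_{1j}A_{1j} + a_{2j}A_{2j} + \ldots + a_{nj}A_{nj}.$$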

It is easy to see that for n = 2 we obtain a formula for calculating the second-order determinant.
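The definition is recursive: an $n$-th order determinant is expressed through determinants of order $n-1$. A minimal Python sketch of this, expanding along the first row and assuming that a 1x1 determinant equals its only element, is below; `det` is an illustrative name. For $n = 2$ it reduces to $a_{11}a_{22} - a_{12}a_{21}$.

```python
def det(m):
    """Determinant of an n x n matrix (list of rows) by expansion along the first row."""
    n = len(m)
    if n == 1:
        return m[0][0]  # a 1 x 1 determinant is its only element
    total = 0
    for j in range(n):
        # Minor M_1j: delete the first row and column j (0-based index here).
        minor = [row[:j] + row[j + 1:] for row in m[1:]]
        # The sign (-1)^(1 + (j + 1)) of the algebraic complement equals (-1)^j.
        total += m[0][j] * (-1) ** j * det(minor)
    return total


print(det([[2, 1, 3, 4], [0, 3, 5, 6], [0, 0, 1, 7], [0, 0, 0, 5]]))  # 30
```

The recursion performs on the order of $n!$ multiplications, so it is only practical for small $n$; for larger matrices, reducing to triangular form with elementary row transformations is far cheaper.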

Example.

(expanding along the elements of the 4th row) $= 3\cdot(-1)^{4+2}M_{42} + 2\cdot(-1)^{4+4}M_{44} = 3(-6 + 20 - 2 - 32) + 2(-6 + 16 + 60 + 2) = 3\cdot(-20) + 2\cdot 72 = -60 + 144 = 84.$

Note that if in a determinant all elements of a row (column), except one, are equal to zero, then when calculating the determinant it is convenient to expand it into the elements of this row (column).

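Example.

$$|E_n| = \left|\begin{array}{cccc} 1 & 0 & \ldots & 0 \\ 0 & 1 & \ldots & 0 \\ \ldots & \ldots & \ldots & \ldots \\ 0 & 0 & \ldots & 1 \end{array}\right| = 1\cdot|E_{n-1}| = \ldots = |E_3| = 1.$$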

Properties of determinants.

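Definition. A matrix of the form

$$\left(\begin{array}{cccc} a_{11} & a_{12} & \ldots & a_{1n} \\ 0 & a_{22} & \ldots & a_{2n} \\ \ldots & \ldots & \ldots & \ldots \\ 0 & 0 & \ldots & a_{nn} \end{array}\right)$$

or

$$\left(\begin{array}{cccc} a_{11} & 0 & \ldots & 0 \\ a_{21} & a_{22} & \ldots & 0 \\ \ldots & \ldots & \ldots & \ldots \\ a_{n1} & a_{n2} & \ldots & a_{nn} \end{array}\right)$$

will be called a triangular matrix.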

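Property 1. The determinant of a triangular matrix is equal to the product of the elements of its main diagonal, i.e.

$$\left|\begin{array}{cccc} a_{11} & a_{12} & \ldots & a_{1n} \\ 0 & a_{22} & \ldots & a_{2n} \\ \ldots & \ldots & \ldots & \ldots \\ 0 & 0 & \ldots & a_{nn} \end{array}\right| = \left|\begin{array}{cccc} a_{11} & 0 & \ldots & 0 \\ a_{21} & a_{22} & \ldots & 0 \\ \ldots & \ldots & \ldots & \ldots \\ a_{n1} & a_{n2} & \ldots & a_{nn} \end{array}\right| = a_{11}a_{22}\ldots a_{nn}.$$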

Property 2. The determinant of a matrix with a zero row or zero column is equal to zero.

Property 3. When a matrix is transposed, its determinant does not change, i.e.

$|A| = |A^T|$.

Property 4. If the matrix $B$ is obtained from the matrix $A$ by multiplying each element of some row by the number $k$, then

$|B| = k|A|$.

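Property 5. If each element of some row of a matrix is the sum of two terms, then the determinant equals the sum of two determinants: in the first, this row consists of the first terms, in the second, of the second terms, and all other rows are the same as in the original matrix:

$$\left|\begin{array}{ccc} a_{11} & \ldots & a_{1n} \\ \ldots & \ldots & \ldots \\ b_{i1}+c_{i1} & \ldots & b_{in}+c_{in} \\ \ldots & \ldots & \ldots \\ a_{n1} & \ldots & a_{nn} \end{array}\right| = \left|\begin{array}{ccc} a_{11} & \ldots & a_{1n} \\ \ldots & \ldots & \ldots \\ b_{i1} & \ldots & b_{in} \\ \ldots & \ldots & \ldots \\ a_{n1} & \ldots & a_{nn} \end{array}\right| + \left|\begin{array}{ccc} a_{11} & \ldots & a_{1n} \\ \ldots & \ldots & \ldots \\ c_{i1} & \ldots & c_{in} \\ \ldots & \ldots & \ldots \\ a_{n1} & \ldots & a_{nn} \end{array}\right|.$$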

Property 6. If the matrix $B$ is obtained from the matrix $A$ by swapping two rows, then $|B| = -|A|$.

Property 7. The determinant of a matrix with proportional rows is equal to zero; in particular, the determinant of a matrix with two identical rows is equal to zero.

Property 8. The determinant of a matrix does not change if the elements of another row, multiplied by some number, are added to the elements of one row.

Remark. Since, by property 3, the determinant of a matrix does not change under transposition, all properties stated for the rows of a matrix also hold for its columns.

Property 9. If $A$ and $B$ are square matrices of order $n$, then $|AB| = |A||B|$.
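Several of these properties are easy to check numerically on a concrete example. The sketch below, assuming small integer matrices chosen only for illustration (`A`, `B`, `k` are not from the text), builds the modified matrices each property talks about and compares determinants; a passing check is an illustration, not a proof.

```python
def det(m):
    """Determinant by recursive expansion along the first row."""
    if len(m) == 1:
        return m[0][0]
    return sum((-1) ** j * m[0][j] * det([row[:j] + row[j + 1:] for row in m[1:]])
               for j in range(len(m)))


def transpose(m):
    return [list(col) for col in zip(*m)]


def matmul(a, b):
    """Matrix product: entry (i, j) is row i of a times column j of b."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


A = [[2, 0, 1], [1, 3, -1], [0, 5, 4]]
B = [[1, 2, 0], [0, 1, 1], [3, 0, 1]]
k = 7

checks = {
    "P1 triangular": det([[2, 5, 1], [0, 3, 4], [0, 0, -1]]) == 2 * 3 * (-1),
    "P2 zero row": det([[1, 2, 3], [0, 0, 0], [4, 5, 6]]) == 0,
    "P3 transpose": det(transpose(A)) == det(A),
    "P4 row times k": det([A[0], [k * x for x in A[1]], A[2]]) == k * det(A),
    "P6 swap rows": det([A[2], A[1], A[0]]) == -det(A),
    "P7 equal rows": det([A[0], A[0], A[2]]) == 0,
    "P8 add multiple": det([[a + 4 * c for a, c in zip(A[0], A[2])], A[1], A[2]]) == det(A),
    "P9 product": det(matmul(A, B)) == det(A) * det(B),
}
print(all(checks.values()))  # True
```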

Inverse matrix.

Definition. A square matrix $A$ of order $n$ is called invertible if there exists a matrix $B$ such that $AB = BA = E_n$. In this case, the matrix $B$ is called the inverse of the matrix $A$ and is denoted $A^{-1}$.

Theorem 2. The following statements are true:

1) if a matrix $A$ is invertible, then it has exactly one inverse matrix;

2) an invertible matrix has a nonzero determinant;

3) if $A$ and $B$ are invertible matrices of order $n$, then the matrix $AB$ is invertible, and $(AB)^{-1} = B^{-1}A^{-1}$.

Proof.

1) Let $B$ and $C$ be matrices inverse to the matrix $A$, i.e. $AB = BA = E_n$ and $AC = CA = E_n$. Then $B = BE_n = B(AC) = (BA)C = E_nC = C$.

2) Let the matrix $A$ be invertible. Then there exists its inverse matrix $A^{-1}$, and $AA^{-1} = E_n$.

By property 9 of determinants, $|AA^{-1}| = |A||A^{-1}|$. Then $|A||A^{-1}| = |E_n|$, whence

$|A||A^{-1}| = 1$.

Therefore, $|A| \neq 0$.

3) Indeed,

$(AB)(B^{-1}A^{-1}) = (A(BB^{-1}))A^{-1} = (AE_n)A^{-1} = AA^{-1} = E_n$,

$(B^{-1}A^{-1})(AB) = (B^{-1}(A^{-1}A))B = (B^{-1}E_n)B = B^{-1}B = E_n$.

Therefore, $AB$ is an invertible matrix, and $(AB)^{-1} = B^{-1}A^{-1}$.

The following theorem gives a criterion for the existence of an inverse matrix and a method for calculating it.

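Theorem 3. A square matrix $A$ is invertible if and only if its determinant is nonzero. If $|A| \neq 0$, then

$$A^{-1} = \frac{1}{|A|}\left(\begin{array}{cccc} A_{11} & A_{21} & \ldots & A_{n1} \\ A_{12} & A_{22} & \ldots & A_{n2} \\ \ldots & \ldots & \ldots & \ldots \\ A_{1n} & A_{2n} & \ldots & A_{nn} \end{array}\right),$$

where $A_{ij}$ is the algebraic complement of the element $a_{ij}$.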

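Example. Find the inverse matrix for the matrix $A = \left(\begin{array}{cc} 2 & 1 \\ -1 & 3 \end{array}\right)$.

Solution. $|A| = \left|\begin{array}{cc} 2 & 1 \\ -1 & 3 \end{array}\right| = 6 + 1 = 7$.

Since $|A| \neq 0$, the inverse matrix exists:

$$A^{-1} = \frac{1}{7}\left(\begin{array}{cc} A_{11} & A_{21} \\ A_{12} & A_{22} \end{array}\right).$$

We calculate $A_{11} = 3$, $A_{12} = 1$, $A_{21} = -1$, $A_{22} = 2$.

$$A^{-1} = \frac{1}{7}\left(\begin{array}{cc} 3 & -1 \\ 1 & 2 \end{array}\right) = \left(\begin{array}{cc} 3/7 & -1/7 \\ 1/7 & 2/7 \end{array}\right).$$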
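Theorem 3 translates into a short routine: compute $|A|$, build the algebraic complements, transpose, and divide by the determinant. The sketch below is one way to do it in Python, assuming exact arithmetic with `fractions.Fraction`; the function names are illustrative. It is applied to the matrix $\left(\begin{array}{cc} 2 & 1 \\ -1 & 3 \end{array}\right)$, whose determinant 7 and complements $A_{11}=3$, $A_{12}=1$, $A_{21}=-1$, $A_{22}=2$ agree with the example.

```python
from fractions import Fraction


def det(m):
    """Determinant by expansion along the first row (fine for small matrices)."""
    if len(m) == 1:
        return m[0][0]
    return sum((-1) ** j * m[0][j] * det([row[:j] + row[j + 1:] for row in m[1:]])
               for j in range(len(m)))


def inverse(m):
    """Inverse via A^{-1} = (1/|A|) * (matrix of algebraic complements, transposed)."""
    n = len(m)
    d = det(m)
    if d == 0:
        raise ValueError("|A| = 0, so the matrix has no inverse")
    if n == 1:
        return [[Fraction(1) / d]]

    def cofactor(i, j):
        # A_ij = (-1)^(i+j) * M_ij; with 0-based i, j the sign is the same.
        minor = [row[:j] + row[j + 1:] for k, row in enumerate(m) if k != i]
        return (-1) ** (i + j) * det(minor)

    # Row i, column j of the inverse holds A_ji / |A| (note the swapped indices).
    return [[Fraction(cofactor(j, i)) / d for j in range(n)] for i in range(n)]


A = [[2, 1], [-1, 3]]
A_inv = inverse(A)
print([[str(x) for x in row] for row in A_inv])  # [['3/7', '-1/7'], ['1/7', '2/7']]
```

The transposition step is the usual source of mistakes by hand: the complement $A_{21}$ goes to the first row, second column of the inverse.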

Lecture 7.

Systems of linear equations. Compatibility criterion for a system of linear equations. Gauss method for solving systems of linear equations. Cramer's rule and the matrix method for solving systems of linear equations.

Systems of linear equations.

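A set of equations of the form

$$\left\{\begin{array}{l} a_{11}x_1 + a_{12}x_2 + \ldots + a_{1n}x_n = b_1, \\ a_{21}x_1 + a_{22}x_2 + \ldots + a_{2n}x_n = b_2, \\ \ldots \\ a_{m1}x_1 + a_{m2}x_2 + \ldots + a_{mn}x_n = b_m \end{array}\right. \qquad (1)$$

is called a system of $m$ linear equations with $n$ unknowns $x_1, x_2, \ldots, x_n$. The numbers $a_{ij}$ are called the coefficients of the system, and the numbers $b_i$ are called the free terms.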

A solution of system (1) is a set of numbers $c_1, c_2, \ldots, c_n$ which, when substituted into system (1) in place of $x_1, x_2, \ldots, x_n$, turns every equation into a true numerical equality.

Solving a system means finding all its solutions or proving that there are none. A system is called consistent if it has at least one solution, and inconsistent if there are no solutions.

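The matrix composed of the coefficients of the system,

$$A = \left(\begin{array}{cccc} a_{11} & a_{12} & \ldots & a_{1n} \\ a_{21} & a_{22} & \ldots & a_{2n} \\ \ldots & \ldots & \ldots & \ldots \\ a_{m1} & a_{m2} & \ldots & a_{mn} \end{array}\right),$$

is called the matrix of system (1). If we append the column of free terms to the system matrix, we obtain the matrix

$$B = \left(\begin{array}{ccccc} a_{11} & a_{12} & \ldots & a_{1n} & b_1 \\ a_{21} & a_{22} & \ldots & a_{2n} & b_2 \\ \ldots & \ldots & \ldots & \ldots & \ldots \\ a_{m1} & a_{m2} & \ldots & a_{mn} & b_m \end{array}\right),$$

which is called the extended matrix of system (1).

If we denote

$$X = \left(\begin{array}{c} x_1 \\ x_2 \\ \ldots \\ x_n \end{array}\right), \quad C = \left(\begin{array}{c} b_1 \\ b_2 \\ \ldots \\ b_m \end{array}\right),$$

then system (1) can be written as the matrix equation $AX = C$.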
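The matrix form $AX = C$ gives a mechanical test for a candidate solution: multiply $A$ by $X$ and compare with $C$. A small Python sketch follows; the two-equation system and the helper names are hypothetical, chosen only to illustrate the definitions of the extended matrix and of a solution.

```python
def extended_matrix(a, c):
    """Append the column of free terms C to the system matrix A."""
    return [row + [b] for row, b in zip(a, c)]


def mat_vec(a, x):
    """The product AX for a matrix A and a column X (stored as a flat list)."""
    return [sum(a_ij * x_j for a_ij, x_j in zip(row, x)) for row in a]


def is_solution(a, c, x):
    """x is a solution exactly when AX = C."""
    return mat_vec(a, x) == list(c)


# Hypothetical system:  x1 + 2*x2 = 5,  3*x1 - x2 = 1
A = [[1, 2], [3, -1]]
C = [5, 1]
print(extended_matrix(A, C))      # [[1, 2, 5], [3, -1, 1]]
print(is_solution(A, C, [1, 2]))  # True
print(is_solution(A, C, [2, 1]))  # False
```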

In this topic we will consider the concept of a matrix, as well as types of matrices. Since there are a lot of terms in this topic, I will add a brief summary to make it easier to navigate the material.

Definition of a matrix and its element. Notation.

A matrix is a table of $m$ rows and $n$ columns. The elements of a matrix can be objects of a completely different nature: numbers, variables or, for example, other matrices. For example, the matrix $\left(\begin{array}{cc} 5 & 3 \\ 0 & -87 \\ 8 & 0 \end{array} \right)$ contains 3 rows and 2 columns; its elements are integers. The matrix $\left(\begin{array}{cccc} a & a^9+2 & 9 & \sin x \\ -9 & 3t^2-4 & u-t & 8 \end{array} \right)$ contains 2 rows and 4 columns.

Different ways to write matrices.

The matrix can be written not only in round, but also in square or double straight brackets. Below is the same matrix in different notation forms:

$$ \left(\begin{array}{cc} 5 & 3 \\ 0 & -87 \\ 8 & 0 \end{array} \right);\;\; \left[ \begin{array}{cc} 5 & 3 \\ 0 & -87 \\ 8 & 0 \end{array} \right]; \;\; \left \Vert \begin{array}{cc} 5 & 3 \\ 0 & -87 \\ 8 & 0 \end{array} \right \Vert $$

The expression $m\times n$ is called the size of the matrix. For example, if a matrix contains 5 rows and 3 columns, we speak of a matrix of size $5\times 3$. The matrix $\left(\begin{array}{cc} 5 & 3 \\ 0 & -87 \\ 8 & 0 \end{array}\right)$ has size $3 \times 2$.

Matrices are usually denoted by capital Latin letters: $A$, $B$, $C$ and so on. For example, $B=\left(\begin{array}{cc} 5 & 3 \\ 0 & -87 \\ 8 & 0 \end{array} \right)$. Rows are numbered from top to bottom, columns from left to right. For example, the first row of the matrix $B$ contains the elements 5 and 3, and the second column contains the elements 3, -87, 0.

Elements of matrices are usually denoted by lowercase letters. For example, the elements of the matrix $A$ are denoted by $a_{ij}$. The double index $ij$ encodes the position of the element in the matrix: $i$ is the row number and $j$ is the column number at whose intersection the element $a_{ij}$ stands. For example, at the intersection of the second row and the fifth column of the matrix $A=\left(\begin{array}{cccccc} 51 & 37 & -9 & 0 & 9 & 97 \\ 1 & 2 & 3 & 41 & 59 & 6 \\ -17 & -15 & -13 & -11 & -8 & -5 \\ 52 & 31 & -4 & -1 & 17 & 90 \end{array} \right)$ stands the element $a_{25}=59$.

In the same way, at the intersection of the first row and the first column we have the element $a_{11}=51$; at the intersection of the third row and the second column, the element $a_{32}=-15$, and so on. Note that the entry $a_{32}$ is read “a three two”, not “a thirty-two”.

To denote briefly a matrix $A$ of size $m\times n$, the notation $A_{m\times n}$ is used. The following notation is also common:

$$ A_{m\times n}=(a_{ij}) $$

Here $(a_{ij})$ indicates how the elements of the matrix $A$ are denoted, i.e. it says that the elements of $A$ are written as $a_{ij}$. In expanded form, the matrix $A_{m\times n}=(a_{ij})$ can be written as follows:

$$ A_{m\times n}=\left(\begin{array}{cccc} a_{11} & a_{12} & \ldots & a_{1n} \\ a_{21} & a_{22} & \ldots & a_{2n} \\ \ldots & \ldots & \ldots & \ldots \\ a_{m1} & a_{m2} & \ldots & a_{mn} \end{array} \right) $$

Let's introduce another term: equal matrices.

Two matrices of the same size, $A_{m\times n}=(a_{ij})$ and $B_{m\times n}=(b_{ij})$, are called equal if their corresponding elements are equal, i.e. $a_{ij}=b_{ij}$ for all $i=\overline{1,m}$ and $j=\overline{1,n}$.

Explanation of the notation $i=\overline{1,m}$.

The notation "$i=\overline{1,m}$" means that the parameter $i$ varies from 1 to $m$. For example, the notation $i=\overline{1,5}$ indicates that the parameter $i$ takes the values 1, 2, 3, 4, 5.

So, for matrices to be equal, two conditions must hold: their sizes coincide and the corresponding elements are equal. For example, the matrix $A=\left(\begin{array}{cc} 5 & 3 \\ 0 & -87 \\ 8 & 0 \end{array}\right)$ is not equal to the matrix $B=\left(\begin{array}{cc} 8 & -9 \\ 0 & -87 \end{array}\right)$, because $A$ has size $3\times 2$ and $B$ has size $2\times 2$. Also, $A$ is not equal to the matrix $C=\left(\begin{array}{cc} 5 & 3 \\ 98 & -87 \\ 8 & 0 \end{array}\right)$, since $a_{21}\neq c_{21}$ (i.e. $0\neq 98$). But for the matrix $F=\left(\begin{array}{cc} 5 & 3 \\ 0 & -87 \\ 8 & 0 \end{array}\right)$ we can safely write $A=F$, because both the sizes and the corresponding elements of $A$ and $F$ coincide.
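The two conditions for equality (same size, equal corresponding elements) can be written out explicitly. Below is a minimal Python sketch using the matrices $A$, $B$, $C$, $F$ from the paragraph above, stored as lists of rows; the function names are illustrative. Python's built-in `==` on nested lists performs the same comparison, and the helper only spells out the two conditions.

```python
def same_size(a, b):
    """Same number of rows and, row by row, the same number of columns."""
    return len(a) == len(b) and all(len(ra) == len(rb) for ra, rb in zip(a, b))


def matrices_equal(a, b):
    """Equal sizes and a_ij == b_ij for every i, j."""
    return same_size(a, b) and all(
        x == y for ra, rb in zip(a, b) for x, y in zip(ra, rb)
    )


A = [[5, 3], [0, -87], [8, 0]]
B = [[8, -9], [0, -87]]
C = [[5, 3], [98, -87], [8, 0]]
F = [[5, 3], [0, -87], [8, 0]]
print(matrices_equal(A, B), matrices_equal(A, C), matrices_equal(A, F))  # False False True
```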

Example No. 1

Determine the size of the matrix $A=\left(\begin{array}{ccc} -1 & -2 & 1 \\ 5 & 9 & -8 \\ -6 & 8 & 23 \\ 11 & -12 & -5 \\ 4 & 0 & -10 \end{array} \right)$. Indicate the values of the elements $a_{12}$, $a_{33}$, $a_{43}$.

This matrix contains 5 rows and 3 columns, so its size is $5\times 3$. The notation $A_{5\times 3}$ can also be used for this matrix.

The element $a_{12}$ is at the intersection of the first row and the second column, so $a_{12}=-2$. The element $a_{33}$ is at the intersection of the third row and the third column, so $a_{33}=23$. The element $a_{43}$ is at the intersection of the fourth row and the third column, so $a_{43}=-5$.

Answer: the size is $5\times 3$; $a_{12}=-2$, $a_{33}=23$, $a_{43}=-5$.
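When a matrix is stored as a list of rows in a program, the text's 1-based indices $a_{ij}$ have to be shifted, since Python lists are 0-based. A small sketch reproducing Example No. 1 follows; `size` and `element` are illustrative helper names.

```python
A = [
    [-1, -2, 1],
    [5, 9, -8],
    [-6, 8, 23],
    [11, -12, -5],
    [4, 0, -10],
]


def size(m):
    """(number of rows, number of columns), i.e. m x n."""
    return len(m), len(m[0])


def element(m, i, j):
    """a_ij with 1-based row i and column j, as in the text; lists are 0-based."""
    return m[i - 1][j - 1]


print(size(A), element(A, 1, 2), element(A, 3, 3), element(A, 4, 3))  # (5, 3) -2 23 -5
```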

Types of matrices depending on their size. Main and secondary diagonals. Matrix trace.

Let a matrix $A_{m\times n}$ be given. If $m=1$ (the matrix consists of one row), the matrix is called a row matrix. If $n=1$ (the matrix consists of one column), it is called a column matrix. For example, $\left(\begin{array}{ccccc} -1 & -2 & 0 & -9 & 8 \end{array} \right)$ is a row matrix, and $\left(\begin{array}{c} -1 \\ 5 \\ 6 \end{array} \right)$ is a column matrix.

If the matrix $A_{m\times n}$ satisfies $m\neq n$ (i.e., the number of rows is not equal to the number of columns), then $A$ is often called a rectangular matrix. For example, the matrix $\left(\begin{array}{cccc} -1 & -2 & 0 & 9 \\ 5 & 9 & 5 & 1 \end{array} \right)$ has size $2\times 4$, i.e. it contains 2 rows and 4 columns. Since the number of rows is not equal to the number of columns, this matrix is rectangular.

If the matrix $A_{m\times n}$ satisfies $m=n$ (i.e., the number of rows equals the number of columns), then $A$ is called a square matrix of order $n$. For example, $\left(\begin{array}{cc} -1 & -2 \\ 5 & 9 \end{array} \right)$ is a square matrix of the second order, and $\left(\begin{array}{ccc} -1 & -2 & 9 \\ 5 & 9 & 8 \\ 1 & 0 & 4 \end{array} \right)$ is a square matrix of the third order. In general form, a square matrix $A_{n\times n}$ can be written as follows:

$$ A_{n\times n}=\left(\begin{array}{cccc} a_{11} & a_{12} & \ldots & a_{1n} \\ a_{21} & a_{22} & \ldots & a_{2n} \\ \ldots & \ldots & \ldots & \ldots \\ a_{n1} & a_{n2} & \ldots & a_{nn} \end{array} \right) $$

The elements $a_{11}$, $a_{22}$, $\ldots$, $a_{nn}$ lie on the main diagonal of the matrix $A_{n\times n}$. They are called the main diagonal elements (or simply diagonal elements). The elements $a_{1n}$, $a_{2,n-1}$, $\ldots$, $a_{n1}$ lie on the secondary (side) diagonal; they are called the secondary diagonal elements. For example, for the matrix $C=\left(\begin{array}{cccc} 2 & -2 & 9 & 1 \\ 5 & 9 & 8 & 0 \\ 1 & 0 & 4 & -7 \\ -4 & -9 & 5 & 6 \end{array} \right)$ we have:

The elements $c_{11}=2$, $c_{22}=9$, $c_{33}=4$, $c_{44}=6$ are the main diagonal elements; the elements $c_{14}=1$, $c_{23}=8$, $c_{32}=0$, $c_{41}=-4$ are the secondary diagonal elements.

The sum of the main diagonal elements is called the trace of the matrix and is denoted $\operatorname{Tr} A$ (or $\operatorname{Sp} A$):

$$ \operatorname{Tr} A=a_{11}+a_{22}+\ldots+a_{nn} $$

For example, for the matrix $C=\left(\begin{array}{cccc} 2 & -2 & 9 & 1 \\ 5 & 9 & 8 & 0 \\ 1 & 0 & 4 & -7 \\ -4 & -9 & 5 & 6 \end{array}\right)$ we have:

$$ \operatorname{Tr} C=2+9+4+6=21. $$

The concept of diagonal elements is also used for non-square matrices. For example, for the matrix $B=\left(\begin{array}{ccccc} 2 & -2 & 9 & 1 & 7 \\ 5 & -9 & 8 & 0 & -6 \\ 1 & 0 & 4 & -7 & -6 \end{array} \right)$ the main diagonal elements are $b_{11}=2$, $b_{22}=-9$, $b_{33}=4$.
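The main diagonal, the secondary diagonal and the trace can be extracted with simple index patterns: $a_{kk}$ for the main diagonal and $a_{k,\,n+1-k}$ for the secondary one. A minimal Python sketch with the matrices $C$ and $B$ from above follows; the function names are illustrative, and `trace` and `secondary_diagonal` assume a square matrix.

```python
def main_diagonal(m):
    """a_11, a_22, ...; for a non-square matrix it stops at min(rows, columns)."""
    return [m[k][k] for k in range(min(len(m), len(m[0])))]


def secondary_diagonal(m):
    """a_1n, a_2,n-1, ..., a_n1 (square matrix assumed)."""
    n = len(m)
    return [m[k][n - 1 - k] for k in range(n)]


def trace(m):
    """Tr A = sum of the main diagonal elements (square matrix assumed)."""
    return sum(main_diagonal(m))


C = [[2, -2, 9, 1], [5, 9, 8, 0], [1, 0, 4, -7], [-4, -9, 5, 6]]
B = [[2, -2, 9, 1, 7], [5, -9, 8, 0, -6], [1, 0, 4, -7, -6]]
print(main_diagonal(C), secondary_diagonal(C), trace(C))  # [2, 9, 4, 6] [1, 8, 0, -4] 21
print(main_diagonal(B))                                   # [2, -9, 4]
```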

Types of matrices depending on the values of their elements.

If all elements of the matrix $A_{m\times n}$ are equal to zero, the matrix is called a zero (null) matrix and is usually denoted by the letter $O$. For example, $\left(\begin{array}{cc} 0 & 0 \\ 0 & 0 \\ 0 & 0 \end{array} \right)$ and $\left(\begin{array}{ccc} 0 & 0 & 0 \\ 0 & 0 & 0 \\ 0 & 0 & 0 \end{array} \right)$ are zero matrices.

Consider a non-zero row of the matrix $A$, i.e. a row that contains at least one non-zero element. The leading element of a non-zero row is its first (counting from left to right) non-zero element. For example, consider the following matrix:

$$W=\left(\begin{array}{cccc} 0 & 0 & 0 & 0 \\ 0 & 0 & 0 & 12 \\ 0 & -9 & 5 & 9 \end{array}\right)$$

In the second row the leading element is the fourth element, i.e. $w_{24}=12$, and in the third row the leading element is the second element, i.e. $w_{32}=-9$.

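The matrix $A_{m\times n}=\left(a_{ij}\right)$ is called a step matrix if it satisfies two conditions:

1) if the matrix has zero rows, all of them are located below the non-zero rows;

2) if $a_{1k_1}$, $a_{2k_2}$, ..., $a_{rk_r}$ are the leading elements of the non-zero rows, then $k_1\lt k_2\lt\ldots\lt k_r$.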

Examples of step matrices:

$$ \left(\begin{array}{cccccc} 0 & 0 & 2 & 0 & -4 & 1 \\ 0 & 0 & 0 & 0 & -9 & 0 \\ 0 & 0 & 0 & 0 & 0 & 0 \\ 0 & 0 & 0 & 0 & 0 & 0 \\ 0 & 0 & 0 & 0 & 0 & 0 \end{array}\right);\; \left(\begin{array}{cccc} 5 & -2 & 2 & -8 \\ 0 & 4 & 0 & 0 \\ 0 & 0 & 0 & -10 \end{array}\right). $$

For comparison, the matrix $Q=\left(\begin{array}{ccccc} 2 & -2 & 0 & 1 & 9 \\ 0 & 0 & 0 & 7 & 9 \\ 0 & -5 & 0 & 10 & 6 \end{array}\right)$ is not a step matrix, since the second condition in the definition of a step matrix is violated. The leading elements of the second and third rows, $q_{24}=7$ and $q_{32}=-5$, have column numbers $k_2=4$ and $k_3=2$. For a step matrix the condition $k_2\lt k_3$ must hold, which is violated here. Note that if we swap the second and third rows, we get a step matrix: $\left(\begin{array}{ccccc} 2 & -2 & 0 & 1 & 9 \\ 0 & -5 & 0 & 10 & 6 \\ 0 & 0 & 0 & 7 & 9 \end{array}\right)$.

A step matrix is called trapezoidal if its leading elements $a_{1k_1}$, $a_{2k_2}$, ..., $a_{rk_r}$ satisfy the conditions $k_1=1$, $k_2=2$, ..., $k_r=r$, i.e. the leading elements are diagonal elements. In general, a trapezoidal matrix can be written as follows:

$$ A_{m\times n} =\left(\begin{array}{cccccc} a_{11} & a_{12} & \ldots & a_{1r} & \ldots & a_{1n} \\ 0 & a_{22} & \ldots & a_{2r} & \ldots & a_{2n} \\ \ldots & \ldots & \ldots & \ldots & \ldots & \ldots \\ 0 & 0 & \ldots & a_{rr} & \ldots & a_{rn} \\ 0 & 0 & \ldots & 0 & \ldots & 0 \\ \ldots & \ldots & \ldots & \ldots & \ldots & \ldots \\ 0 & 0 & \ldots & 0 & \ldots & 0 \end{array}\right) $$

Examples of trapezoidal matrices:

$$ \left(\begin{array}{cccccc} 4 & 0 & 2 & 0 & -4 & 1 \\ 0 & -2 & 0 & 0 & -9 & 0 \\ 0 & 0 & 0 & 0 & 0 & 0 \\ 0 & 0 & 0 & 0 & 0 & 0 \\ 0 & 0 & 0 & 0 & 0 & 0 \end{array}\right);\; \left(\begin{array}{cccc} 5 & -2 & 2 & -8 \\ 0 & 4 & 0 & 0 \\ 0 & 0 & -3 & -10 \end{array}\right). $$
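The leading element, the step-matrix conditions and the trapezoidal condition are all stated in terms of the column numbers $k_1, k_2, \ldots$ of the leading elements, which makes them easy to test in code. The Python sketch below assumes matrices given as lists of rows and 1-based column numbers as in the text; `leading_index`, `is_step` and `is_trapezoidal` are illustrative names.

```python
def leading_index(row):
    """1-based column number of the leading (first non-zero) element, or None for a zero row."""
    for k, x in enumerate(row, start=1):
        if x != 0:
            return k
    return None


def is_step(m):
    ks = [leading_index(row) for row in m]
    nonzero = [k for k in ks if k is not None]
    # Condition 1: every zero row comes after all non-zero rows.
    if ks[: len(nonzero)] != nonzero:
        return False
    # Condition 2: k_1 < k_2 < ... < k_r.
    return all(a < b for a, b in zip(nonzero, nonzero[1:]))


def is_trapezoidal(m):
    """Step matrix whose leading elements sit at k_1 = 1, k_2 = 2, ..., k_r = r."""
    nonzero = [k for k in map(leading_index, m) if k is not None]
    return is_step(m) and nonzero == list(range(1, len(nonzero) + 1))


W = [[0, 0, 0, 0], [0, 0, 0, 12], [0, -9, 5, 9]]
Q = [[2, -2, 0, 1, 9], [0, 0, 0, 7, 9], [0, -5, 0, 10, 6]]
Q_swapped = [Q[0], Q[2], Q[1]]
print(leading_index(W[1]), leading_index(W[2]))  # 4 2
print(is_step(Q), is_step(Q_swapped))            # False True
print(is_trapezoidal([[5, -2, 2, -8], [0, 4, 0, 0], [0, 0, -3, -10]]))  # True
```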

Let's give a few more definitions for square matrices. If all elements of a square matrix located below the main diagonal are equal to zero, the matrix is called an upper triangular matrix. For example, $\left(\begin{array}{cccc} 2 & -2 & 9 & 1 \\ 0 & 9 & 8 & 0 \\ 0 & 0 & 4 & -7 \\ 0 & 0 & 0 & 6 \end{array} \right)$ is an upper triangular matrix. Note that the definition of an upper triangular matrix says nothing about the values of the elements located above or on the main diagonal. They can be zero or not; it does not matter. For example, $\left(\begin{array}{ccc} 0 & 0 & 9 \\ 0 & 0 & 0 \\ 0 & 0 & 0 \end{array} \right)$ is also an upper triangular matrix.

If all elements of a square matrix located above the main diagonal are equal to zero, the matrix is called a lower triangular matrix. For example, $\left(\begin{array}{cccc} 3 & 0 & 0 & 0 \\ -5 & 1 & 0 & 0 \\ 8 & 2 & 1 & 0 \\ 5 & 4 & 0 & 6 \end{array} \right)$ is a lower triangular matrix. Note that the definition of a lower triangular matrix says nothing about the values of the elements located below or on the main diagonal. They may be zero or not; it does not matter. For example, $\left(\begin{array}{ccc} -5 & 0 & 0 \\ 0 & 0 & 0 \\ 0 & 0 & 9 \end{array} \right)$ and $\left(\begin{array}{ccc} 0 & 0 & 0 \\ 0 & 0 & 0 \\ 0 & 0 & 0 \end{array} \right)$ are also lower triangular matrices.

A square matrix is called diagonal if all its elements that do not lie on the main diagonal are equal to zero. Example: $\left(\begin{array}{cccc} 3 & 0 & 0 & 0 \\ 0 & -2 & 0 & 0 \\ 0 & 0 & 0 & 0 \\ 0 & 0 & 0 & 6 \end{array}\right)$. The elements on the main diagonal can be anything (zero or not).

A diagonal matrix is called an identity matrix if all elements on its main diagonal are equal to 1. For example, $\left(\begin{array}{cccc} 1 & 0 & 0 & 0 \\ 0 & 1 & 0 & 0 \\ 0 & 0 & 1 & 0 \\ 0 & 0 & 0 & 1 \end{array}\right)$ is the identity matrix of the fourth order, and $\left(\begin{array}{cc} 1 & 0 \\ 0 & 1 \end{array}\right)$ is the identity matrix of the second order.
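Each of these definitions only restricts which positions must hold zeros (or ones), so each becomes a one-line check over index pairs: below the diagonal means $j < i$, above it means $j > i$. The Python sketch below assumes square matrices stored as lists of rows; the function names are illustrative, and the test matrices are the examples from the text.

```python
def is_upper_triangular(m):
    """All elements below the main diagonal (column j < row i) are zero."""
    n = len(m)
    return all(m[i][j] == 0 for i in range(n) for j in range(i))


def is_lower_triangular(m):
    """All elements above the main diagonal (column j > row i) are zero."""
    n = len(m)
    return all(m[i][j] == 0 for i in range(n) for j in range(i + 1, n))


def is_diagonal(m):
    """Zero off the main diagonal, i.e. upper and lower triangular at once."""
    return is_upper_triangular(m) and is_lower_triangular(m)


def is_identity(m):
    """Diagonal with every main-diagonal element equal to 1."""
    return is_diagonal(m) and all(m[k][k] == 1 for k in range(len(m)))


U = [[2, -2, 9, 1], [0, 9, 8, 0], [0, 0, 4, -7], [0, 0, 0, 6]]
L = [[3, 0, 0, 0], [-5, 1, 0, 0], [8, 2, 1, 0], [5, 4, 0, 6]]
D = [[3, 0, 0, 0], [0, -2, 0, 0], [0, 0, 0, 0], [0, 0, 0, 6]]
E4 = [[1 if i == j else 0 for j in range(4)] for i in range(4)]
print(is_upper_triangular(U), is_lower_triangular(U))  # True False
print(is_lower_triangular(L), is_diagonal(D), is_identity(D), is_identity(E4))  # True True False True
```

Building `is_diagonal` from the two triangular checks mirrors the observation that a diagonal matrix is exactly one that is both upper and lower triangular.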



Another New Year's Eve... frosty weather and snowflakes on the window glass... All this prompted me to write again about... fractals, and what Wolfram Alpha knows about it. There is an interesting article on this subject, which contains examples of two-dimensional fractal structures. Here we will look at more complex examples of three-dimensional fractals.

A fractal can be visually represented (described) as a geometric figure or body (both are sets, in this case sets of points) whose details have the same shape as the original figure itself. That is, it is a self-similar structure: examining its details under magnification, we see the same shape as without magnification. In the case of an ordinary geometric figure (not a fractal), on the other hand, magnification reveals details that have a simpler shape than the original figure. For example, at a high enough magnification, part of an ellipse looks like a straight line segment. This does not happen with fractals: at any magnification we again see the same complex shape, repeated again and again with each further magnification.

Benoit Mandelbrot, the founder of the science of fractals, wrote in his article Fractals and Art in the Name of Science: “Fractals are geometric shapes that are as complex in their details as in their overall form. That is, if a part of a fractal is enlarged to the size of the whole, it will look like the whole, either exactly or perhaps with a slight deformation.”