GTX 295 in modern games. Overview of the dual-chip ZOTAC GeForce GTX 295 video card

The new fastest graphics accelerator, with a cooling system that is not too noisy and... a good bundle.

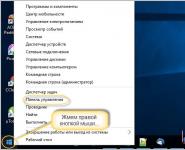

To “climb the mountain again”, NVIDIA moved its top GT200 GPU to a finer 55 nm manufacturing process. The new GT200b chip is architecturally unchanged but runs cooler, which made it possible to release a faster version of the single-chip GTX280, the GTX285 (covered in our review of the ZOTAC GeForce GTX 285 AMP! Edition). That was not enough to dethrone the Radeon HD4870X2, so the dual-chip GTX295, based on two slightly cut-down GT200b chips, was released alongside the GTX285. The resulting accelerator, whose name gives no hint that it is "two-headed", should deliver unsurpassed performance.

To begin with, let's give a comparative table showing the history of the development of two-chip NVIDIA video cards and analogues from the direct competitor AMD-ATI.

| | GeForce 9800 GX2 | GeForce GTX 295 | Radeon HD 3870 X2 | Radeon HD 4870 X2 |
|---|---|---|---|---|
| Graphics chip | | GT200-400-B3 | | |
| Core frequency, MHz | | | | |
| Frequency of unified processors, MHz | | | | |
| Number of unified processors | | | | |
| Number of texture addressing and filtering blocks | | | | |
| Number of ROPs | | | | |
| Memory size, MB | | | | |
| Effective memory frequency, MHz | 2000 | 2000 | 2250 | 3600 |
| Memory type | | | | |
| Memory bus width, bit | | | | |
| Manufacturing process | | | | |
| Power consumption, W | | up to 289 | | |

Let's start by summarizing and refining the GTX 295 specification.

| Parameter | Value |
|---|---|
| Manufacturer | ZOTAC |
| Model | ZT-295E3MA-FSP (GTX 295) |
| Graphics core | NVIDIA GTX 295 (GT200-400-B3) |
| Stream processors | 480 unified |
| Supported APIs | DirectX 10.0 (Shader Model 4.0), OpenGL 2.1 |
| Core (shader domain) frequency, MHz | 576 (1242) |
| Memory size (type), MB | 1792 (GDDR3) |
| Memory frequency (effective), MHz | 999 (1998 DDR) |
| Memory bus, bit | 2x448 |
| Bus standard | PCI Express 2.0 x16 |
| Maximum resolution | Up to 2560 x 1600 in Dual-Link DVI mode; up to 2048 x 1536 @ 85 Hz over analog VGA; up to 1920x1080 (1080i) via HDMI |
| Outputs | 2x DVI-I (VGA via adapter), 1x HDMI |
| HDCP support | Yes |
| HD video decoding | H.264, VC-1, MPEG2 and WMV9 |
| Drivers | Latest drivers can be downloaded from the support site or the GPU manufacturer's website. |
| Product webpage | http://www.zotac.com/ |

The video card comes in a cardboard box of exactly the same shape as that of the GTX 285 AMP! Edition, with a slightly different design in the same black and gold colors. Besides the name of the graphics processor, the front lists 1792 MB of GDDR3 video memory, an 896-bit memory bus, hardware support for the new NVIDIA PhysX physics API, and a built-in HDMI video interface.

The back of the package lists the card's general features, with brief explanations of the benefits of NVIDIA PhysX, NVIDIA SLI, NVIDIA CUDA and 3D Vision.

All this information is briefly duplicated on one of the sides of the box.

The other side of the package lists the exact clock frequencies and the operating systems supported by the drivers.

A little lower, the minimum requirements for a system that will host the “gluttonous” GTX 295 are spelled out. The future owner of such a powerful graphics accelerator will need a power supply rated for at least 680 W that can deliver up to 46 A on the 12 V line. The power supply must also provide the required PCI Express power connectors: one 8-pin and one 6-pin.
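As a rough illustration of what these box requirements imply, the 12 V figure can be converted to watts with P = U × I. The snippet below is only a sketch: the constant and function names are our own, and the only inputs are the 680 W and 46 A values quoted from the packaging.

```python
# Rough 12 V budget implied by the requirements printed on the box.
RAIL_VOLTAGE_V = 12.0
RAIL_CURRENT_A = 46.0   # required 12 V current, from the packaging
PSU_RATING_W = 680.0    # recommended PSU rating, from the packaging


def rail_power_w(voltage_v: float, current_a: float) -> float:
    """Power available on a rail: P = U * I."""
    return voltage_v * current_a


rail_w = rail_power_w(RAIL_VOLTAGE_V, RAIL_CURRENT_A)
share = rail_w / PSU_RATING_W
print(f"12 V rail: {rail_w:.0f} W ({share:.0%} of the PSU rating)")
```

In other words, the recommendation is less about the 680 W total than about a power supply that can deliver roughly 550 W on the 12 V line alone.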

By the way, the new packaging is much more convenient to use than the previously used ones. It allows you to quickly access the contents, which is convenient both when you first build the system and when you change the configuration, for example, if you need some kind of adapter or maybe a driver disk when you reinstall the system.

The delivery set is quite sufficient for installing and using the accelerator, and in addition to the video adapter itself, it includes:

- disk with drivers and utilities;

- CD with bonus game "Race Driver: GRID";

- disc with 3DMARK VANTAGE;

- paper manuals for quick installation and use of the video card;

- branded sticker for the PC case;

- adapter from 2x peripheral power connectors to 6-pin PCI-Express;

- adapter from 2x peripheral power connectors to 8-pin PCI-Express;

- adapter from DVI to VGA;

- HDMI cable;

- cable for connecting the sound card's SPDIF output to the video card.

The video card owes its distinctive appearance to its design. This is striking at first glance: an almost rectangular accelerator completely enclosed in a shroud, with a slot through which you can see the cooling system's turbine hidden somewhere in the depths.

The reverse side of the video card looks a little simpler. This is due to the fact that, unlike the GeForce 9800 GX2, there is no second half of the casing here. This, it would seem, indicates a greater predisposition of the GTX 295 graphics accelerators to disassembly, but as it turned out later, everything is not so simple and safe.

On top of the video card, almost at the very edge, there are additional power connectors, and due to the rather high power consumption, you need to use one 6-pin and one 8-pin PCI Express.

Next to the power connectors there is a SPDIF digital audio input, which should provide mixing of the audio stream with video data when using the HDMI interface.

Further, a cutout is made in the casing, through which heated air is ejected directly into the system unit. This will clearly worsen the climate inside it, because, given the power consumption of the video card, the cooling system will blow out a fairly hot stream.

Near the external interface panel there is an SLI connector, which lets you combine two such powerful accelerators in an uncompromising Quad SLI configuration.

Image output is handled by two DVI ports, which can be converted to VGA with adapters, and a high-definition HDMI multimedia output. There are two LEDs next to the upper video outputs. The first shows the current power status: green if power is sufficient, red if one of the connectors is not plugged in or an attempt was made to power the card from two 6-pin PCI Express connectors. The second indicates the DVI output to which the primary monitor should be connected. A little lower is a ventilation grille through which part of the heated air is blown out.

However, disassembling the video card turned out to be easier than it was with the 9800 GX2. First remove the top shroud and the ventilation grille from the interface panel, then simply unscrew a dozen and a half spring-loaded screws on each side of the video card.

Inside this "sandwich" is a single cooling system made up of several copper heat sinks and copper heat pipes with many aluminum fins strung on them. All of this is blown through by a fairly powerful and, accordingly, not very quiet turbine.

On either side of the cooling system sits a printed circuit board, each of which is one half of the card: it carries one GPU with its own video memory and power circuitry, plus auxiliary chips. Note that the 6-pin auxiliary power connector is located on the half that is more densely populated with chips, which is logical, because the PCI Express slot on the same board supplies up to another 75 W.

The boards are interconnected by special flexible SLI bridges. Both the bridges and their connectors are very delicate, so we do not recommend opening your expensive video card unless it is really necessary.

But bridges alone are not enough for the two halves of the GTX 295 to work in harmony with the rest of the system. In an ordinary SLI pair of video cards, this job is done by the chipset or, increasingly, by the NVIDIA nForce 200 companion chip with PCI Express 2.0 support. It is the NF200-P-SLI PCIe switch that is used in the GTX 295.

The boards also have two NVIO2 chips that are responsible for the video outputs: the first one provides support for a pair of DVI, and the second one for one HDMI.

It is thanks to the presence of the second chip that multi-channel audio is mixed from the SPDIF input to a convenient and promising HDMI output.

The video card is based on two NVIDIA GT200-400-B3 chips. Unfortunately, there was not enough room on the printed circuit boards for a few more memory chips, so the memory bus of the full-fledged GT200-350 was cut from 512 to 448 bits, which in turn reduced the number of ROPs to 28, as in the GTX260. On the other hand, there are 240 unified processors, as in a full-fledged GTX285. The GPU itself runs at 576 MHz, and the shader pipelines at 1242 MHz.

The 1792 MB of video memory is made up of Hynix H5RS5223CFR-N0C chips, which at an operating voltage of 2.05 V have an access time of 1.0 ns, i.e. are rated for an effective frequency of 2000 MHz. That is the frequency they run at, leaving no headroom for overclockers.
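The link between a memory chip's rated cycle time and its effective frequency is simple arithmetic, sketched below. The sketch assumes the rated figure is a cycle time in nanoseconds and that GDDR3 transfers data twice per clock; the function names are ours, and the 999 MHz actual clock is taken from the specification table.

```python
def clock_mhz_from_cycle_ns(cycle_ns: float) -> float:
    """A cycle time of t nanoseconds corresponds to a clock of 1000 / t MHz."""
    return 1000.0 / cycle_ns


def effective_ddr_mhz(clock_mhz: float) -> float:
    """Double data rate memory transfers data twice per clock."""
    return 2.0 * clock_mhz


rated_clock = clock_mhz_from_cycle_ns(1.0)       # rated chip clock, MHz
rated_effective = effective_ddr_mhz(rated_clock)  # rated effective frequency, MHz
actual_effective = effective_ddr_mhz(999.0)       # card's memory clock from the spec table
headroom = rated_effective - actual_effective

print(f"rated: {rated_effective:.0f} MHz, actual: {actual_effective:.0f} MHz, "
      f"headroom: {headroom:.0f} MHz")
```

Under these assumptions the chips already run within a couple of megahertz of their rating, which is why no overclocking margin is left on paper.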

Cooling System Efficiency

In a closed but well-ventilated test case, we could not get the turbine to spin up to maximum speed automatically. Even at 75% of the cooler's capacity, though, it was far from quiet. Under these conditions, the GPU located closer to the turbine heated up to 79ºC, and the second one to 89ºC. Given the overall power consumption, these values are still far from critical overheating. Thus, we can note the good efficiency of the cooler used. If only it were a little quieter...

At the same time, acoustic comfort was disturbed not only by the cooling system - the power stabilizer on the card also did not work quietly, and its high-frequency whistle cannot be called pleasant. True, in a closed case, the video card became quieter, and if you play with sound, then good speakers will completely hide its noise. It will be worse if you decide to play in the evening with headphones, and next to you someone is already going to rest. But that's exactly the price you pay for a very high performance.

Testing

| Component | Model |
|---|---|
| CPU | Intel Core 2 Quad Q9550 (LGA775, 2.83GHz, L2 12MB) @3.8GHz |
| Motherboards | NForce 790i-Supreme (LGA775, nForce 790i Ultra SLI, DDR3, ATX); GIGABYTE GA-EP45T-DS3R (LGA775, Intel P45, DDR3, ATX) |
| Coolers | Noctua NH-U12P (LGA775, 54.33 CFM, 12.6-19.8 dB); Thermalright SI-128 (LGA775) + VIZO Starlet UVLED120 (62.7 CFM, 31.1 dB) |
| Additional cooling | VIZO Propeller PCL-201 (+1 slot, 16.0-28.3 CFM, 20 dB) |
| RAM | 2x DDR3-1333 1024MB Transcend PC3-10600 |
| Hard drive | Hitachi Deskstar HDS721616PLA380 (160 GB, 16 MB, SATA-300) |
| Power supplies | CHIEFTEC CFT-850G-DF (850W, 140+80mm, 25dB); Seasonic SS-650JT (650W, 120mm, 39.1dB) |
| Case | Spire SwordFin SP9007B (Full Tower) + Coolink SWiF 1202 (120×120x25, 53 CFM, 24 dB) |
| Monitor | Samsung SyncMaster 757MB (DynaFlat, 2048× [email protected] Hz, MPR II, TCO'99) |

With as many stream processors as a pair of GTX280s but other characteristics closer to a 2-Way SLI pair of GTX260s, the dual-chip video card performs right between these two pairs and only occasionally beats every possible competitor. But since it does not require SLI support from the motherboard and should cost less than two GTX280s or GTX285s, it can be considered a really promising high-performance solution for true gamers.

Energy consumption

Our power supply tests have repeatedly shown that the system requirements of high-performance components often overstate the power needed, so we decided to check whether the GTX 295 really requires 680 W. At the same time, we compare the power consumption of this graphics accelerator with other video cards and multi-card setups, paired with a quad-core Intel Core 2 Quad Q9550 overclocked to 3.8 GHz.

[Chart: power consumption, W]

We are grateful to ELKO Kiev, the official distributor of Zotac International in Ukraine, for the video cards provided for testing.

I remember that back in 2000 we dreamed of dual-processor accelerators, and even mentally imagined this (based on two Geforce2 GTS, which is in the collage above), because at the beginning of that year everyone was looking forward to a dual-processor monster from 3dfx, but at the same time, advanced users understood that two Geforce2 would be clearly faster. :-))

But let's leave the lyrics and nostalgia and return to our days. Almost six months after the release of the RADEON HD 4870 X2, Nvidia finally decided to release a dual-chip video card based on the GPU of its modern GT2xx architecture. On the one hand, the decision is understandable because if we consider not only single-chip video cards, then the best representatives of the company lagged behind the competing dual-chip AMD solutions, which the market leader cannot afford.

After all, look at what has been happening on the market since August of last year. AMD, following its concept of single-chip mid-range solutions and dual-chip high-end video cards, and taking advantage of chips that are smaller, less complex and made on a more advanced process, introduced its dual-chip HD 4870 X2 soon after the single-chip versions. From August 2008 on, it was this model that was the fastest video card available on the market (although we will return to the peculiarities of multi-chip rendering in our articles).

Nvidia then had no choice but to pit a system with two Geforce GTX 280 or GTX 260 cards against a single HD 4870 X2, and 3-Way SLI on three GTX 280 cards against a pair of HD 4870 X2. In other words, with no way to release a more powerful single-chip solution, a card such as the GTX 295 suggested itself.

On the other hand, the release of such a video card right now seems somewhat untimely to us. After all, AMD has been the leader for several months now with its RADEON HD 4870 X2, and this information has successfully settled in the minds of users interested in the 3D industry. And according to rumors, in a few months, in the second quarter of this year, new models of video cards from Nvidia are expected to be released based on the updated GPU architecture, which are unlikely to be faster than the GTX 295 in benchmarks.

That is, it turns out that the card is too late to compete with the HD 4870 X2, and this dual-chip card, as the GeForce 9800 GX2 once did, may get in the way of the company's own future solutions. This model is also unlikely to improve the company's financial position: the cost of the solution is clearly high, and the price cannot be raised much (and has not been raised, see below). Besides, the Christmas sales season is over. It would be another matter if the GTX 295 had been released in November, for example... Then this model would clearly have made more sense. And spring will come soon, along with new single-chip models.

However, the future is unknown to us, let's not get ahead of ourselves. We have outlined our vision of the situation, and let's see how it turns out. We believe that the GTX 295 is more of a fashion solution, designed to show that Nvidia is strong, and not really change the situation in the market, increasing sales and profits. Well, that also makes some sense. Just so users don't wonder again why the next single-chip cards will perform worse in benchmarks compared to the dual-chip GTX 295, as happened earlier with the GTX 280 and 9800 GX2.

Indeed, unlike AMD, which already produces exclusively multi-chip solutions for the upper price range, Nvidia does not seem to be going to abandon the single-chip future of its top video cards. However, let's repeat once again that the solution considered today is designed to take the crown of leadership in benchmarks from AMD RADEON HD 4870 X2, and this is its main goal.

Naturally, it was simply impossible to create such a solution with 65 nm chips: even a single Geforce GTX 280 with one GT200 chip (1.4 billion transistors and a die area of about 576 sq. mm) consumes more than 200 W! And since moving the GT200 to the 55 nm process took Nvidia too long, the release of the dual-chip solution was delayed as well.

So, Geforce GTX 295 is based on two GT200b chips, produced according to the 55 nm process technology, they have no differences from the 65 nm predecessor, except for a smaller chip area and reduced power consumption. But this transition to 55nm allowed the card based on two large and powerful GPUs to consume less than 300W, while maintaining the level of heat dissipation that the updated dual-slot cooling system can handle.

The theoretical part about the GeForce GTX 295 will be short, because these are two ordinary GT200b chips, albeit made according to the new 55 nm production standards, installed on two connected printed circuit boards. The two-chip system works according to SLI technology, PCI Express lines and the corresponding bridge are made on the board. And a significant difference compared to systems based on two Geforce GTX 280 or 260 will be only different operating frequencies of the chip and memory, memory size and bus width, as well as the configuration of GPU units. These are all quantitative, but not qualitative differences.

If you are not yet familiar with the Geforce GTX 200 (GT200) architecture, then you can read all the details about it in the basic review on our website. This is a further development of the G8x / G9x architecture, but some changes have been made to it. Also, before reading this material, we recommend that you carefully read the basic theoretical materials DX Current, DX Next and Longhorn, which describe various aspects of modern hardware graphics accelerators and the architectural features of previous Nvidia and AMD products.

These materials predicted the current state of video chip architectures quite accurately, and many assumptions about future solutions proved correct. Detailed information about the unified Nvidia G8x/G9x/GT2xx architectures, using previous solutions as examples, can be found in our earlier articles.

So, let's assume that all readers are already familiar with the architecture, and consider the detailed characteristics of a dual-chip video card of the Geforce GTX 200 series based on GT200b chips made using a 55 nm process technology.

Graphic accelerator Geforce GTX 295

- Chip Codename GT200b

- 55 nm production technology

- Two chips with 1.4 billion transistors

- Unified architecture with an array of common processors for vertex and pixel streaming, and other kinds of data

- Hardware support for DirectX 10, including shader model - Shader Model 4.0, geometry generation and recording intermediate data from shaders (stream output)

- Two 448-bit memory buses, seven (out of eight) independent 64-bit wide controllers

- Core frequency 576 MHz

- ALU frequency of 1242 MHz, more than double the core frequency

- 2 × 240 scalar floating point ALUs (integer and float formats, support for FP32 and FP64 precision within the IEEE 754(R) standard, two MAD+MUL operations per clock)

- 2 × 80 texture addressing and filtering units with support for FP16 and FP32 components in textures

- Possibility of dynamic branching in pixel and vertex shaders

- 2 × 7 wide ROPs (2 × 28 pixels) with support for anti-aliasing modes up to 16 samples per pixel, including with FP16 or FP32 framebuffer format. Each block consists of an array of flexibly configurable ALUs and is responsible for generating and comparing Z, MSAA, blending. Peak subsystem performance up to 224 MSAA samples (+ 224 Z) per clock, in colorless mode (Z only) - 448 samples per clock

- Writing results to up to 8 frame buffers simultaneously (MRT)

- Interfaces (two RAMDACs, two Dual Link DVI, HDMI, DisplayPort, HDTV) integrated on a separate chip

Geforce GTX 295 reference graphics card specifications

- Core frequency 576 MHz

- Frequency of universal processors 1242 MHz

- Number of universal processors 480 (2 × 240)

- Number of texture units 160 (2 × 80), blending units 56 (2 × 28)

- Effective memory frequency 2000 (2 × 1000) MHz

- Memory type GDDR3

- Memory capacity 1792 (896 × 2) megabytes

- Memory bandwidth 2 × 112 GB/s

- Theoretical maximum fill rate is 2 × 16.1 gigapixels per second.

- Theoretical texture sampling rate is 2 × 46.1 gigatexels per second.

- Two DVI-I Dual Link connectors, supports output at resolutions up to 2560x1600

- Single SLI connector

- PCI Express 2.0 bus

- TV-Out, HDTV-Out, HDCP support, HDMI, DisplayPort

- Power consumption up to 289 W (8-pin and 6-pin connectors)

- Dual slot design

- MSRP $499
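The bandwidth, fill rate and texture rate figures in this list follow directly from the clocks, bus width and unit counts. The short sketch below reproduces them per GPU; the helper names are ours, and it assumes one pixel per ROP and one texel per texture unit per core clock, with 1 GB taken as 10^9 bytes.

```python
# Theoretical per-GPU throughput derived from the reference specifications.
CORE_MHZ = 576            # core (ROP/TMU) clock
MEM_EFFECTIVE_MHZ = 2000  # effective GDDR3 data rate
BUS_WIDTH_BITS = 448      # memory bus width per GPU
ROPS = 28                 # pixels written per clock (assumed 1 per ROP)
TMUS = 80                 # texels sampled per clock (assumed 1 per unit)


def memory_bandwidth_gb_s(bus_bits: int, effective_mhz: float) -> float:
    """Bytes per transfer times transfers per second, in GB/s."""
    return bus_bits / 8 * effective_mhz * 1e6 / 1e9


def rate_giga_per_s(units: int, clock_mhz: float) -> float:
    """Units that each do one operation per clock, in billions per second."""
    return units * clock_mhz * 1e6 / 1e9


bandwidth = memory_bandwidth_gb_s(BUS_WIDTH_BITS, MEM_EFFECTIVE_MHZ)
fill_rate = rate_giga_per_s(ROPS, CORE_MHZ)
texel_rate = rate_giga_per_s(TMUS, CORE_MHZ)

print(f"bandwidth: 2 x {bandwidth:.0f} GB/s")
print(f"fill rate: 2 x {fill_rate:.1f} Gpixel/s")
print(f"texture rate: 2 x {texel_rate:.1f} Gtexel/s")
```

The factor of two is kept separate on purpose: each GPU has its own memory and its own units, so the totals are only reachable when both chips are kept fully busy.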

So, producing the GT200b on the 55 nm process has allowed Nvidia to release a very powerful dual-chip solution that is also energy efficient in 2D and 3D modes compared to its main competitor, the RADEON HD 4870 X2. The new Nvidia graphics card delivers better performance at comparable power consumption. This is all the more unexpected because GT200b chips, even though they are made on the same 55 nm process as the RV770, are much larger and more complex (in transistor count). Either the frequencies of the final GT200b revisions were lowered compared to the planned ones, or the chips were specifically designed for better energy efficiency.

As you can see, Nvidia decided to release the dual-chip card under the same GTX prefix, changing only the model number. Of course, it is up to them to decide, but we would find it more logical to release such a model under some other name, like GX2 290 or G2X 290. Even SLI 290 would be clearer to people. The name the company chose does not say that the card is dual-chip and does not emphasize this difference, which, in our opinion, is not very good from the buyer's point of view, as it is confusing.

A few words must be said about the amount of video memory, which was a forced choice. The decision to settle for a 448-bit bus and 896 MB of memory per video chip was apparently driven by the need to simplify the PCB layout. The result is a somewhat unusual amount of memory and, more importantly, less of it than on the competing RADEON HD 4870 X2. And although the difference between 896 and 1024 is quite small and in practice will not affect performance much, from a marketing point of view this is not great either: if only nominally, by one of the numbers (much beloved in marketing!) the solution turns out to be "worse" than the competitor.

Architecture and features of the solution

It is simply impossible to tell anything interesting here, the GT200b chips are the same GT200 we know, just smaller and more efficient in terms of energy consumption. The GT200 architecture was announced last summer, and given that this is an improved G8x/G9x architecture, even earlier in 2006. The main difference between the G92 chip and the G80 was the 65 nm production technology, the innovations of the GT200 are mainly quantitative, and the GT200b is the same GT200. All this is described in detail in our previous materials.

Earlier, information appeared on the Web that a dual-chip card on the GT200b will consist of two GT200s with the same number of execution units as a pair of Geforce GTX 260. But Nvidia decided to install full-fledged GT200 chips with 240 ALUs each, as well as 80 texture units on the GTX 295. However, the memory configuration is kept from the GTX 260, which means 448-bit bus and 896 MB GDDR3 memory per chip. Just like the GTX 260, each of the two chips in the GTX 295 uses only seven of the eight wide ROPs on the chip, for a total of 56 ROPs.

The chip's clock speeds fully correspond to the frequencies of single Geforce GTX 260. The frequency of the GPU, TMU and ROP units is 576 MHz, and the stream computing processors operate at a frequency of 1242 MHz. GDDR3 memory is clocked at 1000(2000) MHz.

Like Nvidia's previous dual-chip solution, the Geforce 9800 GX2, the new Geforce GTX 295 uses a dual-board design, chosen for its advantages over other layouts. In this design each of the two GPUs sits on its own PCB. This has the following benefits: each chip heats only its own PCB, and a single cooler cools both chips at once, unlike the Geforce 7950 GX2, which used two coolers. The cooler has been improved since the 9800 GX2 so that it can dissipate almost one and a half times as much heat as the previous dual-chip model's.

The maximum power consumption of the Geforce GTX 295 is 289 W, which is quite comparable with the figure of the competing RADEON HD 4870 X2, equal to 286 W. The card requires two power connectors: 6-pin and 8-pin, and the recommended minimum system power supply for a single Geforce GTX 295 is 680 W.
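The choice of one 6-pin and one 8-pin connector can be checked against the usual PCI Express power limits. The sketch below assumes the standard limits of 75 W from the x16 slot, 75 W from a 6-pin connector and 150 W from an 8-pin connector; the names are ours, and 289 W is the maximum consumption quoted for the card.

```python
# Assumed PCI Express power limits, in watts.
SLOT_W = 75
SIX_PIN_W = 75
EIGHT_PIN_W = 150

CARD_MAX_W = 289  # maximum power consumption quoted for the GTX 295


def available_w(*connectors_w: int) -> int:
    """Total budget: the slot plus every auxiliary connector plugged in."""
    return SLOT_W + sum(connectors_w)


reference = available_w(SIX_PIN_W, EIGHT_PIN_W)  # 6-pin + 8-pin, as required
two_six_pin = available_w(SIX_PIN_W, SIX_PIN_W)  # two 6-pin connectors instead

print(f"6-pin + 8-pin: {reference} W, margin {reference - CARD_MAX_W} W")
print(f"two 6-pin:     {two_six_pin} W, shortfall {CARD_MAX_W - two_six_pin} W")
```

With these limits the required pair of connectors covers the card with only a small margin, while two 6-pin connectors would fall well short of the quoted maximum.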

Let's talk about the features of a multi-chip bundle, although there are not many of them. Between the two GPUs, as in the case of all previous solutions based on a pair of video chips, a switch chip is installed that connects the GPUs. In this case, the nForce 200 (BR-04) chip was used, which supports the required number of lines for three PCI-E ports with support for version 2.0. For communication with each chip, 16 PCI-E 2.0 lines are assigned, and the same number for data transfer between the motherboard and the video card.

Such a PCI-E bridge was installed on the company's previous two-chip solution, and nForce 200 is also known for being offered as an alternative solution for supporting SLI technology in motherboards based on the Intel X58 chipset.

In addition to the fact that Geforce GTX 295 itself works as a two-chip system, the possibilities of SLI technology allow you to combine two such boards in one system. This configuration is called Quad SLI. The modern implementation of Quad SLI for all four chips uses a pure AFR mode, when four frames are processed in parallel. In this case, the frame rate grows quite strongly, up to 80-90% for every doubling of the number of chips.
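To get a feel for what "80-90% for every doubling" means for four chips, the gain can be compounded per doubling. This is only an idealized sketch with a hypothetical function name; it assumes the same relative gain applies at each doubling and ignores CPU and driver limits.

```python
import math


def afr_speedup(chips: int, gain_per_doubling: float) -> float:
    """Frame rate relative to one chip, compounding the gain once per doubling."""
    doublings = math.log2(chips)
    return (1.0 + gain_per_doubling) ** doublings


for gain in (0.8, 0.9):
    dual = afr_speedup(2, gain)
    quad = afr_speedup(4, gain)
    print(f"gain {gain:.0%}: 2 chips x{dual:.2f}, 4 chips x{quad:.2f}")
```

Under these assumptions, Quad SLI would reach roughly 3.2 to 3.6 times the frame rate of a single chip rather than a full fourfold increase.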

A separate article is being prepared about the disadvantages of multi-chip rendering, in which we will try to describe in detail all the features associated with the operation of the AFR mode. This applies both to input lags, which are not significantly reduced with a significant increase in average FPS, and to low minimum FPS and frame rate unevenness. All these little things may not be too noticeable on a two-chip system, but on a four-chip one they can already cause more inconvenience - the average frame rate increases, but the game does not become so comfortable.
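Why average FPS can rise while the game does not feel more responsive can be shown with a deliberately simplified model of AFR. It assumes each chip needs the same time to render one whole frame and that scaling is perfect; the function names and the 40 ms figure are illustrative assumptions, not measurements.

```python
def afr_frame_interval_ms(render_ms: float, gpus: int) -> float:
    """With ideal AFR, finished frames arrive 'gpus' times more often."""
    return render_ms / gpus


def afr_average_fps(render_ms: float, gpus: int) -> float:
    """Average frame rate implied by the interval between finished frames."""
    return 1000.0 / afr_frame_interval_ms(render_ms, gpus)


def afr_min_latency_ms(render_ms: float, gpus: int) -> float:
    """Each frame is still rendered by one chip, so its latency does not shrink."""
    return render_ms


RENDER_MS = 40.0  # hypothetical time for one chip to render one frame

for gpus in (1, 2, 4):
    fps = afr_average_fps(RENDER_MS, gpus)
    latency = afr_min_latency_ms(RENDER_MS, gpus)
    print(f"{gpus} chip(s): {fps:.0f} FPS average, at least {latency:.0f} ms per frame")
```

Even in this ideal case the counter goes from 25 to 100 FPS while every individual frame still takes 40 ms to produce, so the input lag stays at the single-chip level.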

Support for external interfaces in the Geforce GTX 295 differs little from what we saw in previous solutions on the same GPUs. The one difference is that the separate NVIO2 input-output chip, which Nvidia boards use to handle external interfaces outside the main GPU, is present here in duplicate.

The Geforce GTX 295 video card has two Dual Link DVI outputs with HDCP support, as well as one HDMI output. The DVI outputs carry the image from the first GPU, and the second GPU drives the single HDMI output. With the latest drivers, output to two DVI ports is supported in SLI mode; to use all three outputs at the same time, SLI mode must be disabled. To output audio via HDMI, the board has an SPDIF input, as is traditional for Nvidia solutions, to which an audio source must be connected.

Naturally, the Geforce GTX 295 also supports Nvidia PhysX technology, which is starting to see active use in games such as the recently released Cryostasis and the upcoming Mirror's Edge. Moreover, the Geforce GTX 295 can work both in SLI mode, processing physics effects and rendering frames simultaneously, and in a mode where one chip handles 3D rendering and the other is dedicated to PhysX calculations.

To conclude the theoretical part, let us remind readers of the question we asked in the basic article about the RADEON HD 4870 X2. At the time, we wrote that AMD was pointing out the problems inherent in large high-end chips (clearly hinting at Nvidia's GT200), and among these problems was the excessive power consumption of such GPUs. We were surprised back then that AMD's dual-chip solutions (for example, the HD 4850 X2) showed no such advantage over Nvidia's single-chip GTX 280, and we remain perplexed now: where did the supposed advantage in power consumption go? Especially in the case of the HD 4870 X2, now that a dual-chip solution based on the 55 nm GT200b has been released. Nvidia's chips are one and a half times more complex, yet consume no more than the RV770...

This concludes the theoretical part, we have long known the whole theory about the GT200 architecture and the basics of SLI operation. And now let's move on to the next part of the article, where we are waiting for the practical part of the study of a new solution based on two GT200b chips in synthetic tests, and comparing its performance with the speed of other solutions from Nvidia, as well as a competing AMD video card.

About six months ago, AMD released its most powerful video card, the AMD Radeon 4870x2, which for a long time was the leader among single video cards. It combined two RV770 chips, very high temperatures, rather high noise levels and, most importantly, the highest gaming performance among single video cards. Only now, at the beginning of 2009, has NVIDIA finally responded with a new video card meant to become the new leader among single-card solutions. The new card is based on the 55 nm GT200 chip and has 2x240 stream processors, but there is one “but”: a narrower memory bus (448 bits vs. 512 for the GTX280), fewer ROPs (28 vs. 32 for the GTX280) and a lower memory frequency (2 GHz versus 2.21 GHz for the GTX280). Today I want to show you, dear readers, how fast the new top card is, what its advantages and disadvantages are, and, of course, compare its speed with its main competitor, the AMD 4870x2.

Overview of the Palit video card GeForce GTX 295

The Palit GeForce GTX295 came into our test lab as an OEM product that only included a Windows XP/Vista driver disk. Let's look at the video card itself:


The video card is about 270 mm long, which is standard for top-level video cards, and is equipped with two DVI-I (dual link) ports and an HDMI port, like its predecessor, the GeForce 9800GX2:

But there are some changes: the DVI-I ports are now located on the right, and the hot air exhaust grille has become narrower and longer, which should clearly improve the cooling of the video card. Not all of the hot air is thrown out of the case: as with the 9800GX2, most of it remains inside. The fins of the cooling system's radiator are visible in the upper part of the video card.

In order to “climb the mountain again”, NVIDIA has switched its “top” GT200 GPU to a thinner manufacturing process - 55 nm. The new GT200b chip architecturally remained the same, but became less hot, which made it possible to release an accelerated version of the single-chip GeForce GTX 280 - the GeForce GTX 285 video card (it was discussed in the review ZOTAC GeForce GTX 285 AMP! Edition). But this was not enough to overthrow the Radeon HD 4870 X2, and simultaneously with the GeForce GTX 285, the dual-chip GeForce GTX 295 was released, based on two slightly simplified GT200b chips. The resulting accelerator, in the name of which there is no hint of "double-headedness", should provide unsurpassed performance.

To begin with, let's give a comparative table showing the history of the development of two-chip NVIDIA video cards and analogues from the direct competitor AMD-ATI.

| | GeForce 9800 GX2 | GeForce GTX 295 | Radeon HD 3870 X2 | Radeon HD 4870 X2 |
|---|---|---|---|---|
| Graphics chip | | GT200-400-B3 | | |
| Core frequency, MHz | | | | |
| Frequency of unified processors, MHz | | 1242 | | |
| Number of unified processors | | | | |
| Number of texture addressing and filtering blocks | | | | |
| Number of ROPs | | | | |
| Memory size, MB | | | | |
| Effective memory frequency, MHz | 2000 | 2000 | 2250 | 3600 |
| Memory type | | | | |
| Memory bus width, bit | | | | |
| Technical process of production | | | | |
| Power consumption, W | | up to 289 | | |

Judging by its characteristics, the GeForce GTX 295 sits between SLI configurations of the GeForce GTX 280 and GeForce GTX 260, with a transition to the 55 nm process technology that made it possible to keep power consumption at the level of the competitor's solution. Only testing can show how effective such an "alloy" is, so let's get acquainted with one of the new cards.

Video card ZOTAC GeForce GTX 295

Let's start by summarizing and clarifying the ZOTAC GeForce GTX 295 specification.

| Manufacturer | ZOTAC |
|---|---|
| Model | ZT-295E3MA-FSP (GeForce GTX 295) |
| Graphics core | NVIDIA GeForce GTX 295 (GT200-400-B3) |
| Pipelines | 480 unified stream processors |
| Supported APIs | DirectX 10.0 (Shader Model 4.0), OpenGL 2.1 |
| Core (shader domain) frequency, MHz | 576 (1242) |
| Memory size (type), MB | 1792 (GDDR3) |
| Memory frequency (effective), MHz | 999 (1998 DDR) |
| Memory bus, bit | 2x448 |
| Bus standard | PCI Express 2.0 x16 |
| Maximum resolution | Up to 2560 x 1600 in Dual-Link DVI mode |
| Video outputs | 2x DVI-I (VGA via adapter) |
| HDCP support | Yes |

The video card comes in a cardboard box that is the same as the one used for the ZOTAC GeForce GTX 285 AMP! Edition but has a slightly different design, in the same black and gold colors. On the front, in addition to the name of the graphics processor, it notes 1792 MB of GDDR3 video memory, an 896-bit memory bus, hardware support for the NVIDIA PhysX physics API and a built-in HDMI video interface.

The back of the package lists the general features of the graphics card with a brief explanation of the benefits of NVIDIA PhysX, NVIDIA SLI, NVIDIA CUDA and GeForce 3D Vision.

All this information is briefly duplicated on one of the sides of the box.

The other side of the package lists the exact clock frequencies and the operating systems supported by the drivers.

A little lower, information is carefully indicated on the minimum requirements for the system in which the "gluttonous" ZOTAC GeForce GTX 295 will be installed. So, the future owner of such a powerful graphics accelerator will need a power supply unit with a capacity of at least 680 W, which is capable of providing up to 46 A via the 12 V line. Also, the PSU must have the required number of PCI Express power outputs: one 8-pin and one 6-pin.
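To put the requirement printed on the box in perspective, here is a minimal sketch of the arithmetic behind it. It simply multiplies the 46 A figure by the 12 V rail voltage; the function and variable names are our own, and real power supplies split or derate their rails in ways this ignores.

```python
# Power available on the 12 V rail: P = U * I.
RAIL_VOLTAGE_V = 12.0
REQUIRED_CURRENT_A = 46.0   # current requirement printed on the box
RECOMMENDED_PSU_W = 680.0   # PSU rating recommended on the box


def rail_power_w(voltage_v, current_a):
    """Return the power in watts delivered at the given voltage and current."""
    return voltage_v * current_a


twelve_volt_power_w = rail_power_w(RAIL_VOLTAGE_V, REQUIRED_CURRENT_A)
share_of_psu = twelve_volt_power_w / RECOMMENDED_PSU_W

print(f"12 V rail: {twelve_volt_power_w:.0f} W "
      f"({share_of_psu:.0%} of the recommended PSU rating)")
```

In other words, the recommendation describes the whole system's 12 V budget, not the draw of the video card alone.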

By the way, the new packaging is much more convenient to use than the previously used ones. It allows you to quickly access the contents, which is convenient both when you first build the system and when you change the configuration, for example, if you need some kind of adapter or maybe a driver disk when you reinstall the system.

The delivery set is quite sufficient for installing and using the accelerator, and in addition to the video adapter itself, it includes:

- disk with drivers and utilities;

- CD with bonus game "Race Driver: GRID";

- disc with 3DMARK VANTAGE;

- paper manuals for quick installation and use of the video card;

- branded sticker for the PC case;

- adapter from 2x peripheral power connectors to 6-pin PCI-Express;

- adapter from 2x peripheral power connectors to 8-pin PCI-Express;

- adapter from DVI to VGA;

- HDMI cable;

- cable for connecting the SPDIF output of a sound card to a video card.

The GeForce GTX 295 owes its unusual appearance to its design. This is striking at first glance: a practically rectangular accelerator, completely enclosed by a casing with a slot through which you can see the turbine of the cooling system hidden in the depths.

The reverse side of the video card looks a little simpler. This is due to the fact that, unlike the GeForce 9800 GX2, there is no second half of the casing here. This, it would seem, indicates a greater predisposition of GeForce GTX 295 graphics accelerators to disassembly, but as it turned out later, everything is not so simple and safe.

On top of the video card, almost at the very edge, there are additional power connectors, and due to the rather high power consumption, you need to use one 6-pin and one 8-pin PCI Express.

Next to the power connectors there is a SPDIF digital audio input, which should provide mixing of the audio stream with video data when using the HDMI interface.

Further, a cutout is made in the casing, through which heated air is ejected directly into the system unit. This will clearly worsen the climate inside it, because, given the power consumption of the video card, the cooling system will blow out a fairly hot stream.

Near the panel of external interfaces there is an SLI connector, which allows two such powerful accelerators to be combined in an uncompromising Quad SLI configuration.

Two DVIs are responsible for image output, which can be converted to VGA with the help of adapters, as well as a high-definition multimedia output HDMI. There are two LEDs next to the top video outputs. The first one displays the current power status - green if the level is sufficient and red if one of the connectors is not connected or an attempt was made to power the card from two 6-pin PCI Express. The second one indicates the DVI output to which the master monitor should be connected. A little lower is a ventilation grill through which part of the heated air is blown out.

Disassembling the video card, however, turned out to be not as difficult as it was in the case of the GeForce 9800 GX2. First you need to remove the top casing and ventilation grill from the interface panel, and then just unscrew a dozen and a half spring-loaded screws on each side of the video card.

Inside this "sandwich" there is a single cooling system, consisting of several copper heat sinks and copper heat pipes, on which a lot of aluminum plates are strung. All this is blown by a sufficiently powerful and, accordingly, not very quiet turbine.

There are printed circuit boards on both sides of the cooling system, each of which represents one half of the GeForce GTX 295: it carries one GPU with its own video memory and power system, as well as auxiliary chips. Please note that the 6-pin auxiliary power connector is located on the half more densely populated with chips, which is logical, because up to 75 W is also supplied by the PCI Express slot on that same board.

The boards are interconnected using special flexible SLI bridges. Both the bridges and their connectors are very fragile, so we do not recommend opening your expensive video card without a real need.
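The connector layout can be checked against the card's stated power draw with a rough budget. The per-source limits below (75 W for the slot and for a 6-pin plug, 150 W for an 8-pin plug) are the usual PCI Express specification limits rather than values measured in this review, the 289 W figure is the maximum quoted for the GeForce GTX 295, and all names are illustrative.

```python
# Upper power limits per source, in watts (PCI Express specification values,
# assumed here; they are not measurements from this review).
PCIE_SLOT_W = 75
SIX_PIN_W = 75
EIGHT_PIN_W = 150
GTX295_MAX_DRAW_W = 289  # maximum power consumption quoted for the card


def available_power_w(*sources_w):
    """Sum the power limits of all sources feeding the card."""
    return sum(sources_w)


# Required configuration: slot + one 6-pin + one 8-pin plug.
required_config_w = available_power_w(PCIE_SLOT_W, SIX_PIN_W, EIGHT_PIN_W)
# Unsupported configuration: slot + two 6-pin plugs.
two_six_pin_w = available_power_w(PCIE_SLOT_W, SIX_PIN_W, SIX_PIN_W)

for label, budget in (("6-pin + 8-pin", required_config_w),
                      ("6-pin + 6-pin", two_six_pin_w)):
    verdict = "enough" if budget >= GTX295_MAX_DRAW_W else "not enough"
    print(f"{label}: {budget} W available, {verdict} for {GTX295_MAX_DRAW_W} W")
```

Under these assumptions, two 6-pin plugs fall short of the quoted maximum, which is consistent with the card signalling an error with its red LED in that configuration.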

But bridges alone are not enough to ensure the coordinated operation of parts of the GeForce GTX 295 with the rest of the system. In real conditions, the chipset or the increasingly used NVIDIA nForce 200 extension with PCI Express 2.0 support is responsible for the operation of the SLI-bundle of a pair of video cards. It is the NF200-P-SLI PCIe switch that is used in the GeForce GTX 295.

The boards also have two NVIO2 chips that are responsible for the video outputs: the first one provides support for a pair of DVI, and the second one for one HDMI.

It is thanks to the presence of the second chip that multi-channel audio is mixed from the SPDIF input to a convenient and promising HDMI output.

The video card is based on two NVIDIA GT200-400-B3 chips. Unfortunately, there was not enough space on the printed circuit boards to accommodate several more memory chips, so the full-fledged GT200-350 had its memory bus reduced from 512 to 448 bits, which in turn led to a reduction in rasterization channels to 28, like in the GeForce GTX 260. But on the other hand, there are 240 unified processors left, like a full-fledged GeForce GTX 285. The graphics processor itself operates at a frequency of 576 MHz, and shader pipelines at 1242 MHz.
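The link between bus width and the number of rasterization units can be sketched numerically. The sketch assumes that GT200 attaches four ROPs to each 64-bit memory partition, which matches the 448-bit bus with 28 ROPs described above; the constants and the function name are our own.

```python
PARTITION_WIDTH_BITS = 64  # assumed width of one memory partition
ROPS_PER_PARTITION = 4     # assumed number of ROPs attached to each partition


def rops_for_bus(bus_width_bits):
    """Return the ROP count implied by a given memory bus width."""
    partitions = bus_width_bits // PARTITION_WIDTH_BITS
    return partitions * ROPS_PER_PARTITION


full_chip_rops = rops_for_bus(512)       # full 512-bit GT200
gtx295_rops_per_gpu = rops_for_bus(448)  # each GPU of the GeForce GTX 295
gtx295_total_bus_bits = 2 * 448          # the "896-bit" figure on the box

print(f"512-bit bus: {full_chip_rops} ROPs")
print(f"448-bit bus: {gtx295_rops_per_gpu} ROPs per GPU")
print(f"Combined bus width: {gtx295_total_bus_bits} bits")
```

Removing one 64-bit partition per GPU therefore costs both memory chips and four ROPs at once.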

The total of 1792 MB of video memory is made up of Hynix H5RS5223CFR-N0C chips, which at an operating voltage of 2.05 V have an access time of 1.0 ns, i.e. they are rated for an effective frequency of 2000 MHz. They run at exactly this frequency, leaving no rated margin for overclockers.
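The memory figures can be cross-checked with simple arithmetic. The sketch below assumes a 1.0 ns cycle time for the chips, double data rate operation for GDDR3 and the 448-bit bus of each GPU, and it uses the nominal 1998 MHz effective frequency from the specification; the bandwidth it prints is a theoretical peak, not a measurement.

```python
CYCLE_TIME_NS = 1.0       # assumed cycle time of the memory chips
BUS_WIDTH_BITS = 448      # memory bus of one GPU
EFFECTIVE_MHZ = 1998      # nominal effective memory frequency
TOTAL_MEMORY_MB = 1792    # memory of the whole card
GPU_COUNT = 2

# A 1 ns cycle corresponds to a 1000 MHz clock; DDR transfers twice per cycle.
rated_clock_mhz = 1000.0 / CYCLE_TIME_NS
rated_effective_mhz = 2 * rated_clock_mhz

# Peak bandwidth = bytes per transfer * transfers per second (in MB/s).
bandwidth_per_gpu_mb_s = (BUS_WIDTH_BITS // 8) * EFFECTIVE_MHZ
memory_per_gpu_mb = TOTAL_MEMORY_MB // GPU_COUNT

print(f"Rated effective frequency: {rated_effective_mhz:.0f} MHz")
print(f"Peak bandwidth per GPU: {bandwidth_per_gpu_mb_s / 1000:.1f} GB/s")
print(f"Memory per GPU: {memory_per_gpu_mb} MB")
```

Note that each GPU addresses only its own half of the memory, so the 1792 MB total does not behave like a single pool.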

Cooling System Efficiency

In a closed but well-ventilated test case, we were unable to get the turbine to automatically spin up to maximum speed. True, even with 75% of the cooler's capabilities, it was far from quiet. In such conditions, one of the GPUs, located closer to the turbine, heated up to 79ºC, and the second - up to 89ºC. Given the overall power consumption, these are still far from critical overheating values. Thus, we can note the good efficiency of the cooler used. If only it were a little quieter...

At the same time, acoustic comfort was disturbed not only by the cooling system - the power stabilizer on the card also did not work quietly, and its high-frequency whistle cannot be called pleasant. True, in a closed case, the video card became quieter, and if you play with sound, then good speakers will completely hide its noise. It will be worse if you decide to play in the evening with headphones, and next to you someone is already going to rest. But that's exactly the price you pay for a very high performance.

For testing, we used Test Bench No. 2 for video cards:

| CPU | Intel Core 2 Quad Q9550 (LGA775, 2.83GHz, L2 12MB) @3.8GHz |
|---|---|
| Motherboards | ZOTAC NForce 790i-Supreme (LGA775, nForce 790i Ultra SLI, DDR3, ATX); GIGABYTE GA-EP45T-DS3R (LGA775, Intel P45, DDR3, ATX) |
| Coolers | Noctua NH-U12P (LGA775, 54.33 CFM, 12.6-19.8 dB); Thermalright SI-128 (LGA775) + VIZO Starlet UVLED120 (62.7 CFM, 31.1 dB) |
| Additional cooling | VIZO Propeller PCL-201 (+1 slot, 16.0-28.3 CFM, 20 dB) |
| RAM | 2x DDR3-1333 1024MB Kingston PC3-10600 (KVR1333D3N9/1G) |
| Hard drives | Hitachi Deskstar HDS721616PLA380 (160 GB, 16 MB, SATA-300) |
| Power supplies | Seasonic M12D-850 (850W, 120mm, 20dB); Seasonic SS-650JT (650W, 120mm, 39.1dB) |
| Case | Spire SwordFin SP9007B (Full Tower) + Coolink SWiF 1202 (120x120x25, 53 CFM, 24 dB) |
| Monitor | Samsung SyncMaster 757MB (DynaFlat, [email protected] Hz, MPR II, TCO'99) |


Having in its arsenal the same number of stream processors as a pair of GeForce GTX 280, but in other characteristics more like a 2-Way SLI bundle from the GeForce GTX 260, the dual-chip GeForce GTX 295 video card is located just between the pairs of these accelerators and only occasionally overtakes all possible competitors. But since it does not impose SLI support on the motherboard and should cost less than two GeForce GTX 280 or GeForce GTX 285, it can be considered a really promising high-performance solution for true gamers.

Energy consumption

Having repeatedly made sure during the tests of power supplies that the system requirements for performance components often indicate overestimated recommendations regarding the required power, we decided to check whether the GeForce GTX 295 really requires 680 watts. At the same time, let's compare the power consumption of this graphics accelerator with other video cards and their bundles, complete with a quad-core Intel Core 2 Quad Q9550 overclocked to 3.8 GHz.

| Power consumption, W | GeForce GTX 295 | GeForce GTX 260 SLI | GeForce GTX 260 3-Way SLI | | |
|---|---|---|---|---|---|
| Crysis Warhead, Max Quality, 2048x1536, AA4x/AF16x | | | | | |
| Call of Juarez, Max Quality, 2048x1536, AA4x/AF16x | | | | | |
| FurMark, stability, 1600x1200, AA8x | | | | | |
| Idle mode | | | | | |

The results refute the recommendation to use very powerful power supplies for the GeForce GTX 295. In other words, manufacturers are clearly playing it safe in case a low-quality power supply with overstated ratings is used, whereas a good 500 W power supply from a reliable manufacturer will provide enough power for such a powerful video card.

Overclocking

To overclock the video card, we used the RivaTuner utility, while the case was opened to improve the flow of fresh air to the video card. In addition, the VIZO Propeller cooler provided an improved supply of fresh air directly to the video card turbine, and a household fan standing next to the system unit “blew” the air heated by the video card to the side, preventing it from getting inside the case again.

As a result of overclocking, the frequency of the raster and shader domains was raised to 670 and 1440 MHz, which is 94 MHz (+16.3%) and 198 MHz (+15.9%) respectively higher than the default values. The video memory worked at an effective frequency of 2214 MHz, which is 216 MHz (+10.8%) higher than the nominal one. Let's see how overclocking a video card affects performance.
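The overclocking gains quoted above can be reproduced with a few lines of arithmetic. This is only an illustration: the function name and the dictionary layout are our own, while the frequencies are the stock and overclocked values given in the text.

```python
def overclock_gain(stock_mhz, overclocked_mhz):
    """Return the absolute (MHz) and relative (%) frequency increase."""
    delta_mhz = overclocked_mhz - stock_mhz
    return delta_mhz, delta_mhz / stock_mhz * 100


# (stock, overclocked) frequencies in MHz for each clock domain.
domains = {
    "core": (576, 670),
    "shader": (1242, 1440),
    "memory (effective)": (1998, 2214),
}

gains = {name: overclock_gain(*freqs) for name, freqs in domains.items()}

for name, (delta_mhz, percent) in gains.items():
    print(f"{name}: +{delta_mhz} MHz (+{percent:.1f}%)")
```

The relative gain of the memory is noticeably smaller than that of the GPU domains, which is worth keeping in mind when reading the performance results.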

| Test package | Standard frequencies | Overclocked graphics card | Productivity increase, % |
|---|---|---|---|
| Crysis Warhead, Maximum Quality, NO AA/AF, fps | | | |
| Far Cry 2, Maximum Quality, NO AA/AF, fps | | | |
| Far Cry 2, Maximum Quality, AA4x/AF16x, fps | | | |

Overclocking an already fast video card yielded up to 13% more performance. But even at very high resolutions and maximum image quality settings this will be hard to notice, because even without overclocking the GeForce GTX 295 provides sufficient frame rates in all games.

Conclusions

The GeForce GTX 295 is a qualitative step forward for dual-chip accelerators aimed at true connoisseurs of computer games. It really does provide a very high level of performance, at times enough to call it the fastest 3D accelerator available today. At the same time, it has moderate power consumption and a not so noisy cooling system. On the other hand, there are still practically no games in which top-end single-chip video cards fail to provide playable frame rates at maximum image quality settings and very high resolutions. Therefore, you will have to think twice before buying such an expensive graphics accelerator, which does not always provide a noticeable performance jump. It will probably be more sensible to take a GeForce GTX 285 and put the savings toward a more powerful processor, a more capable motherboard, more and faster RAM, or maybe just a more spacious, well-ventilated case and another large hard drive. In any case, you will have to put up with the noisy power regulator of the new GeForce GTX 285 and GeForce GTX 295, although this defect may be corrected in future revisions of the video cards.

As for the ZOTAC GeForce GTX 295, this video card completely repeats the reference accelerator, and its distinctive positive features include only a very good bundle and, perhaps, a slightly lower cost than its competitors.

Advantages:

- very high performance in gaming applications;

- support for DirectX 10.0 (Shader Model 4.0) and OpenGL 2.1;

- support for NVIDIA CUDA and NVIDIA PhysX technologies;

- Quad SLI support;

- excellent bundle.

Disadvantages:

- noticeable whine from the power regulator.

Features:

- medium-noise and relatively efficient cooling system;

- increased requirements for the power supply;

- relatively high cost.

We are grateful to ELKO Kiev, the official distributor of Zotac International in Ukraine, for the video cards provided for testing.

At the beginning of this year, at the international CES 2009 exhibition, NVIDIA introduced two new accelerators that remain the fastest in their classes to this day. We are, of course, talking about the single-chip GeForce GTX 285 and the dual-GPU flagship GeForce GTX 295, which became excellent weapons in the competitive struggle with ATI, whose 4000 series of accelerators turned out to be extremely successful and regained the trust of users. Nevertheless, the green giant found the strength to answer its eternal rival. Almost half a year has passed since the official release of the GeForce GTX 295, and the web has long been filled with dozens of reviews and tests, published mainly in the middle of winter, when the vast majority of samples reached the test laboratories, so almost everything is known about this video card. But we would like to return to it even after such a long time. The reason is simple: drivers, which play an important role in the overall performance of a video card. ATI in particular has been noted for competent software optimization, and its latest software has become a real gift for overclockers and extreme enthusiasts, which means it makes sense to revisit the results of earlier tests. So, we have a Gainward GTX 295, and our task is quite simple: to determine whether the GeForce GTX 295 is still the fastest card or has lost the palm to the Radeon HD 4870X2.

Introduction

Quite recently, NVIDIA's flagship underwent a number of design changes: the video card "lost" exactly one PCB, and the remaining one now accommodates all the necessary components. This step is justified primarily from an economic point of view: it should help vendors reduce production costs and offer the product at a better price, which is a significant trump card in the price war with AMD. The two-slot turbine cooler has given way to a complex two-section heatsink with a single fan in the center, but the accelerator is just as thick and still occupies two slots. At the time of writing, the "single-deck" versions had not yet reached retail stores; moreover, according to our information, the vast majority of vendors are having difficulty obtaining the necessary PCBs, which means that the first batches will not bring the expected price reduction. Sooner or later the situation will normalize, and we hope to have the opportunity to get acquainted with the new revision of the accelerator.

Design and packaging

Before proceeding to the study of our sample, I would like to briefly recall its main technical characteristics:

- Two GT200b graphics chips (55nm);

- 240 stream processors per core;

- "Double" 448-bit bus;

- 1792 MB GDDR3 memory;

- Support for DirectX 10.0, Shader Model 4.0, CUDA, PhysX technologies.

NVIDIA engineers decided not to reinvent the wheel and used a well-established scheme for building a dual-chip video card, copying the design of the GeForce 9800 GX2. All GPUs, memory chips, power components and key controllers are soldered on the inward-facing sides of the PCBs and covered by a massive cooling system. We will look at the component layout a little later; first we will study the appearance of the accelerator, the packaging and the bundle.

The video card came to us in the traditional Gainward packaging - a medium-sized cardboard box with branding for its own GeForce series - a winged spirit depicted against the backdrop of a mythical dilapidated city:


On the back of the package you can see the benefits of new technologies from NVIDIA, a brief description of which is present in almost any vendor - apparently this is one of the requirements of the green giant that must be met.


Inside we find the accelerator itself, sealed in an antistatic bag, an adapter from the DVI digital output to analog D-Sub, an S/PDIF cable, a driver disk that also carries the proprietary ExperTool overclocking utility, and a brief instruction manual. Two adapters from standard peripheral power connectors to 6-pin and 8-pin PCIe did not make it into the photo, but they are also included in the kit. The bundle is extremely modest, but it has no frills that would make the already considerable cost of the product even higher.


And here is the video card itself. As befits a high-status item, it has considerable weight, which it owes to the installed cooling system. The metal casing has a rubberized coating, although it is not immediately clear what material was applied to the cover. Since this is a completely reference model, the manufacturer placed a branded sticker with its name on the left side of the card to stand out from the “crowd” of similar devices.


The back side does not carry critically hot components and is therefore not covered by a stiffening plate that would double as a heat sink, although one would certainly not hurt there. The high temperature of the board is indicated by a yellow triangle, a Gainward warning that should not be ignored. EVGA, for example, offers a proprietary backplate that acts as an additional heat sink; it is sold separately, but can easily be installed on any dual-PCB GeForce GTX 295.


During operation, part of the heated air will remain inside the case (if, of course, you use one), but some of it will be exhausted outside through special slots in the rear bracket. An HDMI connector is located next to them, and two DVI ports are located one level below, so in total the video signal can be output to three displays. There are also two LEDs that signal normal or faulty operation of the video adapter.


Now let's take our device apart. I would like to note right away that this should be done with extreme caution, since the fragile dies of some chips can be damaged. To dismantle the cooling system you need only a screwdriver and a hex bit to remove the DVI port mounts, and if you are not particular about the bit, there are ways to manage with a screwdriver alone. I would like to pay tribute to the assemblers: all the screws were tightened thoroughly, which caused a few problems. Until now, the author had used a simple Chinese screwdriver (about 30 cents), but its tip was stripped on the very first screw. Then a more expensive Polish tool was purchased (a whole dollar and a half), which lasted for three screws before its tip crumbled. Surprisingly, screwdriver number three (even more expensive) did the trick, saving me some money. We will get acquainted with the cooling system itself later; for now, a look at the disassembled card.


Two halves of the fastest graphics card to date. The assembly of all the elements is quite dense and we can only admire the NVIDIA engineers who managed to "squeeze" everything you need onto one PCB.


Each graphics chip is labeled G200-400-B3, i.e. we are looking at the 55 nm G200b with 240 shader processors.


The required amount of memory (1792 MB) is made up of Hynix GDDR3 chips marked H5RS5223CFR-N0C, 14 on each board, with an effective frequency of 2000 MHz.


Both boards are interconnected by two flexible cables of different lengths, so it will be difficult to confuse them, especially since each of them has a special key that indicates the orientation in the connector. To the right of the connectors, you can see the nForce 200 chip, which serves to organize the internal SLI mode.


To power the accelerator, there are two PCIe connectors, one 8-pin and one 6-pin, located on different PCBs. According to the developers, at maximum load the accelerator can consume up to 289 W, and a PSU rated above 680 W is recommended. In practice this recommendation is considerably overstated: on average, 220-230 W is enough for the card. For example, a 550 W power supply was installed in our test bench, and during active operation neither it nor the accelerator showed any signs of trouble, so NVIDIA is simply playing it safe. Now about the cooling system.


The G200b graphics chip contacts the heat spreader through a rather thick layer of gray thermal paste, while the memory chips and power components transfer heat through special fibrous pads. The radiator is quite heavy, as on any top-end accelerator. Before reassembling, do not forget to replace the thermal paste on the GPU; for the other components this is not critical.


At the moment there is not a single alternative air cooler for the reference GeForce GTX 295 on the market, which is due to the complex design of each of the PCBs, and manufacturers are unlikely to offer one, especially since dual-PCB video cards have been discontinued. Only owners of water-cooling systems have reason to rejoice: there is a huge number of compatible water blocks on the market from almost all the leading companies in the industry.

This concludes the visual inspection; let's proceed to the practical part and check the level of performance in popular test packages and modern video games. The opponent is the Radeon HD 4870X2 (our sample is made by Gigabyte), introduced last summer and holding the leading position until the beginning of 2009.

Whether the new ATI drivers helped it outpace the NVIDIA accelerator or, on the contrary, set it back is what we now have to find out.

Test system configuration and software used

The following set of components and devices was used as the main test bench:

- Processor: Intel Core 2 Duo E8500 (E0);

- Motherboard: Asus Maximus II Formula (BIOS 2104);

- RAM: Mushkin Redline XP2-8000 (2x2 GB);

- Hard drive: Samsung HD103UJ (1 TB);

- Power supply: be quiet! Straight Power BQT E5-550W;

- Case: Cooler Master Cosmos S.

The processor was cooled by a closed loop liquid cooling system. The temperature in the room did not exceed 24 degrees Celsius. The CPU frequency was boosted to 4.5 GHz and did not change throughout the test. Memory timings were set as 5-5-5-12.

Software:

The test system ran the 32-bit Microsoft Windows Vista SP1 operating system. The latest driver packages were installed: ForceWare 185.85 WHQL for the NVIDIA video card and Catalyst 9.6 for the ATI accelerator. All settings were left at their defaults.

Benchmarks:

- 3DMark06;

- 3DMark Vantage.

Games:

- Crysis Warhead;

- Far Cry 2;

- World in Conflict;

- S.T.A.L.K.E.R.: Clear Sky;

- Devil May Cry 4.

The first thing I wanted to check was the level of heat dissipation, for which I launched the FurMark v1.6.5 utility, capable of warming up the video adapter as much as possible. The accelerator's cooling system reacts quite quickly to rising temperature, and after a couple of minutes the noise of the turbine becomes annoying. The temperature did not exceed 92 degrees Celsius. This is quite an expected result: you pay for record speed with considerable noise and heat.

Let's move on to synthetic tests, starting with 3DMark06 from Futuremark. All package settings were left at their defaults. First, the results of the two accelerators at stock frequencies:

Then with increased clock speeds:

We repeat the procedure with 3DMark Vantage, first determining the speed of the video cards at factory settings:

... and when overclocked:

We summarize the data obtained in two small tables for clarity.

In 3DMark06 the Radeon HD 4870X2 has an obvious advantage: it outperforms its rival even at stock frequencies and increases its lead as clock frequencies rise. The situation in 3DMark Vantage is quite different. The ATI accelerator fell far behind the GeForce GTX 295, giving up its position virtually without a fight. Overclocking did not help it much either, which cannot be said about the NVIDIA-based card, which showed a much better result.

Games. We have selected five popular video games, most of which are very demanding on system resources and, in particular, on the PC graphics subsystem.

Crysis Warhead

Without question a gaming masterpiece, capable at maximum settings of bringing even the most advanced computers to their knees. Performance was measured with the Crysis Warhead Benchmark Tool using the Enthusiast picture quality preset. All games were run in DX10 mode.

The leadership of the Gainward video card is hard to dispute: in all test modes the NVIDIA flagship confidently outperformed the Radeon HD 4870X2, conceding only 1-2 frames of minimum FPS at 1280x1024 both with and without anti-aliasing and anisotropic filtering, which is a negligible advantage for the competitor. We also note the results of the overclocked GeForce GTX 295: its performance is clearly higher in every mode.

Far Cry 2

This game has a built-in benchmark and it only remains for us to determine the leader in this application. The quality settings were set to Ultra High, and the scripted scene was “run” three times, after which the result was averaged, and the system gave the minimum, average, and maximum FPS values. In this case, we were interested in the smallest and largest readings, which we entered in the corresponding tables:
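The averaging over three runs can be illustrated with a short sketch. The FPS values below are hypothetical placeholders, not results from this review, and the function name is our own; the built-in benchmark performs this step itself.

```python
from statistics import mean

# Hypothetical (min_fps, max_fps) readings from three runs of the same scene.
runs = [(40, 90), (42, 93), (41, 96)]


def average_runs(readings):
    """Average the minimum and maximum FPS separately across all runs."""
    min_values = [min_fps for min_fps, _ in readings]
    max_values = [max_fps for _, max_fps in readings]
    return mean(min_values), mean(max_values)


avg_min_fps, avg_max_fps = average_runs(runs)
print(f"Average minimum FPS: {avg_min_fps}")
print(f"Average maximum FPS: {avg_max_fps}")
```

Averaging several runs smooths out one-off stutters, which matters most for the minimum FPS value.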

And the result was really interesting. The ATI veteran at its stock frequencies was able to outperform even the overclocked NVIDIA video card. This suggests a perhaps premature conclusion: the top-end GeForce GTX 295 will not dominate in all games, and the Canadian developer has something to set against its eternal competitor. It is also worth paying attention to the number of frames per second at different resolutions and detail settings: the results are almost equal, with a difference ranging from tenths of a frame to 6 frames per second. Unfortunately, we did not have the opportunity to test the accelerators on a monitor larger than 22 inches; a higher resolution would certainly have lowered the FPS and most likely allowed us to determine the winner of the test.

World in Conflict

The maximum possible settings and the integrated performance test of the video adapter should show the advantage of one of the dual-processor video cards.

At almost every resolution the maximum FPS stayed at 61-63 frames per second regardless of the installed accelerator, which cannot be said about the minimum values. The Gainward card managed 30 fps at best, while for the Radeon HD 4870X2 52 fps was the worst result. Note that at 1680x1050 the ATI video card feels much more comfortable than at 1280x1024, even with anti-aliasing and anisotropic filtering enabled, although in theory it should be the other way around. Still, one more round goes to the Canadian developers, whose drivers really are on top.

S.T.A.L.K.E.R.: Clear Sky

On the official website of this cult game, a special benchmark is available for free download. It shows how quickly your video card draws the endless landscapes of the virtual Chernobyl zone and lets you compare the result with a constantly growing online database. We used the fourth scene, "Sun Shafts", which loads the video subsystem to the maximum. The ATI accelerator was run in DirectX 10.1 mode.

What do we see? And here we see the complete dominance of the GeForce GTX 295 in all resolutions and anti-aliasing modes. The minimum frame rate can drop to an uncomfortable 17 frames per second, and this trend will continue if you increase the diagonal of the monitor, say, to 24 inches with a resolution of 1920x1200 pixels. To avoid this, you can slightly loosen the quality settings, which will help accelerators to give a more acceptable amount of FPS.

Devil May Cry 4

The last test for today is the three-dimensional third-person action game Devil May Cry 4. In its benchmark, we activated the Super High mode and set the required anti-aliasing and filtering values. The benchmark consists of four scripted scenes, after which a table is built from the average FPS of each scene. Its only drawback is that the minimum and maximum frame rates are not reported. We took the fourth scene as a basis: it is packed with all sorts of monsters and other evil spirits, and drawing them demands considerable resources from the tested video cards.

The GeForce GTX 295 is on top again and does not let the Radeon HD 4870X2 snatch victory in any of the modes, even when it runs at factory settings against the overclocked ATI opponent. It is nice to see that "playability" is up to the mark, with the frame rate never falling below 160 frames per second. Both cards responded well to overclocking, especially the accelerator based on NVIDIA chips.

Conclusion

At the beginning of our review, we asked a simple question: is the GeForce GTX 295 the fastest desktop video card today, or has ATI made an incredible leap and managed to get ahead of the leader? Ironically, it is still very difficult to give a clear answer. NVIDIA's dual-chip flagship is very strong in the synthetic 3DMark Vantage, but it is inferior in 3DMark06, which is more popular among overclockers and in which the Radeon HD 4870X2 is the undisputed leader. The game tests did not reveal a clear favorite either, although most of them were won by the green giant's card. We note the excellent level of software optimization for the video cards, especially in the latest versions of the Catalyst drivers, which forced many enthusiasts to revise their test results and extreme overclockers to update their results on the bot.

I would also like to say a few words about noise. Frankly, its level leaves much to be desired. Top-end accelerators with record-breaking speed and no less record-breaking heat output make their blower fans spin at more than 2000 rpm, which is already comparable to the sound of a small vacuum cleaner. In our subjective opinion, ATI's accelerator is somewhat quieter in 2D, but gets noisier in tests and video games.

Advantages:

- Excellent overclocking potential;

- Excellent performance;

- Relatively low power consumption for a top-end accelerator;

- Well-optimized drivers.

Disadvantages:

- High heat output, though still lower than that of the ATI card;

- Noise under heavy load;

- Modest bundle.

Yes, the price of NVIDIA's new card can scare away even the company's most ardent supporters, since not everyone can afford to pay about five hundred US dollars. But if you need record speed and uncompromising performance, this is the video card for you. It will justify every cent spent on it, but do not forget that the rest of the components (processor, motherboard, RAM) must be of a matching level. We will continue to monitor the market for modern accelerators and will try to promptly inform our readers about its development and priorities. Stay with us - the most interesting is yet to come! :)

The site administration expresses its gratitude to the following companies for the equipment provided for testing: