A blog about WordPress plugin settings and search engine optimization for beginner webmasters. A quick way to check the indexing of pages in Yandex and Google

Site indexing is the first and most important step in optimizing a site. It is precisely because search engines maintain an index that they can answer user queries so quickly and accurately.

What is site indexing?

Site indexing is the process of adding information about the site's content to a search engine's database. That database is the index. For a site to be indexed and appear in the search results, a special search bot must visit it. The bot examines the entire resource, page by page, according to a certain algorithm, finding and indexing links, images, articles, and so on. In the search results, sites with higher authority are ranked above the rest.

There are two ways to get a site indexed by a search engine:

- The search robot discovers new pages or the whole resource on its own. This method works well when there are active links to your site from other, already indexed sites. Otherwise, you can wait for the search robot indefinitely;

- You manually enter the site's URL in the search engine form intended for this. This option lets the new site "queue up" for indexing, which can take quite a long time. The method is simple, free and requires only the address of the main page of the resource. This procedure can be performed through the Yandex and Google webmaster panels.

How to prepare a site for indexing?

It should be noted right away that it is highly undesirable to publish a site while it is still in development. Search engines can index incomplete pages with incorrect information, spelling errors, and so on. As a result, this will negatively affect the site's ranking and how its pages are shown in the search results.

Now let's list the points that should not be forgotten at the stage of preparing a resource for indexing:

- indexing restrictions apply to Flash files, so it is better to build the site in HTML;

- content generated with JavaScript may also not be indexed by search robots, so site navigation should be duplicated with text links, and important information that should be indexed should not be output through JavaScript;

- remove all non-working internal links so that each link leads to a real page of your resource;

- the structure of the site should allow you to easily navigate from the bottom pages to the main page and back;

- it is better to move unnecessary and secondary information and blocks to the bottom of the page, and also hide them from bots with special tags.

How often does indexing take place?

Site indexing, depending on a number of factors, can take from several hours to several weeks, up to a whole month. Index updates (search engine "ups") occur at different intervals. According to statistics, on average, Yandex indexes new pages and sites within 1 to 4 weeks, while Google manages within 7 days.

But with proper preliminary preparation of the created resource, these terms can be reduced to a minimum. After all, the indexing algorithms of all search engines and the logic of their work come down to giving the most accurate and up-to-date answer to a user's request. Accordingly, the more regularly quality content appears on your resource, the faster it will be indexed.

Methods for accelerating indexing

First you need to “notify” the search engines that you have created a new resource, as mentioned above. Many people also recommend adding a new site to social bookmarking systems, but I don’t do that. A few years ago this really did speed up indexing, since search robots often “visit” such resources, but, in my opinion, it is now better to put a link on a popular social network. The robots will soon notice the link to your resource and index it. A similar effect can be achieved with direct links to a new site from already indexed resources.

After several pages have been indexed and the site has begun to develop, you can try to “feed” the search bot to speed up indexing. To do this, publish new content at approximately equal intervals (for example, 1-2 articles every day). Of course, the content must be unique, high-quality, well written and not oversaturated with key phrases. I also recommend creating an XML sitemap, which will be discussed below, and adding it to the webmaster panel of both search engines.

robots.txt and sitemap files

The robots.txt text file contains instructions for search engine bots. Among other things, it makes it possible to prohibit a given search engine from indexing selected pages of the site. If you create it manually, it is important that the file name is written only in lowercase letters and that the file is located in the root directory of the site. Most CMSs generate it themselves or with the help of plugins.

A sitemap, or site map, is a page containing a complete model of the site structure to help "lost users". With it, you can move from page to page without using the site navigation. It is advisable to also create such a map in XML format for search engines and reference it in the robots.txt file to improve indexing.
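As a rough illustration of the XML sitemap mentioned above, here is a minimal Python sketch. The function names and the example.com URLs are my own assumptions for the example; the namespace and the `Sitemap:` line follow the public sitemaps.org protocol. In practice your CMS or a plugin will most likely generate this file for you.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(urls):
    """Return a minimal sitemap XML string listing the given page URLs."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url in urls:
        entry = ET.SubElement(urlset, "url")
        ET.SubElement(entry, "loc").text = url
    return ET.tostring(urlset, encoding="unicode")


def robots_sitemap_line(sitemap_url):
    """Return the robots.txt line that points bots to the sitemap."""
    return f"Sitemap: {sitemap_url}"


if __name__ == "__main__":
    # Hypothetical pages of a hypothetical site.
    print(build_sitemap(["https://example.com/", "https://example.com/about/"]))
    print(robots_sitemap_line("https://example.com/sitemap.xml"))
```

The resulting string can be saved as sitemap.xml in the site root, and the printed `Sitemap:` line added to robots.txt.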

You can find more detailed information about these files in the relevant sections.

How to prevent a site from being indexed?

You can manage indexing, including prohibiting a site or a separate page from being indexed, using the robots.txt file already mentioned above. To do this, create a text document with this name on your PC, place it in the root folder of the site and specify in the file which search engine you want to hide the site from. To hide the site from a single bot, name it in the User-agent line (for example, Googlebot or Yandex); to address all bots at once, use the * sign. The following instruction in robots.txt prohibits indexing by all search engines:

User-agent: *
Disallow: /
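Before uploading such a file, you can check how a bot would read it. The sketch below uses Python's standard `urllib.robotparser` module; the helper name `is_allowed` and the example.com address are assumptions made for this example, and real search engines may interpret some rules slightly differently.

```python
from urllib.robotparser import RobotFileParser

# The rule from the article: close the whole site to all bots.
BLOCK_ALL = "User-agent: *\nDisallow: /\n"

# A variation (assumption for illustration): close the site to Yandex only.
BLOCK_YANDEX = "User-agent: Yandex\nDisallow: /\n"


def is_allowed(robots_txt, user_agent, url):
    """Return True if the given bot may fetch the URL under these rules."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


if __name__ == "__main__":
    page = "https://example.com/some-page/"
    print(is_allowed(BLOCK_ALL, "Googlebot", page))
    print(is_allowed(BLOCK_YANDEX, "Yandex", page))
    print(is_allowed(BLOCK_YANDEX, "Googlebot", page))
```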

For WordPress sites, you can disable site indexing through the control panel. To do this, in the site visibility settings, check the box "Recommend to search engines not to index the site." Yandex will most likely respect your wishes, but Google is not obliged to, so some problems may arise.

For a number of reasons, search engines do not index all pages of the site or, conversely, add unwanted ones to the index. As a result, it is almost impossible to find a site that has the same number of pages in Yandex and Google.

If the discrepancy does not exceed 10%, not everyone pays attention to it. That attitude is acceptable for media and information sites, where the loss of a small part of the pages does not affect the overall traffic. But for online stores and other commercial sites, the absence of product pages in the search (even one out of ten) is a loss of income.

Therefore, it is important to check the indexing of pages in Yandex and Google at least once a month, compare the results, identify which pages are missing in the search, and take action.
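The comparison itself is easy to automate once you have the lists of indexed URLs. Below is a minimal sketch that assumes you have already exported those lists from the webmaster panels; the function name and the way the discrepancy is calculated (the difference between the two page counts divided by the larger one) are my own assumptions, not an official metric.

```python
def compare_indexing(site_urls, yandex_urls, google_urls):
    """Compare two lists of indexed URLs against the full list of site URLs."""
    site = set(site_urls)
    # Ignore indexed URLs that are not part of the site list.
    yandex = site & set(yandex_urls)
    google = site & set(google_urls)
    larger = max(len(yandex), len(google))
    # Share by which the two page counts differ (0.0 when nothing is indexed).
    discrepancy = abs(len(yandex) - len(google)) / larger if larger else 0.0
    return {
        "missing_in_yandex": sorted(site - yandex),
        "missing_in_google": sorted(site - google),
        "discrepancy": discrepancy,
    }


if __name__ == "__main__":
    # Hypothetical data: ten pages, one of them absent from Yandex.
    pages = [f"https://example.com/p{i}" for i in range(10)]
    report = compare_indexing(pages, pages[:9], pages)
    print(report["missing_in_yandex"])
    print(f"{report['discrepancy']:.0%}")
```

The "missing" lists are the pages to look at first when deciding what action to take.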

Problem with monitoring indexing

Viewing indexed pages is not difficult. You can do this by downloading reports in the webmaster panels:

- Yandex Webmaster (“Indexing” / “Pages in search” / “All pages” / “Download XLS / CSV table”);

Tool Features:

- simultaneous check of indexed pages in Yandex and Google (or in one PS);

- the ability to check all site URLs at once by ;

- there is no limit on the number of URLs.

Peculiarities:

- work "in the cloud" - no need to download and install software or plugins;

- uploading reports in XLSX format;

- notification by mail about the end of data collection;

- storage of reports for an unlimited time on the PromoPult server.

Everything is very simple with Google. You need to add your site to webmaster tools at https://www.google.com/webmasters/tools/, then select the added site, thus getting into the Search Console of your site. Next, in the left menu, select the “Scanning” section, and in it the “View as Googlebot” item.

On the page that opens, enter the address of the new page that we want to index quickly in the empty field (the site's domain name is already filled in) and click the “Scan” button to the right. Wait until the page is scanned and appears at the top of the table of addresses previously scanned in this way. Next, click the "Add to Index" button.

Hooray, your new page is instantly indexed by Google! In a couple of minutes you will be able to find it in the Google search results.

Fast indexing of pages in Yandex

In the new version of Yandex Webmaster, a similar tool for adding new pages to the index has become available. Accordingly, your site must first be added to Yandex Webmaster. To get to the tool, select the desired site in the webmaster panel, go to the "Indexing" section and select the "Page Recrawl" item. In the window that opens, enter the addresses of the new pages that we want to index quickly (one link per line).

Unlike Google, indexing in Yandex does not happen instantly yet, but it is moving in that direction. With the above actions, you inform the Yandex robot about the new page, and it will be indexed within half an hour to an hour, at least in my personal experience. The page indexing speed in Yandex probably depends on a number of parameters (the reputation of your domain, your account, and/or others). In most cases, you can stop there.

If you see that the pages of your site are poorly indexed by Yandex, here are a few general recommendations on how to deal with this:

- The best, but also the most difficult recommendation is to attract Yandex's fast robot to your site. To do this, it is desirable to add fresh materials to the site every day, preferably 2-3 or more. Do not add them all at once but spread them out, for example, in the morning, afternoon and evening. It is even better to follow approximately the same publication schedule. Also, many people recommend creating an RSS feed for the site so that search robots can read updates directly from it.

- Naturally, not everyone will be able to add new materials to the site in such volumes - it's good if you can add 2-3 materials per week. In this case, you should not count on Yandex's fast robot, but you can try to drive new pages into the index in other ways. The most effective of these is considered to be posting links to new pages from well-developed Twitter accounts. Using special programs like Twidium Accounter, you can “pump up” as many Twitter accounts as you need and use them to quickly drive new pages of the site into the search engine index. If you cannot post links from such accounts yourself, you can buy such posts through special exchanges. One post with your link costs on average 3-4 rubles or more (depending on the quality of the chosen account). But this option will be quite expensive.

- The third option for quick indexing is to use the http://getbot.guru/ service, which for just 3 rubles will help you achieve the desired effect with a guaranteed result. It is well suited for sites that publish rarely. There are also cheaper rates. Details and differences between them are best viewed on the website of the service itself. Personally, I am very satisfied with this service as an indexing accelerator.

Of course, you can also add new publications to social bookmarking, which theoretically should also contribute to the rapid indexing of the site. But the effectiveness of such an addition will also depend on the level of your accounts. If you have little activity on them and you use your accounts only for such spam, then there will be practically no useful output.


Site indexing in search engines is important for every webmaster. Indeed, for the qualitative promotion of the project, it is necessary to monitor its indexing. I will describe the process of checking indexing in Yandex.

Indexing in Yandex

The Yandex robot scans websites day after day in search of something “delicious”. It puts at the top of the search results those sites and pages that, in its opinion, deserve it the most. Well, or Yandex just wanted it that way, who knows 🙂

We, as real webmasters, will adhere to the theory that the better the site is made, the higher its positions and more traffic.

There are several ways to check the indexing of a site in Yandex:

- using Yandex Webmaster;

- using search engine operators;

- using extensions and plugins;

- using online services.

Indexing site pages in Yandex Webmaster

To understand what the search engine has dug up on our site, you need to go to our favorite Yandex Webmaster in the “Indexing” section.

Crawl statistics in Yandex Webmaster

First, let's go to the "Crawl statistics" item. The section allows you to find out which pages of your site the robot crawls. You can identify addresses that the robot could not load because the server hosting the site was unavailable, or because of errors in the content of the pages themselves.

The section contains information about the pages:

- new - pages that have recently appeared on the site or that the robot has only just crawled;

- changed - pages that the Yandex search engine used to see, but they have changed;

- crawl history - the number of pages that Yandex crawled, taking into account the server response code (200, 301, 404, and others).

The graph shows new (green) and changed (blue) pages.

And this is the crawl history graph.

This item displays the pages that Yandex has found.

N/a - the URL is not known to the robot, i.e. the robot has never encountered it before.

What conclusions can be drawn from the screen:

- Yandex did not find the address /xenforo/xenforostyles/, which, in fact, is logical, because this page no longer exists.

- Yandex found the address /bystrye-ssylki-v-yandex-webmaster/, which is also quite logical, because the page is new.

So, in my case, Yandex Webmaster reflects what I expected to see: Yandex removed what is not needed and added what is needed. So everything is fine with crawling on my site, there are no obstacles.

Pages in search

Search results are constantly changing - new sites are added, old ones are deleted, places in the results are adjusted, and so on.

You can use the information in the "Pages in search" section:

- to track changes in the number of pages in Yandex;

- to keep track of added and excluded pages;

- to find out the reasons for exclusion of the site from the search results;

- to obtain information about the date the site was visited by a search engine;

- for information about changes in search results.

This is the section you need to check the indexing of pages. Here Yandex Webmaster shows the pages added to the search results. If all your pages appear in the section (a new one should be added within a week), then everything is in order with the pages.

Checking the number of pages in the Yandex index using operators

In addition to Yandex Webmaster, you can check page indexing using operators directly in the search itself.

We will use two operators:

- "site" - search through all subdomains and pages of the specified site;

- "host" - search through pages hosted on this host.

Let's use the "site" operator. Note that there is no space between the operator and the site. 18 pages are in Yandex search.

Let's use the "host" operator. 19 pages indexed by Yandex.

Checking Indexing with Plugins and Extensions

Check site indexing using services

There are a lot of such services. I'll show you two.

Serphunt

Serphunt is an online website analysis service. They have a useful tool for checking page indexing.

At the same time, you can check up to 100 pages of the site using two search engines - Yandex and Google.

Click "Start scan" and after a few seconds we get the result:

If there are problems with indexing, first of all, you need to check robots.txt and sitemap.xml.

Any search engine has a large database where all sites and new pages are entered. This database is called the "index". Until the robot crawls an html document, analyzes it and adds it to the index, the document will not appear in the search results. It can only be accessed via a direct link.

What does "indexing" mean?

Better than a Yandex indexing specialist, no one will tell you about this:

Indexing is a process during which the search robot crawls the pages of the site and includes (or does not include) these pages in the search engine index. The search bot scans all content and performs a semantic analysis of the text, the quality of links, and audio and video files. Based on all this, the search engine draws conclusions and ranks the site.

As long as the site is out of the index, no one will know about it, except for those to whom you can distribute direct links. That is, the resource is available for viewing, but it is not in the search engine.

What is the index for?

The site needs to become visible in search in order to advance, grow and develop. A web resource that does not appear in any search engine is useless and benefits neither users nor its owner.

In general, here is the full video from the school of Yandex webmasters, if you watch it in full, you will become practically a specialist in the issue of indexing:

What determines indexing speed

The main points that determine how quickly your site can get into the area of attention of search robots:

- Domain age (the older the domain name, the more bots favor it).

- Hosting (search engines strongly dislike and often ignore free hosting).

- CMS, purity and validity of the code.

- Page refresh rate.

What is crawl budget

Each site has a crawl budget - that is, the maximum number of pages that can get into the index. If the site's crawl budget is 1000 pages, then even if you have ten thousand pages, there will only be a thousand in the index. The size of this budget depends on how authoritative and useful your site is. And if your pages are not getting into the index, then one option, no matter how trite it may sound, is to improve the site!

Site indexing

When creating a new site, you need to correctly fill out the robots.txt file, which tells search engines whether the resource can be indexed, which pages to crawl and which not to touch.

The file is created in txt format and placed in the root folder of the site. Writing a correct robots.txt is a separate topic. This file primarily determines what the bots will analyze on your site and how.

Usually, it takes from a couple of weeks to a couple of months for search engines to evaluate a new site and enter it into the database.

Spiders carefully scan each permitted html document, determining the subject of the new, young resource. This is not done in one day. With each new crawl, search engines add more and more html documents to their database. Moreover, from time to time the content is re-evaluated, as a result of which the positions of pages in the search results may change.

The robots meta tag and, to some extent, canonical also help manage indexing. When checking the structure and solving problems with indexing, you should always look for their presence.
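To check for these tags without opening the page source by hand, you can use something like the following sketch. It relies only on Python's standard `html.parser` module; the class and function names are hypothetical, and the sample HTML is made up for the example.

```python
from html.parser import HTMLParser


class IndexingTagParser(HTMLParser):
    """Collect the robots meta tag and the canonical link from a page."""

    def __init__(self):
        super().__init__()
        self.robots = None
        self.canonical = None

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        if tag == "meta" and (attributes.get("name") or "").lower() == "robots":
            self.robots = attributes.get("content")
        elif tag == "link" and (attributes.get("rel") or "").lower() == "canonical":
            self.canonical = attributes.get("href")


def inspect_indexing_tags(html):
    """Return the robots directive, the canonical URL and a noindex flag."""
    parser = IndexingTagParser()
    parser.feed(html)
    noindex = parser.robots is not None and "noindex" in parser.robots.lower()
    return {"robots": parser.robots, "canonical": parser.canonical, "noindex": noindex}


if __name__ == "__main__":
    sample = (
        '<html><head><meta name="robots" content="noindex, follow">'
        '<link rel="canonical" href="https://example.com/page/"></head></html>'
    )
    print(inspect_indexing_tags(sample))
```

A page that unexpectedly reports `noindex`, or a canonical URL pointing somewhere else, is a common reason for it being absent from the search.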

Google indexes top-level pages first. When a new site with a certain structure is to be indexed, the main page is indexed first. After that, without knowing the structure of the site, the search engine indexes whatever is closest to the first slash. Directories two slashes deep are indexed later. This means that even links placed high in the content will not necessarily be indexed first. It is important to design the structure so that important sections are not buried behind a large number of slashes, otherwise Google will treat them as low-level pages.
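To see how deep your important pages sit, you can simply count the path segments in their addresses. This is only a rough sketch of the "number of slashes" idea described above; the function names and URLs are assumptions for illustration, and it does not reproduce how Google actually prioritizes pages.

```python
from urllib.parse import urlparse


def url_depth(url):
    """Count the non-empty path segments: the main page has depth 0."""
    path = urlparse(url).path
    return len([segment for segment in path.split("/") if segment])


def sort_by_depth(urls):
    """Order URLs from the shallowest to the deepest."""
    return sorted(urls, key=url_depth)


if __name__ == "__main__":
    # Hypothetical addresses of a hypothetical site.
    for address in sort_by_depth([
        "https://example.com/blog/2020/post/",
        "https://example.com/",
        "https://example.com/blog/",
    ]):
        print(url_depth(address), address)
```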

Page indexing

When Yandex and Google have already got acquainted with the site and "accepted" it to their search base, the bots will return to the resource to scan new, added materials. The more often and regularly the content is updated, the more closely the spiders will follow it.

They say that the PDS pinger plugin for Yandex search helps with indexing - https://site.yandex.ru/cms-plugins/. To use it, you must first install Yandex search on your site. But I did not notice much benefit from it.

When the resource is well indexed, it is much easier to get individual new pages into the search. Nevertheless, crawling does not always proceed evenly and at the same speed for all html documents updated at the same time. The most visited and promoted categories of the resource always win.

What sources of information do search engines have about urls?

Once upon a time, I attracted a fast robot to a competitor who did not renew the domain, so that it would be lowered in the search results - this did not give any result.

How to check indexing

Checking the visibility of html documents is done differently for Google and Yandex. In general, there is nothing complicated about it. Even a beginner can do it.

Checking in Yandex

The system offers three basic operators to check how many html documents are in the index.

The "site:" operator shows absolutely all the pages of the resource that have already entered the database.

Entered in the search bar like this: site:site

Operator "host:" - allows you to see indexed pages from domains and subdomains within the hosting.

Entered in the search bar as follows: host:site

The "url:" operator shows the specific page being requested.

Entered in the search bar as follows: url:site/obo-mne

Checking indexing with these commands always gives accurate results and is the simplest way to analyze resource visibility.
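If you check many sites, the operator queries can be built automatically. Here is a small sketch; the function names are hypothetical, and the search address `https://yandex.ru/search/?text=` is my assumption about the public search URL, so check it in your browser first.

```python
from urllib.parse import quote_plus


def yandex_operator_queries(domain, path=""):
    """Build the three operator queries for a domain (no space after the colon)."""
    return {
        "site": f"site:{domain}",
        "host": f"host:{domain}",
        "url": f"url:{domain}{path}",
    }


def yandex_search_url(query):
    """Turn a query into a search link (the base address is an assumption)."""
    return "https://yandex.ru/search/?text=" + quote_plus(query)


if __name__ == "__main__":
    # example.com stands in for your own domain.
    for name, query in yandex_operator_queries("example.com", "/obo-mne").items():
        print(name, yandex_search_url(query))
```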

Google verification

Google allows you to check the visibility of a site using only one command of the form site:site.

But Google has one peculiarity: it handles the command differently depending on whether it is entered with or without www. Yandex, on the other hand, makes no such distinction and gives exactly the same results with www and without it.

Checking by operators is the most “old-fashioned” way, but for this purpose I use the RDS Bar browser plugin.

Verification with Webmaster

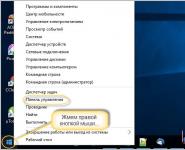

In Google Webmaster and Yandex Webmaster you can also see how many pages are in the search engine's database. To do this, you need to be registered in these systems and add your site to them. You can access them by following the links:

The bottom line is this - just drive in the page addresses, and the service gives you the results:

It does not check very quickly - it will have to wait 3 minutes there, but there are few complaints about the free tool. Just put it in the background window and go about your business, in a few minutes the results will be ready.

Is it possible to speed up indexing?

You can influence how quickly search robots load html documents. To do this, follow these recommendations:

- Increase the number of social signals by encouraging users to share links in their profiles. And you can take tweets from live accounts in Prospero (klout 50+). If you make your twitter whitelist, consider that you have received a powerful weapon to speed up indexing;

- More often add new materials;

- You can start using the cheapest direct queries in your subject;

- Enter the address of a new page in the add-URL forms ("addurilki") immediately after its publication.

High behavioral factors on the site also have a positive effect on the speed of updating pages in the search. Therefore, do not forget about the quality and usefulness of content for people. A site that users really like is sure to be liked by search robots.

In general, everything is very easy in Google - you can add a page to the index within a few minutes by scanning it in the webmaster panel ("Scanning" / "View as Googlebot" / "Add to Index"). In the same way, you can quickly reindex the necessary pages.

I have also heard stories about people who sent URLs through Yandex mail so that they would get into the index faster. In my opinion, this is nonsense.

If the problem persists and none of the previous tips helped, it is time to bring out the heavy artillery.

- Set up Last-Modified headers (so that the robot re-checks only the documents that have really changed since its last visit);

- Remove garbage from the search engine index (this garbage can be found using Comparser);

- Hide all unnecessary/junk documents from the robot;

- Create additional Sitemap.xml files. Robots usually read up to 50,000 pages from one file, so if you have more pages, you need to make more sitemaps;

- Set up the server.
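To make the Last-Modified item more concrete, here is a minimal sketch of the logic a server applies when a robot sends the If-Modified-Since header. The function name is hypothetical and the code is not tied to any particular web server or CMS; it only shows the decision between a full response (200) and "not modified" (304).

```python
from datetime import datetime, timezone
from email.utils import format_datetime, parsedate_to_datetime


def conditional_response(last_modified, if_modified_since=None):
    """Return (status, headers) for a page last changed at `last_modified` (UTC)."""
    headers = {"Last-Modified": format_datetime(last_modified, usegmt=True)}
    if if_modified_since:
        try:
            since = parsedate_to_datetime(if_modified_since)
        except (TypeError, ValueError):
            since = None  # Unparseable header: fall back to a full response.
        if since is not None:
            if since.tzinfo is None:
                since = since.replace(tzinfo=timezone.utc)
            # HTTP dates have one-second precision, so drop microseconds.
            if last_modified.replace(microsecond=0) <= since:
                return 304, headers
    return 200, headers


if __name__ == "__main__":
    changed = datetime(2020, 1, 1, tzinfo=timezone.utc)
    print(conditional_response(changed))
    print(conditional_response(changed, "Wed, 01 Jan 2020 00:00:00 GMT"))
```

With a 304 answer the robot does not download the page body again, which leaves more of its visit for documents that really changed.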