Characteristics of operating systems table. Windows operating system

Depending on the implemented architectural solutions, the characteristics of operating systems are:

· Portability - the ability to run on both CISC and RISC processors.

· Multitasking - using a single processor to run multiple applications.

· Multiprocessing - implies the presence of several processors that can simultaneously execute many threads, one for each processor present in the computer.

· Scalability - the ability to automatically take advantage of the added processors. So, to speed up the application, the operating system can automatically connect additional identical processors.

· Client-server architecture - the connection of a general-purpose single-user workstation (the client) to a general-purpose multi-user server in order to distribute the data processing load between them. The object that sends a message is the client, and the object that receives and responds to the message is the server.

· Extensibility - provided by an open modular architecture that allows you to add new modules to all levels of the operating system.

· Reliability and fault tolerance - characterize the ability to protect the operating system and applications from destruction.

· Compatibility - continued support for applications developed for other operating systems, such as MS DOS, Windows, and OS/2.

· A multi-level security system - protects information and applications from destruction, unauthorized access, and unskilled user actions.

Classification of operating systems

Operating systems can be classified according to various criteria:

· By the number of tasks solved in parallel on the computer, operating systems are divided into:

- single-tasking (for example, MS DOS);

- multitasking (OS/2, UNIX, Windows, Linux).

Multitasking operating systems provide the simultaneous solution of several tasks and manage the allocation of resources they share (processor, RAM, files, and external devices).
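The way a single processor is shared among several tasks can be illustrated with a minimal round-robin time-slicing sketch. The task names, their CPU times, and the quantum are hypothetical values chosen for illustration; real schedulers also take priorities and I/O waits into account.

```python
from collections import deque

def round_robin(bursts, quantum):
    """Simulate round-robin scheduling on one processor.

    bursts: dict mapping a task name to the CPU time it needs.
    quantum: maximum time a task may run before it is preempted.
    Returns the list of (task, start, end) time slices in execution order.
    """
    queue = deque(bursts.items())
    clock = 0
    timeline = []
    while queue:
        name, remaining = queue.popleft()
        run = min(quantum, remaining)
        timeline.append((name, clock, clock + run))
        clock += run
        if remaining > run:
            # Unfinished task goes to the back of the queue.
            queue.append((name, remaining - run))
    return timeline

# Hypothetical workload: three tasks sharing one processor.
slices = round_robin({"editor": 3, "printer": 5, "backup": 2}, quantum=2)
for name, start, end in slices:
    print(f"{start:2d}-{end:2d}  {name}")
```

Each task runs for a short slice in turn, so all three appear to progress at once even though only one of them occupies the processor at any moment.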

· By the number of users working concurrently:

- single user (for example, MS DOS, Windows 3.x);

- multiuser (network Unix, Linux, Windows 2000).

The main difference between multi-user systems and single-user systems is the ability to work in a computer network.

· By type of user interface:

- command interface (for example, MS DOS);

- GUI (for example, Windows).

· By the address bus width of the computers the OS targets:

- 16-bit (MS DOS);

- 32-bit (Windows 2000);

- 64-bit (Windows 2003).
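To give a sense of what these widths mean, the following sketch computes how many bytes can be addressed with a given number of address bits. It assumes byte-addressable memory and that every address bit is usable, which real hardware and operating systems do not always allow.

```python
def addressable_bytes(address_bits):
    """Number of distinct byte addresses for a given address width."""
    return 2 ** address_bits

for bits in (16, 32, 64):
    print(f"{bits}-bit addresses: {addressable_bytes(bits):,} bytes")
```

Under these assumptions a 16-bit address reaches 64 KB, a 32-bit address reaches 4 GB, and a 64-bit address reaches 16 EB.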

In the software and operating systems sector, the leading positions are held by IBM, Microsoft, UNISYS, and Novell.

The MS DOS operating system (Microsoft) appeared in 1981. Today, this operating system is installed on the vast majority of personal computers. Since 1996, MS DOS has been distributed in the form of Windows 95, a 32-bit multitasking and multithreaded operating system with a graphical interface and advanced networking capabilities.

The most traditional OS comparison is carried out according to the following characteristics of the information processing process:

· memory management (maximum addressable space, memory types, technical indicators of memory usage);

· functionality of the auxiliary programs (utilities) included in the operating system;

· availability of disk compression;

· ability to archive files;

· support for multitasking;

· support for network software;

· availability of high-quality documentation;

· conditions and complexity of the installation process.

Network operating systems are sets of programs that provide processing, transmission, and storage of data in a network. A network OS provides users with various network services (file management, e-mail, network management processes, etc.) and supports work in subscriber systems. Network operating systems use a client-server or peer-to-peer architecture. Initially, network operating systems supported only local area networks (LANs); now they have been extended to interconnected groups of local area networks.

An operating system is a program that starts as soon as the computer is turned on. Among all the system programs that computer users have to deal with, operating systems occupy a special place.

The operating system (OS) controls the computer, runs programs, provides data protection, and performs various service functions at the request of the user and of programs. Each program uses the services of the OS and therefore can only run under the control of the OS that provides those services. Thus, the choice of OS is very important, as it determines which programs you will be able to run on your computer. The choice of OS also affects your productivity, the degree of data protection, the hardware required, and so on. However, the choice of operating system also depends on the specifications (configuration) of the computer. The more modern the operating system, the more features it provides and the more visually clear it is, but the greater the demands it places on the computer (processor clock frequency, RAM and disk capacity, and the presence and capacity of additional cards and devices).

The main reason for the need for an OS is that elementary operations for working with computer devices and managing its resources are very low-level operations, so the actions that a user and application programs need consist of several hundred or thousands of such elementary operations.

The operating system hides these complex and unnecessary details from the user and provides him with a convenient interface for work. It performs various support activities such as copying and printing files.

The OS loads all programs into RAM, transfers control to them at the beginning of their work, performs various activities at the request of running programs and releases the RAM occupied by programs when they complete.

The basic input/output system (BIOS, Basic Input/Output System) is located in the computer's read-only memory (ROM). This part of the OS is "embedded" in the PC.

Its purpose is to perform the simplest and most universal OS services associated with I/O. The basic input/output system also contains a self-test that checks the operation of the computer's memory and devices when it is turned on. In addition, the basic input/output system contains the routine that calls the operating system loader.

The OS loader is a very short program found in the first sector of every OS floppy disk. The function of this program is to read two more OS modules into memory, which complete the boot process.

The OS bootloader on the hard disk consists of two parts. The first part is in the first sector of the hard drive; it selects which hard drive partition to continue booting from. The second part is located in the first sector of that partition; it reads the OS modules into memory and transfers control to them.
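The partition selection performed by the first part of the loader can be sketched as follows. The sketch assumes the classic PC master boot record layout (a 512-byte sector with four 16-byte partition entries at offset 446 and the signature bytes 0x55 0xAA at the end), which the text above does not spell out. The function names and the demonstration sector are hypothetical.

```python
import struct

SECTOR_SIZE = 512
PARTITION_TABLE_OFFSET = 446   # four 16-byte entries follow the boot code
BOOT_SIGNATURE = b"\x55\xaa"   # last two bytes of a valid boot sector

def parse_mbr(sector):
    """Return the four partition entries of an MBR as dictionaries."""
    if len(sector) != SECTOR_SIZE or sector[510:512] != BOOT_SIGNATURE:
        raise ValueError("not a valid MBR sector")
    entries = []
    for i in range(4):
        start = PARTITION_TABLE_OFFSET + 16 * i
        raw = sector[start:start + 16]
        # Layout: status(1) CHS start(3) type(1) CHS end(3) first LBA(4) count(4)
        status, ptype, first_lba, count = struct.unpack("<B3xB3xII", raw)
        entries.append({"active": status == 0x80, "type": ptype,
                        "first_lba": first_lba, "sectors": count})
    return entries

def boot_partition(sector):
    """Pick the partition marked active, from which booting would continue."""
    for entry in parse_mbr(sector):
        if entry["active"]:
            return entry
    return None

# Synthetic sector for demonstration: one active partition starting at LBA 63.
demo = bytearray(SECTOR_SIZE)
demo[446:462] = struct.pack("<B3xB3xII", 0x80, 0x06, 63, 204800)
demo[510:512] = BOOT_SIGNATURE
chosen = boot_partition(bytes(demo))
print(chosen)
```

The second part of the loader would then be read from the sector at `first_lba` of the chosen partition.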

The disk files IO.SYS and MSDOS.SYS (they may be named differently, for example, IBMBIO.COM and IBMDOS.COM for PC DOS, or DRBIOS.SYS and DRDOS.SYS for DR DOS; the names change depending on the OS version) are loaded into memory by the OS loader and remain resident in the computer's memory. The IO.SYS file is an extension of the basic I/O system in ROM. The MSDOS.SYS file implements the basic high-level OS services.

The main tasks of the OS are as follows:

- 1. increasing computer throughput (by organizing continuous processing of the flow of tasks with automatic transition from one task to the next and efficient distribution of computer resources among several tasks);

- 2. reducing the system's response time to user requests (the time users wait for a response from the computer);

- 3. simplifying the work of software developers and computer maintenance staff (by providing them with a significant number of programming languages and various service programs).

Operating systems can be classified according to the following indicators:

- 1. number of users: single-user OS (MS-DOS, Windows) and multi-user OS (VM, UNIX);

- 2. access: batch (OS 360), interactive (Windows, UNIX), real-time systems (QNX, Neutrino, RSX);

- 3. number of tasks to be solved: single-tasking (MS-DOS) and multi-tasking operating systems (Windows, UNIX).

The operating system is designed to perform the following main (closely related) functions:

- 1. data management;

- 2. task management (tasks, processes);

- 3. communication with the human operator.

In various operating systems, these functions are implemented on a different scale and with the help of different technical, software, information methods and tools.

Structurally, the OS is a set of programs that control the operation of a computer, identify application programs and data, and handle communication between the machine and the operator. The OS improves the performance of the computing complex through flexible organization of the flow of tasks through the machine, uniform loading of equipment, optimal use of all computer resources, and a standard organization for storing large data arrays in the machine with a variety of ways to access them.

The system software also includes service programs that are designed to check the health of computer units, detect and localize device failures and eliminate their impact on the operation as a whole.

Computer system software is designed to make user programs adaptable to changes in the composition of computer resources. The OS ensures high performance of the computing system through batch processing and multiprogramming modes and through special software tools for labor-intensive input-output operations.

Among the best-known early control programs are the SAGE, SABER, and MERCURE complexes, implemented on second-generation computers. For IBM/360 computers, operating systems were developed that provide batch data processing and real-time operation, as well as support for multi-machine and multi-processor systems.

The first functionally complete OS was OS/360. The development and introduction of the OS made it possible to separate the functions of operators, administrators, programmers, and users, and to increase significantly (by tens and hundreds of times) the performance of computers and the utilization of the hardware. The OS/360/370/375 versions - MFT (multiprogramming with a fixed number of tasks), MVT (multiprogramming with a variable number of tasks), SVS (virtual memory system), SVM (virtual machine system) - successively replaced each other and largely shaped modern ideas about the role of the OS in the overall hierarchy of data and task management in computer data processing.

Early versions of OS/360 were focused on batch processing: the input stream of tasks (on magnetic tape, magnetic disk, or punched cards) was prepared in advance and fed in for continuous processing. Later, OS/360/375 extensions appeared that allowed interactive data processing from user terminals. The latest version (OS SVM) effectively gave the user a "virtual personal computer" with the full power of the IBM/360/375 computing unit.

The resident OS programs permanently occupy an amount of memory set during system configuration. The remaining parts of the OS are loaded as needed from external memory on magnetic disk.

The OS ensures the implementation of the following processes in the computer system:

- 1. task processing;

- 2. system operation in the mode of dialogue and time slicing;

- 3. work in the system in real time as part of multiprocessor and multimachine complexes;

- 4. communication of the operator with the system;

- 5. logging the progress of computational work;

- 6. processing data received via communication channels;

- 7. functioning of input-output devices;

- 8. use of a wide range of debugging tools and program testing;

- 9. planning the passage of tasks in accordance with their priorities;

- 10. record keeping and control over the use of data, programs and computer resources.

The main components of the OS are control and processing programs. Control programs control the operation of the computer system, providing, in turn, automatic change of tasks to maintain continuous operation of the computer when switching from one program to another without operator intervention.

The control program determines the order in which processing programs are executed and provides the set of services they need. Its main functions are sequential or priority execution of each job (task management) and storage, search, and maintenance of data regardless of their organization and storage method (data management).

Task management programs read the input streams of tasks, process them depending on the priority, initiate the simultaneous execution of several tasks; call procedures; keep a system log.

Data management programs provide ways of organizing, identifying, storing, cataloging, and retrieving processed data. These programs manage I/O for data with various organizations, combine records into blocks and split blocks into records, and handle volume and dataset labels.

The failure recovery programs handle interrupts from the monitoring system, log processor and external device failures, generate failure log entries, analyze whether the task can still be completed, and put the system into a standby state if it cannot.

System configuration. An application program in the OS can receive from the OS in the course of its operation the characteristics of a particular implementation of the system in which it operates: the name, version and edition of the OS, the type and technical characteristics of the computer. The OS usually has localization tools that allow you to configure the system for a specific national (local) data representation: representation of decimal fractions, monetary values, dates and times.
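As an illustration of an application querying the characteristics of the system it runs on, the sketch below uses Python's standard `platform` module. The dictionary keys and the function name are chosen here for illustration only, and the values returned depend entirely on the machine where the code runs.

```python
import platform

def system_summary():
    """Collect the OS name, release, version and machine type."""
    info = platform.uname()
    return {
        "os_name": info.system,      # for example "Linux" or "Windows"
        "os_release": info.release,
        "os_version": info.version,
        "machine": info.machine,     # hardware type reported by the OS
    }

summary = system_summary()
for key, value in summary.items():
    print(f"{key}: {value}")
```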


Posted on http://www.allbest.ru/

ROSZHELDOR

Federal State Budgetary Educational Institution

higher professional education

Rostov State Transport University

(FGBOU VPO RGUPS)

Liskinsky College of Railway Transport named after I.V. Kovaleva

(LTZhT - branch of RGUPS)

essay

by discipline

INFORMATICS

Operating systems

Completed by: student of group DK-22

option 18 Oleinikova Victoria

2014



Introduction


An operating system is a program that starts as soon as the computer is turned on. Among all the system programs that computer users have to deal with, operating systems occupy a special place.

The operating system (OS) controls the computer, runs programs, provides data protection, and performs various service functions at the request of the user and of programs. Each program uses the services of the OS and therefore can only run under the control of the OS that provides those services. Thus, the choice of OS is very important, as it determines which programs you will be able to run on your computer. The choice of OS also affects your productivity, the degree of data protection, the hardware required, and so on. However, the choice of operating system also depends on the technical characteristics (configuration) of the computer. The more modern the operating system, the more features it provides and the more visually clear it is, but the greater the demands it places on the computer (processor clock speed, RAM and disk capacity, and the presence and capacity of additional cards and devices).

The main reason for the need for an OS is that elementary operations for working with computer devices and managing its resources are very low-level operations, so the actions that a user and application programs need consist of several hundred or thousands of such elementary operations.

The operating system hides these complex and unnecessary details from the user and provides him with a convenient interface for work. It performs various support activities such as copying and printing files.

The OS loads all programs into RAM, transfers control to them at the beginning of their work, performs various actions at the request of executing programs, and frees the RAM occupied by programs when they complete.

1. The concept of the operating system

The operating system is a complex of system and service software. On the one hand, it relies on the basic computer software contained in the BIOS (basic input/output system); on the other hand, it is itself the foundation for higher-level software, that is, application programs and most service programs. Programs designed to run under a given operating system are called its applications.

An operating system is a program that loads when you turn on your computer. It conducts a dialogue with the user, manages the computer and its resources (RAM, disk space, etc.), and launches other (application) programs. The operating system provides the user and application programs with a convenient way to communicate (an interface) with computer devices.

The operating system has several main functions:

1. The graphical interface is a convenient shell that the user works with.

2. Multitasking - the ability to work with several applications simultaneously or in turn, to exchange data between applications, and to share the software, hardware, network, and other resources of the computing system among several applications.

3. The kernel (command interpreter) is a "translator" from the program language to the language of machine codes.

4. Drivers are specialized programs for managing various devices included in the computer.

5. File system - designed to store data on disks and provide access to them. Data about where a particular file is recorded on the disk is stored in the system area of the disk in special file allocation tables (FAT tables).

6. Bit depth - at present there are 16-bit operating systems (DOS, Windows 3.1, Windows 3.11), 32-bit operating systems (Windows 98, Windows 2000, Windows Me), and 64-bit operating systems (Windows XP, Windows Vista).

In addition to the main (basic) functions, operating systems can provide various additional functions. The specific choice of operating system is determined by the set of provided functions and specific requirements for the workplace. Other features of operating systems may include the following:

· Ability to support the operation of a local computer network without special software;

· Access to basic Internet services by means integrated into the operating system;

· Ability to set up an Internet server using system tools and to maintain and manage it, including remotely over a remote connection;

· Means of protecting data from unauthorized access, viewing, and modification;

· Ability to customize the appearance of the operating system's working environment, including multimedia-related tools;

· Ability for different users to take turns working comfortably on one personal computer while keeping the personal settings of each user's working environment;

· Ability to run computer and operating system maintenance operations automatically, on a specified schedule or under the control of a remote server;

· Ability for people with physical disabilities related to vision, hearing, and other functions to work with the computer.

Figure 1 Integrated tool in Windows operating systems for Internet access

1.1 Purpose and classification of operating systems

Purpose of the OS:

· Organization of the computing process in a computing system;

· Rational distribution of computing resources among the individual tasks being solved;

· Providing users with numerous service tools that facilitate programming and debugging.

The operating system plays the role of a kind of interface between the user and the computing system (CS); that is, the OS provides the user with a virtual computing system. (An interface is a set of hardware and software necessary to connect peripheral devices to a personal computer.) This means that the OS largely shapes the user's idea of the capabilities of the computing system, the convenience of working with it, and its throughput. Different operating systems on the same hardware can give the user different options for organizing a computing process or automated data processing. In the software of a computing system, the operating system occupies a central position, since it plans and controls the entire computing process. Every software component must work under OS control.

The operating system must:

· Be universally recognized and used as a standard system on many computers;

· Work with all computer devices, including those released a long time ago;

· Be able to run a wide variety of programs written by different people at different times;

· Provide facilities for checking, configuring, and maintaining the computer system.

Modern operating systems are multitasking; that is, the user can run several applications at the same time and observe the results of each. This is possible thanks to the design of the OS and the capabilities of modern processors: it is no accident that operating systems are written for the processor, and not vice versa. A modern processor is no longer single-core but dual-core or even quad-core, which increases its performance many times over. The operating system takes advantage of this by distributing processor resources optimally among all running processes.

The main characteristics of the operating system are the stability of its operation and resistance to various threats - external (viruses) and internal (hardware failures and conflicts).

OS classification:

Depending on the processor control algorithm, operating systems are divided into:

· Single-tasking and multitasking;

· Single-user and multi-user;

· Uniprocessor and multiprocessor;

· Local and network.

Operating systems are divided into two classes according to the number of simultaneously executing tasks:

Single-tasking (MS DOS)

Multitasking (OS/2, Unix, Windows)

In single-tasking systems, peripheral device management tools, file management tools, and user communication tools are used. Multitasking operating systems use all the features that are typical for single-tasking, and, in addition, manage the sharing of shared resources: processor, RAM, files, and external devices.

Depending on the areas of use, multitasking operating systems are divided into three types:

Batch Processing Systems (OS EC)

Time-sharing systems (Unix, Linux, Windows)

Real Time Systems (RT11)

Figure 2 Unix Desktop Screenshot

1.2 The composition of the operating system and the purpose of the components

The most important advantage of most operating systems is modularity. This property makes it possible to combine logically related groups of functions in each module. If such a group of functions needs to be replaced or extended, this can be done by replacing or modifying only one module rather than the entire system.

Most operating systems consist of the following main modules: the basic input/output system (BIOS, Basic Input/Output System); the operating system loader (boot record); the OS kernel; device drivers; the command processor; and external commands (files).

The Basic Input/Output System (BIOS) is a set of firmware routines that implement basic low-level (elementary) I/O operations. They are stored in the computer's ROM and are written there when the motherboard is manufactured. This system is, in effect, "embedded" in the computer and is both part of its hardware and part of the operating system.

The first function of the BIOS is to automatically test the main components of the computer when it is turned on. If an error is detected, an appropriate message is displayed on the screen and/or a sound signal is given. Next, the BIOS calls the operating system's bootstrap block located on the disk (this operation is performed immediately after testing is completed). Having loaded this block into RAM, the BIOS transfers control to it, and it, in turn, loads the other OS modules.

Another important BIOS function is interrupt servicing. When certain events occur (pressing a key on the keyboard, clicking the mouse, an error in a program, etc.), one of the standard BIOS routines is called to handle the situation.

The operating system loader is a short program located in the first sector of any boot disk (a floppy disk or a disk containing the operating system). Its function is to read the main OS disk files into memory and transfer further control of the computer to them.

The OS kernel implements the basic services; it is loaded into RAM and stays there all the time.

1.3 Overview of file systems

File system FAT

FAT is the simplest file system supported by Windows NT. The basis of the FAT file system is the file allocation table, which is placed at the very beginning of a volume. Two copies of this table are kept on disk in case one is corrupted. In addition, the file allocation table and the root directory must be stored in a specific location on the disk (so that the location of the boot files can be determined correctly).

A disk formatted with the FAT file system is divided into clusters, the size of which depends on the size of the volume. When a file is created, an entry is created in the directory and the number of the first cluster containing the file's data is recorded. The corresponding entry in the file allocation table either indicates that this is the last cluster of the file or points to the next cluster.
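The cluster chaining described above can be sketched in a few lines. This is a simplified model, not the on-disk format: the table is a Python dictionary, the cluster numbers are made up, and a single end-of-chain marker value is assumed.

```python
END_OF_CHAIN = 0xFFFF  # assumed marker meaning "last cluster of the file"

def cluster_chain(fat, first_cluster):
    """Follow table entries from a file's first cluster to its last."""
    chain = []
    seen = set()
    cluster = first_cluster
    while cluster != END_OF_CHAIN:
        if cluster in seen:
            raise ValueError("corrupted table: cluster chain loops")
        seen.add(cluster)
        chain.append(cluster)
        cluster = fat[cluster]   # entry holds the number of the next cluster
    return chain

# Toy table: a file whose directory entry names cluster 2 as its first cluster.
fat = {2: 5, 5: 3, 3: END_OF_CHAIN}
chain = cluster_chain(fat, 2)
print(chain)
```

The directory entry supplies only the starting cluster; every later cluster is found by consulting the table, which is why a damaged table makes file data unreachable.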

Updating the file allocation table is very important and time consuming. If the file allocation table is not updated regularly, data loss may result. The duration of the operation is explained by the need to move the reading heads to the logical zero track of the disk with each update of the FAT table.

The FAT directory has no defined structure and files are written to the first found free space on disk. In addition, the FAT file system only supports four file attributes: System, Hidden, Read-Only, and Archive.

File system HPFS

The HPFS file system was first used in the OS/2 1.2 operating system to provide access to the large disks that were appearing on the market at the time. In addition, there was a need to extend the existing naming system and to improve organization and security to meet the growing needs of the network server market. The HPFS file system retains the FAT directory structure and adds sorting of files by name. A file name can contain up to 254 double-byte characters. A file consists of "data" and special attributes, which makes it possible to support other types of file names and to improve security. In addition, the smallest unit of data storage is now the physical sector (512 bytes), which reduces wasted disk space.

Entries in the directory of the HPFS file system contain more information than in FAT. Along with file attributes, information about creation and modification, as well as the date and time of access, is stored here. The entries in the HPFS directory do not point to the first cluster of the file, but to the FNODE. FNODE can contain file data, pointers to file data, or other structures pointing to file data.

HPFS tries to place file data in contiguous sectors whenever possible. This leads to an increase in the speed of sequential processing of the file.

HPFS divides the disk into blocks of 8 MB each and always tries to write a file within a single block. For each block, 2 KB is reserved for the allocation table, which records which sectors within the block are in use and which are free. Dividing the disk into blocks improves performance: to determine where to save a file, the disk head does not have to return to the logical beginning of the disk (usually cylinder zero), only to the allocation table of the nearest block.
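The figures above fit together arithmetically, as the short check below shows. It assumes binary units (1 MB = 1024 × 1024 bytes, 1 KB = 1024 bytes) and the 512-byte sector mentioned earlier. The conclusion that the table holds one bit per sector is an inference from the numbers, not something the text states.

```python
SECTOR_BYTES = 512
BLOCK_BYTES = 8 * 1024 * 1024   # one 8 MB block
TABLE_BYTES = 2 * 1024          # 2 KB allocation table per block

sectors_per_block = BLOCK_BYTES // SECTOR_BYTES
bits_in_table = TABLE_BYTES * 8

print("sectors per block:", sectors_per_block)
print("bits in the table:", bits_in_table)
```

Both values come out equal, so a 2 KB table is exactly large enough to mark each sector of an 8 MB block as used or free with a single bit.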

File system NTFS

From the user's point of view, the NTFS file system organizes files into directories and sorts them in the same way as HPFS. However, unlike FAT and HPFS, there are no special objects on the disk and there is no dependence on the features of the installed hardware (for example, a 512-byte sector). In addition, there are no special data stores on the disk (FAT tables and HPFS superblocks).

The goals of the NTFS file system are the following:

· Delivering the reliability that is essential for high-performance systems and file servers;

· Providing the platform with additional functionality;

· Support for POSIX requirements;

· Elimination of the restrictions specific to the FAT and HPFS file systems.

Figure 3 Structure of the FAT file system

2. Characteristics of modern operating systems

Year after year, the structure and capabilities of operating systems evolve. Recently, new operating systems and new versions of already existing operating systems have included some structural elements that have made great changes in the nature of these systems. Modern operating systems meet the requirements of constantly evolving hardware and software. They are able to manage faster multiprocessor systems, faster networking devices, and an ever-increasing variety of storage devices. Of the applications that have influenced the design of operating systems, multimedia applications, Internet access tools, and the client / server model should be noted.

The steady growth of requirements for operating systems leads not only to improvements in their architecture, but also to the emergence of new ways of organizing them. A wide variety of approaches and building blocks have been tried in experimental and commercial operating systems, most of which can be grouped into the following categories.

· Microkernel architecture.

· Multithreading.

· Symmetric multiprocessing.

· Distributed operating systems.

· Object-oriented design.

A distinctive feature of most operating systems today is a large monolithic kernel. The operating system kernel provides most of the system's capabilities, including scheduling, file system handling, network functions, the operation of various device drivers, memory management, and many others. Typically, a monolithic kernel is implemented as a single process whose elements all share the same address space. In the microkernel architecture, the kernel is assigned only a few of the most important functions: working with address spaces, interprocess communication (IPC), and basic scheduling. Other operating system services are provided by processes sometimes referred to as servers. These processes run in user mode, and the microkernel treats them just like other applications.

This approach allows you to divide the task of operating system development into kernel development and server development. Servers can be customized for specific application or environment requirements.

Allocation of the microkernel in the structure of the system simplifies the implementation of the system, ensures its flexibility, and also fits well into a distributed environment.

Multithreading is a technology in which the process executing an application is divided into several simultaneously executing threads. The main differences between a thread and a process are as follows.

Thread: A dispatchable unit of work that includes a processor context (which includes the contents of the program counter and stack pointer), as well as its own stack area (for organizing subroutine calls and storing local data). The instructions of a thread are executed sequentially; a thread can be interrupted when the processor switches to another thread.

Process: A collection of one or more threads, and the system resources associated with those threads (such as a region of memory containing code and data, open files, various devices). This concept is very close to the concept of a running program. By splitting the application into multiple threads, the programmer gets all the benefits of the application's modularity and the ability to manage application-related temporal events.

Multithreading is very useful for applications that perform several independent tasks that do not require sequential execution. An example of such an application is a database server that accepts and processes multiple client requests at the same time. If multiple threads are being processed within the same process, switching between different threads has less CPU overhead than switching between different processes. In addition, threads are useful in structuring the processes that are part of the operating system kernel, as described in later chapters.
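The database-server example can be illustrated with a minimal sketch in Python. The names serve and handle_request are hypothetical, and squaring a number merely stands in for real request processing. The point shown is that several threads of one process work on independent requests while sharing the same memory (the results dictionary).

```python
import threading
from concurrent.futures import ThreadPoolExecutor


def serve(requests, workers=4):
    """Process independent requests with several threads of one process."""
    results = {}                 # shared by all threads of this process
    lock = threading.Lock()      # guards updates to the shared dictionary

    def handle_request(request_id):
        # Hypothetical handler: squaring stands in for real query processing.
        answer = request_id ** 2
        with lock:
            results[request_id] = answer

    with ThreadPoolExecutor(max_workers=workers) as pool:
        # list() waits for every task and re-raises any worker exception
        list(pool.map(handle_request, requests))
    return results


if __name__ == "__main__":
    print(serve([1, 2, 3, 4, 5]))
```

Because the threads belong to one process, no data has to be copied between them; the lock is the price paid for sharing.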

Until recently, virtually all single-user personal computers and workstations contained a single general-purpose microprocessor. As a result of ever-increasing performance requirements and decreasing costs of microprocessors, manufacturers have shifted to producing computers with multiple processors.

Symmetric multiprocessing (SMP) technology is used to improve efficiency and reliability.

This term refers to the hardware architecture of a computer, as well as the way the operating system behaves according to that architectural feature. Symmetric multiprocessing can be defined as a standalone computer system with the following characteristics.

The system has multiple processors.

These processors, connected to each other by a communication bus or some other circuitry, share the same main memory and the same I/O devices.

All processors can perform the same functions (hence the name symmetrical processing).

An operating system running on a system with symmetric multiprocessing distributes processes or threads among all processors. Multiprocessor systems have several potential advantages over uniprocessor systems, including the following.

Performance. If a job that a computer needs to run can be arranged so that parts of it run in parallel, performance will be better than on a single-processor system with the same type of processor. This is illustrated in Figure 4. In multitasking mode on one processor, only one process can be running at any given moment, while the other processes wait for their turn. In a multiprocessor system, multiple processes can run simultaneously, each on a separate processor.

Reliability. With symmetric multiprocessing, the failure of one of the processors will not bring the machine to a halt, because all processors can perform the same functions. After such a failure, the system will continue to operate, although its performance will decrease slightly.

Incremental growth. By adding processors to the system, the user can increase its performance.

Scalability. Manufacturers can offer their products in a variety of price and performance configurations designed to work with different numbers of processors.

It is important to note that the benefits listed above are potential rather than guaranteed. To properly realize the potential contained in multiprocessor computing systems, the operating system must provide an adequate set of tools and capabilities.

Figure 4 Multitasking and multiprocessing

It is common to see multithreading and multiprocessing discussed together, but the two concepts are independent. Multithreading is a useful concept for structuring application and kernel processes, even on a single processor machine. On the other hand, a multi-processor system can have advantages over a single-processor system even if the processes are not split into multiple threads, because it is possible to run multiple processes at the same time on such a system. However, both of these possibilities are in good agreement with each other, and their joint use can have a noticeable effect.

An attractive feature of multiprocessor systems is that the presence of several processors is transparent to the user: the operating system is responsible for distributing threads among the processors and for synchronizing the different processes. This book discusses the scheduling and synchronization mechanisms that make all processes and processors appear to the user as a single system. A different, higher-level task is presenting a cluster of several separate computers as a single system. In this case, we are dealing with a set of computers, each with its own primary and secondary memory and its own I/O modules. A distributed operating system creates the appearance of a single space of primary and secondary memory, as well as a single file system. Although the popularity of clusters is steadily growing and more and more clustered products appear on the market, modern distributed operating systems still lag behind single- and multiprocessor systems in development. Such systems are covered in the sixth part of the book.

One of the latest innovations in the design of operating systems has been the use of object-oriented technologies. Object-oriented design brings discipline to the process of adding modules to a small main kernel. At the operating system level, an object-oriented structure allows programmers to customize the operating system without violating its integrity. In addition, this approach facilitates the development of distributed tools and full-fledged distributed operating systems.

Conclusion

Windows is the most common operating system, and for most users it is the most suitable because of its simplicity, good interface, acceptable performance, and the huge number of application programs available for it.

I have had the opportunity to work with Microsoft operating systems from Windows 2000 to Windows 8. In my opinion, the most successful of them is Windows 7, which has better protection than Windows XP, a more thoughtful interface, and many other small details that make it more attractive. The question naturally arises: what about Windows 8? It is a newer OS, but its interface is better suited to mobile devices with touch screens, which is why it is not yet as popular. As far as I know, however, Microsoft has released an update, Windows 8.1, which partly returns to the desktop that users are familiar with.



To determine operational characteristics, a decision matrix is compiled first. It is based on a study of a cohort consisting of two groups: healthy individuals and patients with an accurately verified (reference) diagnosis of the disease (Table 9.1).

Table 9.1.

Decision matrix for calculating the operational characteristics of diagnostic methods

The operational characteristics of the diagnostic method include:

1. sensitivity (Se),

2. specificity (Sp),

3. accuracy (Ac), or diagnostic efficiency,

4. positive predictive value (+PV),

5. negative predictive value (-PV).

Some of the above criteria for the information content of radiation diagnostics are not constant. They depend on the prevalence of the disease.

Prevalence (Ps) is the probability of a certain disease, or more simply, its frequency of occurrence among the studied group of people (cohort) or the population as a whole. Prevalence should be distinguished from incidence (In), which is the probability of a new case of the disease in the group of people under consideration over a certain period of time, usually one year.

Sensitivity (Se) is the proportion of correct positive test results among all patients with the disease. It is determined by the formula:

$$Se = \frac{TP}{D^{+}}$$

where Se is sensitivity, TP is the number of true positive cases, and D+ is the number of patients with the disease.

Sensitivity shows a priori the proportion of patients in whom the study will give a positive result. The higher the sensitivity of a test, the more often the disease will be detected with its help and, therefore, the more effective it is. At the same time, if such a highly sensitive test is negative, the presence of the disease is unlikely. Highly sensitive tests should therefore be used to exclude diseases. Because of this, they are often referred to as identifiers and are used to narrow the range of suspected diseases. It should also be noted that a highly sensitive test gives many "false alarms", which requires additional financial costs for further examination.

Specificity (Sp) is the proportion of correct negative test results among healthy individuals. This indicator is determined by the formula:

$$Sp = \frac{TN}{D^{-}}$$

where Sp is specificity, TN is the number of true negative cases, and D- is the number of healthy individuals.

Having determined the specificity, one can assume a priori the proportion of healthy individuals in whom the study will give a negative result. The higher the specificity of the method, the more reliably the disease is confirmed with its help and, therefore, the more effective it is. Highly specific tests are called discriminators in diagnostics. Highly specific methods are effective at the second stage of diagnosis, when the range of suspected diseases has been narrowed and the presence of the disease must be proved with great certainty. The drawback of a highly specific test is that its use is accompanied by a very significant number of missed diseases.

A very important practical conclusion follows from the foregoing, which is that in medical diagnostics, a test is desirable that would be a priori both highly specific and highly sensitive. However, in reality, this cannot be achieved, since an increase in the sensitivity of a test will inevitably be accompanied by a loss of its specificity, and, conversely, an increase in the specificity of a test is associated with a decrease in its sensitivity. The conclusion follows from this: in order to create an optimal diagnostic system, it is necessary to find a compromise between indicators of sensitivity and specificity, in which the financial costs of the survey will optimally reflect the balance between the risks of "false alarms" and missing diseases.

Accuracy (Ac), or the informative value of a diagnostic test, is the proportion of correct test results among all examined individuals. It is determined by the formula:

$$Ac = \frac{TP + TN}{D^{+} + D^{-}}$$

where Ac is accuracy, TP is the number of true positive decisions, TN is the number of true negative decisions, D+ is the number of all patients with the disease, and D- is the number of all healthy individuals.

Accuracy, therefore, reflects how many correct answers the test produced.

For a correct understanding of the diagnostic effectiveness of methods, the criteria of a posteriori probability play an important role - the predictability of positive and negative results. It is these criteria that show what is the probability of a disease (or its absence) with a known result of the study. It is easy to see that the a posteriori are more important than the a priori.

The positive predictive value (+PV) is the proportion of true positive cases among all positive test results. This indicator is determined by the formula:

$$+PV = \frac{TP}{TP + FP}$$

where +PV is the positive predictive value, TP is the number of true positive cases, and FP is the number of false positive cases.

The predictive value of a positive result, therefore, directly indicates how likely the disease is with positive results of a diagnostic study.

The negative predictive value (-PV) is the proportion of true negative cases among all negative test results. The criterion is determined by the formula:

$$-PV = \frac{TN}{TN + FN}$$

where -PV is the negative predictive value, TN is the number of true negative cases, and FN is the number of false negative cases.

This indicator, therefore, shows how likely it is that the patient is healthy if the results of the radiological examination are negative.

Let us explain the methodology for calculating the operational characteristics of a diagnostic test using the following example.

Suppose a new method of digital fluorography is being developed, and its informativeness in the diagnosis of lung disease (here, tuberculosis) must be assessed. For this purpose, patients with an impeccably and accurately established diagnosis of this disease are selected, together with a group of healthy individuals. Let us assume that 100 people were selected for each group, i.e. two cohorts of observations were compiled. In the first group, the tuberculosis patients, the fluorographic test was positive in 88 people and negative in 12. In the second group, 96 people were recognized as healthy, while 4 were suspected of having tuberculosis and were sent for further examination. Based on the data obtained, a decision matrix is compiled (Table 9.2).

Table 9.2

Distribution of patients according to their disease and test results

From the data presented in the table, the diagnostic information content of the method can be calculated, i.e. its sensitivity (Se), specificity (Sp), accuracy (Ac), and positive (+PV) and negative (-PV) predictive values.

Thus, the operational characteristics of this method will be as follows: sensitivity - 88%, specificity - 96%, accuracy - 92%, positive predictive value - 96%, negative predictive value - 89%.
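The calculation can be sketched in a few lines of Python. The function name diagnostic_metrics is hypothetical, and the counts passed to it (88 true positives, 12 false negatives, 96 true negatives, 4 false positives) are the ones that reproduce the percentages quoted above.

```python
def diagnostic_metrics(tp, fn, tn, fp):
    """Operational characteristics computed from a 2x2 decision matrix."""
    d_pos = tp + fn          # D+: everyone who actually has the disease
    d_neg = tn + fp          # D-: everyone who is actually healthy
    return {
        "Se": tp / d_pos,                      # sensitivity
        "Sp": tn / d_neg,                      # specificity
        "Ac": (tp + tn) / (d_pos + d_neg),     # accuracy
        "+PV": tp / (tp + fp),                 # positive predictive value
        "-PV": tn / (tn + fn),                 # negative predictive value
    }


if __name__ == "__main__":
    # Counts assumed so that the results match the figures in the text.
    for name, value in diagnostic_metrics(tp=88, fn=12, tn=96, fp=4).items():
        print(f"{name}: {value:.0%}")
```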

While operational characteristics such as sensitivity, specificity, and accuracy do not depend significantly on the prevalence of the disease, the predictive value of results, both positive and negative, is directly related to prevalence. The higher the prevalence of the disease, the higher the predictive value of a positive result and the lower the predictive value of a negative one. Indeed, it is well known that a doctor working in a specialized hospital overdiagnoses more often than the same doctor working in a general polyclinic. Naturally, it is assumed that the qualifications of both specialists are equivalent.
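The dependence on prevalence can be made concrete with a short sketch. It assumes that sensitivity and specificity stay fixed while prevalence changes; the function name predictive_values and the three prevalence levels are illustrative choices, not values from the text.

```python
def predictive_values(se, sp, prevalence):
    """Return (+PV, -PV) for a test applied at a given disease prevalence."""
    tp = se * prevalence                # sick and test positive
    fn = (1 - se) * prevalence          # sick but test negative
    tn = sp * (1 - prevalence)          # healthy and test negative
    fp = (1 - sp) * (1 - prevalence)    # healthy but test positive
    return tp / (tp + fp), tn / (tn + fn)


if __name__ == "__main__":
    # Illustrative prevalence levels: screening, polyclinic, specialized hospital.
    for p in (0.01, 0.10, 0.50):
        ppv, npv = predictive_values(se=0.88, sp=0.96, prevalence=p)
        print(f"prevalence {p:.0%}: +PV = {ppv:.1%}, -PV = {npv:.1%}")
```

With the same test, the positive predictive value rises and the negative predictive value falls as prevalence grows, which is the effect described above.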

There is a mutual influence among the characteristics of radiological tests. The higher the sensitivity of a radiological method, the higher the predictive value of its negative result. The predictive value of a positive result of an X-ray study depends mainly on its specificity. Methods with low specificity produce a large number of false positive decisions, which reduces the predictive value of positive results of a radiological examination.

The above criteria for diagnostic informativeness are based on the principle of dichotomous decisions: "yes" or "no", "norm" or "pathology". However, it is well known that in practical work a doctor cannot always classify the data received according to such a scheme. In some cases, the specialist may reach other conclusions, such as "the disease is most likely present" or "the disease is most likely absent". Such nuances in medical conclusions are reflected by another characteristic of informativeness: the likelihood ratio.

The likelihood ratio of a positive result (+Lr) shows how many times the probability of obtaining a positive result is higher in patients than in healthy people. Correspondingly, the likelihood ratio of a negative result (-Lr) shows how many times the probability of obtaining a negative result is higher in healthy individuals than in patients. Following these definitions and the notation of the table above, the criteria are determined by the formulas:

$$+Lr = \frac{Se}{1 - Sp}, \qquad -Lr = \frac{Sp}{1 - Se}$$

In medical practice, it is often necessary to use several diagnostic methods. The use of several radiation studies can be performed in two ways: in parallel and in series.

Tests are often used in parallel in the diagnosis of emergency conditions, i.e. in cases where the most comprehensive diagnostic procedures must be carried out in a short time. Parallel application of tests gives greater sensitivity and, consequently, a higher predictive value of a negative result. However, the specificity and the predictive value of a positive result are reduced.

Sequential application of tests is used when clarifying the diagnosis, to detail the patient's condition and the nature of the pathological process. With the sequential use of diagnostic tests, the sensitivity and the predictive value of negative results decrease, but the specificity and the predictive value of a positive result increase.
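A rough sketch of why the two strategies move sensitivity and specificity in opposite directions is given below. It rests on assumptions that the text does not state: the two tests are taken to err independently of each other, the parallel result counts as positive if either test is positive, and the sequential (one after another) result counts as positive only if both are. The function names and the sample values are hypothetical.

```python
def parallel(se1, sp1, se2, sp2):
    """Combined (Se, Sp) when a positive result on EITHER test counts."""
    se = 1 - (1 - se1) * (1 - se2)   # missed only if both tests miss
    sp = sp1 * sp2                   # negative only if both are negative
    return se, sp


def sequential(se1, sp1, se2, sp2):
    """Combined (Se, Sp) when a positive result needs BOTH tests positive."""
    se = se1 * se2                   # detected only if both tests detect
    sp = 1 - (1 - sp1) * (1 - sp2)   # false alarm only if both tests err
    return se, sp


if __name__ == "__main__":
    a = (0.80, 0.90)   # hypothetical test A: (Se, Sp)
    b = (0.70, 0.85)   # hypothetical test B: (Se, Sp)
    print("parallel:  ", parallel(*a, *b))
    print("sequential:", sequential(*a, *b))
```

Under these assumptions, the parallel scheme is more sensitive and less specific than either test alone, and the sequential scheme is the reverse.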

Thus, a combination of various research methods, a change in the order of their execution change the totality of the operational characteristics of each test separately and the overall predictive value of their results. An important conclusion of evidence-based medicine follows from the foregoing: the prognostic characteristics of any test cannot be automatically transferred to all medical institutions without taking into account the prevalence and a number of other circumstances.

When assessing the diagnostic effectiveness of a research method, one usually indicates the total number of erroneous conclusions: the fewer of them, the more effective the method. However, as already noted, it is unrealistic to reduce the numbers of false positive and false negative errors simultaneously, since they are interconnected. In addition, it is generally accepted that errors of the first type (false positives) are not as dangerous as errors of the second type (false negatives). This is especially true for the detection of infectious and oncological diseases: missing the disease is many times more dangerous than diagnosing it in a healthy person.

In cases where the results of a diagnostic study are quantified, they are classified into normal and pathological conditionally. Part of the test values taken as the norm will be observed in patients, and, conversely, in the pathology zone there will be some changes in healthy people. This is understandable: after all, the boundary between health and the initial stage of the disease is always conditional. And yet, in practical work, analyzing the digital indicators of a diagnostic study, the doctor is forced to make alternative decisions: to attribute this patient to a group of healthy or sick people. In doing so, he uses the separating value of the applied test.

Changing the boundary between the norm and pathology is always accompanied by a change in the operational characteristics of the method. If more stringent requirements are imposed on the method, i.e. the border between the norm and pathology is established at high test values, the number of false negative conclusions (missing diseases) increases, which leads to an increase in the specificity of the test, but at the same time to a decrease in its sensitivity. If it is advisable to soften the requirements for the test, the border between the norm and pathology is shifted towards normal values, which is accompanied by an increase in the number of false positive conclusions (false alarms) and at the same time a decrease in the number of false negatives (missing diseases). This increases the sensitivity of the method, but reduces its specificity.
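The effect of moving the boundary can be sketched as follows. The readings in SICK and HEALTHY are invented purely for illustration, the function name is hypothetical, and it is assumed that higher test values indicate pathology.

```python
# Invented quantitative test readings, for illustration only.
SICK = [5.8, 6.4, 7.1, 7.9, 8.5, 9.2]
HEALTHY = [4.1, 4.8, 5.2, 5.9, 6.3, 7.0]


def se_sp_at_cutoff(sick, healthy, cutoff):
    """Sensitivity and specificity when values >= cutoff count as pathology."""
    tp = sum(v >= cutoff for v in sick)       # sick, correctly flagged
    tn = sum(v < cutoff for v in healthy)     # healthy, correctly cleared
    return tp / len(sick), tn / len(healthy)


if __name__ == "__main__":
    for cutoff in (5.0, 6.0, 7.0, 8.0):
        se, sp = se_sp_at_cutoff(SICK, HEALTHY, cutoff)
        print(f"cutoff {cutoff}: Se = {se:.2f}, Sp = {sp:.2f}")
```

Raising the cutoff lowers sensitivity and raises specificity; lowering it does the opposite.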

Thus, when conducting diagnostic studies and evaluating their results quantitatively, the doctor is always in a situation of choice: either he sacrifices sensitivity in order to increase specificity, or, on the contrary, he prefers specificity at the expense of sensitivity reduction. How to act correctly in each specific case depends on many factors: the social significance of the disease, its nature, the patient's condition and, no less important, the psychological characteristics of the doctor's personality.

The most important conclusion for modern medical diagnostics follows from the foregoing: however perfect the mathematical apparatus or the technical means, the results of a quantitative mathematical method always have limited, applied value. They are subordinate to the doctor's logical thinking and must be correlated with the specific clinical and social situation.

The theory of evidence-based medicine has shown that dividing groups of patients by state of health into normal and pathological is conditional. It depends on the point separating these states, which in turn depends on the subjective qualities of the researcher (determination or caution) as well as on other external and internal prerequisites. Fig. 9.2 shows the coordinate system that reflects decision-making in medicine. The ordinate is an indicator of morbidity, and the abscissa is the axis of diagnostic decisions. It is noteworthy that the Poisson distribution curves representing the norm and the pathology overlap. This forms a graphical distribution of correct and erroneous decisions in diagnostics, both positive and negative: exact hits, omissions, and false alarms.

Fig. 9.2. Relationship between test results and decision criteria. PI - true positive results, TR - true negative, LP - false positive, LO - false negative

Point X on the decision axis is the point at which outcomes are divided into positive and negative. To the left of this point are correct negative decisions and missed diseases; to the right are correct positive decisions and false alarms. The relationship among these indicators gives a graphical representation of the operational characteristics of the research method. The characterological features of the doctor's personality are superimposed on this picture. If the doctor is cautious, the decision point shifts to the left; if decisive, to the right. The relationship among the operational characteristics of the applied diagnostic test changes accordingly. The interval d denotes the value of the disease recognition criterion.

An operating system (OS) is a set of programs that manages computer resources and the processes that use those resources in computation. A process is a sequence of actions prescribed by a program. A resource is any logical or hardware component of the computer. The main resources are CPU time and RAM. Resources may belong to one or more external computers that the operating system accesses over a computer network.

Resource management consists of two functions: simplifying access to a resource and distributing resources among the processes competing for them. To solve the first problem, operating systems support user and programming interfaces. To solve the second, they use various algorithms for managing virtual memory and the processor.

Operating systems are characterized by the following main features:

the number of users simultaneously served by the system (single-user and multi-user);

the number of simultaneously running processes (single-tasking and multitasking);

the type of computing system used (uniprocessor, multiprocessor, network, distributed).

Example. The Windows 98 operating system is multitasking, Linux is multi-user, and MS-DOS is single-tasking and therefore single-user. The Windows NT and Linux operating systems can support multiprocessor computers. Novell NetWare is a network operating system; Windows NT and Linux also have built-in networking tools.

User and program interfaces. To simplify access to computer resources, operating systems support user and program interfaces. The user interface is a set of commands and services that make it easier for the user to work with the computer. A programming interface is a set of procedures that make it easier for a programmer to control a computer.

Fig. 1. Operating system interfaces

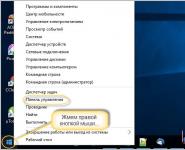

Example. Windows provides the user with a graphical user interface (GUI), which from the user's point of view is a set of rules for controlling the computer visually. In addition to the main graphical interface, the user is also given a command interface, that is, a set of commands of a certain format. For this purpose, the system menu contains the item "Run". The set of system functions in Windows is called the API (Application Programming Interface). It contains more than a thousand procedures for solving various system tasks. The Linux operating system also offers both ways of controlling the computer, but commands are generally preferred.

Processor time and memory organization. To organize a multitasking mode, the OS must somehow distribute the processor time between simultaneously running programs. The so-called preemptive multitasking mode is usually used. In preemptive mode, each program runs continuously for a strictly defined period of time (time slice), after which the processor switches to another program. Since the time quantum is very small, with sufficient processor performance, the illusion of simultaneous operation of all programs is created.
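The time-slice mechanism can be sketched as a small simulation. It assumes the simplest possible policy, in which programs take turns in a fixed circular order (round-robin); real schedulers also take priorities into account. The function name, program names, and run times are hypothetical.

```python
from collections import deque


def round_robin(run_times, quantum):
    """Simulate preemptive multitasking with a fixed time slice.

    run_times maps a program name to the total time it needs.
    Returns the order of execution as (program, time actually run) slices.
    """
    queue = deque(run_times.items())
    trace = []
    while queue:
        name, remaining = queue.popleft()
        run = min(quantum, remaining)       # run for at most one time slice
        trace.append((name, run))
        if remaining > run:
            # Preempted: the program returns to the back of the queue.
            queue.append((name, remaining - run))
    return trace


if __name__ == "__main__":
    for name, run in round_robin({"A": 5, "B": 2, "C": 4}, quantum=2):
        print(f"{name} runs for {run}")
```

With a small enough quantum, every program makes progress within a short interval, which produces the illusion of simultaneous execution.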

One of the main tasks of the operating system is memory management. When main memory runs low, data that is not currently in use is written to a special paging (swap) file. The memory represented by the swap file is called external page memory. The combination of main and external page memory is called virtual memory. To the programmer, however, virtual memory looks like a single entity, that is, it is treated as a single unstructured array of bytes. In this case, we say that linear memory addressing is used.

Example. Windows and Linux operating systems use linear addressing virtual memory. The MS-DOS operating system used non-linear addressing of main memory. The main memory had a complex structure that had to be taken into account when programming. Swap files were not supported by MS-DOS.

Operating system structure. Modern operating systems, as a rule, have a multi-level structure. The kernel of the operating system works directly with the hardware. The kernel is a program or a set of related programs that use the hardware features of the computer. Thus, the kernel is the machine-dependent part of the operating system. The kernel defines the programming interface. On the second level are the standard programs of the operating system and the shell, which work with the kernel and provide the user interface. Designers try to make second-level programs machine-independent. Ideally, replacing the kernel is equivalent to changing the version of the operating system.

Fig. 2. Levels of the Linux operating system

File system. All data is stored in the external memory of the computer in the form of files. Files need to be managed: created, deleted, copied, modified, and so on. The OS provides the tools for this through its user and programming interfaces. The way files are organized and managed is called a file system. The file system determines, for example, which characters can be used in a file name, the maximum file size, the name of the root directory, and so on. The way files are organized affects the speed of access to the desired file, the security of file storage, and so on.

The same OS can work simultaneously with several file systems. As a rule, the functions of the file system are implemented by means of the operating system kernel.

Example. There are several types of file systems used for PCs:

FAT16 - used in Windows 95, OS/2, MS-DOS;

FAT32 and VFAT - used in Windows 95;

NTFS - used in Windows NT;

HPFS - used in OS/2;

Linux Native, Linux Swap - used in Linux OS.

The FAT file system is the simplest. The name of the root directory always has the form A:\, B:\, C:\, etc. The full file name consists of three parts: the path, the name itself, and the extension. The path is the name of the directory where the file is located, including the name of the logical device. The extension indicates the file type. For example, in the full name C:\Windows\System\gdi.exe, the path is C:\Windows\System\, the extension is exe, and the name itself is gdi. According to the FAT rules, the name itself can contain from 1 to 8 characters, and the extension, separated from the name by a dot, can contain up to 3 characters. Uppercase and lowercase letters are not distinguished in file names. The system stores information about the size of the file and the date it was created.
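The decomposition of a full file name can be sketched in Python with the standard ntpath module, which applies Windows path rules on any platform. The helper names split_fat_name and is_valid_8_3 are hypothetical, and the check covers only the length limits mentioned above, not the set of permitted characters.

```python
import ntpath  # Windows-style path handling, available on every platform


def split_fat_name(full_name):
    """Split a full file name into path, name itself, and extension."""
    drive, rest = ntpath.splitdrive(full_name)   # e.g. "C:" and the remainder
    directory, filename = ntpath.split(rest)     # directory part and file part
    name, ext = ntpath.splitext(filename)        # name and ".ext"
    if not directory.endswith("\\"):
        directory += "\\"                        # show the path with a trailing \
    return {
        "path": drive + directory,
        "name": name,
        "extension": ext.lstrip("."),
    }


def is_valid_8_3(name, extension):
    """Length check only: 1 to 8 characters for the name, up to 3 for the extension."""
    return 1 <= len(name) <= 8 and len(extension) <= 3


if __name__ == "__main__":
    parts = split_fat_name(r"C:\Windows\System\gdi.exe")
    print(parts, is_valid_8_3(parts["name"], parts["extension"]))
```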

In terms of data organization, VFAT resembles FAT. However, it allows you to use long file names: names up to 255 characters, full names up to 260. The system also allows you to store the date of the last access to the file, which creates additional opportunities for fighting viruses.

The file system can be implemented as a driver through which, via the operating system, all programs that read or write information on external devices communicate. The file system may include mechanisms for protecting stored information. For example, the NTFS file system has tools for automatically correcting errors and replacing bad sectors. A special mechanism monitors and records all actions performed on magnetic disks, so in the event of a failure the integrity of the information is restored automatically. In addition, the file system may have means of protecting information from unauthorized access.

The client-server model. An important feature of modern operating systems is that the interaction between an application program and the OS is based on the client-server model. All requests from a user program (the client) to the OS are handled by a special program (the server). The mechanism used resembles a remote procedure call, which makes it easy to move from interaction between processes within one computer to a distributed system.
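A minimal sketch of this interaction, under simplifying assumptions: the client and the server are two threads in one process, messages travel through a queue, and the service names "upper" and "length" are invented for illustration. Error handling for unknown requests is omitted.

```python
import queue
import threading


def server(requests):
    """Server loop: receive a message, perform the service, send a reply."""
    services = {"upper": str.upper, "length": len}  # hypothetical services
    while True:
        message = requests.get()
        if message is None:          # shutdown signal
            break
        name, argument, reply_box = message
        reply_box.put(services[name](argument))


def call(requests, name, argument):
    """Client stub: looks like a local call, but actually sends a message."""
    reply_box = queue.Queue(maxsize=1)
    requests.put((name, argument, reply_box))
    return reply_box.get()           # wait for the server's answer


requests = queue.Queue()
worker = threading.Thread(target=server, args=(requests,))
worker.start()
result_upper = call(requests, "upper", "hello")
result_length = call(requests, "length", "hello")
requests.put(None)                   # ask the server to stop
worker.join()
```

Because the client only ever sees the call function, the queue could be replaced by a network connection without changing the client code, which is the property the text attributes to the remote procedure call mechanism.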

Plug and play technology. Plug and play (PnP) is a method of interaction between the OS and external devices. The operating system polls all peripheral devices and must receive a specific response from each one, from which it determines which device is connected and which driver it needs to operate normally. The purpose of this technology is to simplify the connection of new external devices: the user should be spared the complicated work of configuring an external device, which requires a high level of skill.
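The polling step can be sketched roughly as follows. The Device class, the identifiers and the driver table are all hypothetical; a real OS reads identifiers from the bus hardware rather than from Python objects.

```python
# Hypothetical table that maps device identifiers to driver names.
DRIVER_TABLE = {
    "PRN-0001": "printer_driver",
    "MOU-0002": "mouse_driver",
}


class Device:
    """Stand-in for a peripheral that answers an identification request."""

    def __init__(self, device_id):
        self.device_id = device_id

    def identify(self):
        return self.device_id


def enumerate_devices(devices, driver_table):
    """Poll every device and pick a driver from its response."""
    configured, unknown = {}, []
    for device in devices:
        device_id = device.identify()          # the device's response
        driver = driver_table.get(device_id)
        if driver is None:
            unknown.append(device_id)          # no suitable driver is known
        else:
            configured[device_id] = driver
    return configured, unknown


configured, unknown = enumerate_devices(
    [Device("PRN-0001"), Device("CAM-0009")], DRIVER_TABLE
)
```

In this run the printer is matched to its driver automatically, while the unrecognized device CAM-0009 ends up in the unknown list, the case in which the user would still have to supply a driver.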

Service systems are software that changes and supplements the user and program interfaces of the OS. Service systems are divided into operating environments, shells, and utilities.

Operating environment - a system that changes and supplements both the user interface and the program interface. The operating environment creates for the user and for application programs the illusion of working in a full-fledged OS. The appearance of an operating environment usually means that the operating system in use does not fully meet practical requirements.

Fig. 3. The role of the operating environment

Data protection is a very large problem. Within the OS, protecting information mainly means ensuring its integrity and protecting it against unauthorized access. Integrity is largely the responsibility of the file system, while protection against unauthorized access is the responsibility of the kernel. The usual mechanism for such protection is the use of passwords and privilege levels. For each user, the limits of access to files and the priority of that user's programs are defined. The system administrator has the highest priority.
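The combination of passwords and privilege levels might look like the sketch below. The User class, the numeric levels and the function names are assumptions made for illustration; storing a salted PBKDF2 hash instead of the password itself is a common practice, not something the text prescribes.

```python
import hashlib
import hmac
import os


def hash_password(password, salt):
    """Derive a hash so that the password itself is never stored."""
    return hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, 100_000)


class User:
    def __init__(self, name, password, level):
        self.name = name
        self.salt = os.urandom(16)
        self.password_hash = hash_password(password, self.salt)
        self.level = level               # hypothetical privilege level


def authenticate(user, password):
    """Compare hashes in constant time."""
    return hmac.compare_digest(user.password_hash,
                               hash_password(password, user.salt))


def can_access(user, required_level):
    """A file is accessible if the user's level is high enough."""
    return user.level >= required_level


ADMIN_LEVEL = 10                          # the administrator has the highest level
admin = User("admin", "s3cret", ADMIN_LEVEL)
guest = User("guest", "guest", 1)
```

With these definitions, authenticate(guest, "guest") succeeds, but can_access(guest, 5) is false, so a correct password alone does not open a file that requires a higher privilege level.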

Network facilities and distributed systems. An integral part of modern operating systems is a set of tools for communicating over a computer network with applications running on other computers. To do this, the OS mainly solves two problems: providing access to files on remote computers and running programs on a remote computer.

The first task is most naturally solved by a so-called network file system, which lets the user work with remote files as if they were on the user's own disk.

The second task is solved using the remote procedure call mechanism, which is implemented by means of the kernel and also hides from the user the difference between local and remote programs.

The availability of tools for managing the resources of remote computers is the basis for creating distributed computing systems. A distributed computing system is a collection of several connected computers that work independently but perform a common task. Such a system can be regarded as a multiprocessor.

Shell - a system that changes the user interface. The shell gives the user an interface different from that of the operating system itself. Its task is to simplify some frequently used operations with the operating system. However, the shell does not replace the OS, so a professional user must also learn the command interface of the OS itself.

Utilities have a narrowly specialized purpose, and each performs its own function. Utilities run in the environment of the corresponding shell and provide users with additional services (mostly disk and file maintenance). Most often these are:

Disk maintenance (formatting, ensuring the safety of information, the possibility of its recovery in case of failure, etc.);

Maintenance of files and directories (search, viewing, etc.);

Creating and updating archives;

Providing information about computer resources, disk space occupancy, distribution of RAM between programs;

Printing text and other files in various modes and formats;

Protection against computer viruses.

Fig. 4. The role of the OS shell

Tool systems are software products used to develop information resources and software. Tool systems include programming systems, rapid application development systems, and database management systems (DBMS).

A programming system is designed for developing application programs in some programming language. It includes:

compiler and/or interpreter;

link editor (linker);

development environment;

library of standard routines;

documentation.

A compiler is a program that converts a source program into an object module, that is, a file consisting of machine instructions. An interpreter is a program that directly executes the instructions of a programming language.
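The difference between the two approaches can be shown on a toy language of arithmetic expressions. This is only an illustration: the "compiler" below emits instructions for an invented stack machine rather than real machine instructions, and Python's own ast module is borrowed to parse the source text.

```python
import ast
import operator

# Supported operators: instruction name and the function that performs it.
OPS = {
    ast.Add: ("ADD", operator.add),
    ast.Sub: ("SUB", operator.sub),
    ast.Mult: ("MUL", operator.mul),
}


def interpret(node):
    """Interpreter: walk the syntax tree and compute the result directly."""
    if isinstance(node, ast.Expression):
        return interpret(node.body)
    if isinstance(node, ast.Constant):
        return node.value
    if isinstance(node, ast.BinOp):
        function = OPS[type(node.op)][1]
        return function(interpret(node.left), interpret(node.right))
    raise ValueError("unsupported construct")


def compile_expr(node, code=None):
    """Compiler: translate the tree into a list of instructions, run later."""
    if code is None:
        code = []
    if isinstance(node, ast.Expression):
        compile_expr(node.body, code)
    elif isinstance(node, ast.Constant):
        code.append(("PUSH", node.value))
    elif isinstance(node, ast.BinOp):
        compile_expr(node.left, code)
        compile_expr(node.right, code)
        code.append((OPS[type(node.op)][0], None))
    else:
        raise ValueError("unsupported construct")
    return code


def run(code):
    """Execute the compiled instructions on a simple stack machine."""
    functions = dict(OPS.values())       # instruction name -> function
    stack = []
    for instruction, argument in code:
        if instruction == "PUSH":
            stack.append(argument)
        else:
            right = stack.pop()
            left = stack.pop()
            stack.append(functions[instruction](left, right))
    return stack.pop()


tree = ast.parse("2 + 3 * 4", mode="eval")
direct_result = interpret(tree)          # executed immediately
object_code = compile_expr(tree)         # translated first ...
compiled_result = run(object_code)       # ... and executed afterwards
```

Both paths give the same value; the compiled form can be stored and run many times without parsing the source again, while the interpreter needs the source program each time.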

A linker is a program that assembles multiple object files into a single executable file.

Integrated development environment - a set of programs that includes a text editor, a tool for managing the files of a software project, and a program debugger, and that automates the entire program development process.

Library of standard routines - a set of object modules organized in special files provided by the manufacturer of the programming system. In such libraries, there are usually text input-output routines, standard mathematical functions, file management programs. Object modules from the standard library are usually automatically linked by the linker to custom object modules.

Fig. 5. Stages of program development

Rapid application development (RAD) systems are an evolution of conventional programming systems. In RAD systems, the programming process itself is largely automated. The programmer does not write the program text by hand but uses visual manipulations to tell the system which tasks the program should perform. The RAD system then generates the program text itself.

A database management system is a universal software tool designed to organize the storage and processing of logically interrelated data and to provide quick access to them. One of the important capabilities of a computer is the storage and processing of large amounts of information, and modern computers accumulate not only text and graphic documents (pictures, drawings, photographs, maps) but also Web pages of the global Internet, sound files, and video files. Creating databases integrates the data and makes it possible to manage them centrally. Information is collected in databases and organized according to certain rules, which lay down general principles for describing, storing, and manipulating the data so that various users and programs can work with them.

DBMSs enable programmers and systems analysts to rapidly develop better data-processing software, and enable end users to manage the data directly. A DBMS should provide the user with data search, modification, and storage; online access; protection of data integrity against hardware failures and software errors; differentiation of rights and protection against unauthorized access; and support for several users working with the data together. There are universal database management systems that are used for a variety of applications. To be configured for specific applications, universal DBMSs must have the appropriate tools. The process of configuring a DBMS for a specific application is called system generation. Universal DBMSs include, for example, Microsoft Access, Microsoft Visual FoxPro, Borland dBase, Borland Paradox, and Oracle.
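Storage, modification, search and integrity protection can be tried out in a few lines. The sketch uses SQLite through Python's standard sqlite3 module, which is not one of the systems named above and is chosen here only because it needs no installation; the employees table and its contents are invented.

```python
import sqlite3

conn = sqlite3.connect(":memory:")       # temporary database in RAM

# Storage: describe the data once, according to fixed rules.
conn.execute(
    "CREATE TABLE employees ("
    "id INTEGER PRIMARY KEY, name TEXT NOT NULL, dept TEXT)"
)
conn.executemany(
    "INSERT INTO employees (name, dept) VALUES (?, ?)",
    [("Ivanova", "Sales"), ("Petrov", "IT"), ("Sidorov", "IT")],
)

# Modification: move one employee to another department.
conn.execute("UPDATE employees SET dept = ? WHERE name = ?",
             ("Support", "Sidorov"))

# Search: find everyone who is still in the IT department.
it_staff = [row[0] for row in conn.execute(
    "SELECT name FROM employees WHERE dept = ? ORDER BY name", ("IT",))]

# Integrity: a record that breaks the declared rules is rejected.
try:
    conn.execute("INSERT INTO employees (name, dept) VALUES (NULL, 'IT')")
    rejected = False
except sqlite3.IntegrityError:
    rejected = True

total = conn.execute("SELECT COUNT(*) FROM employees").fetchone()[0]
conn.close()
```

The application only states what it wants (which rows to find or change), while how the data are laid out and checked is left to the DBMS.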

Telecommunication technologies of data processing. An important feature of many operating systems is their ability to interact with one another over a network, which allows computers to communicate both within local area networks (LANs) and over the global Internet.

Modern operating systems, both newly created ones and updated versions of existing ones, support a full set of protocols for working in local and global computer networks. The global computer industry is developing very rapidly. System performance is increasing, and with it the capacity to process large amounts of data. Operating systems of the MS-DOS class can no longer cope with such a flow of data and cannot make full use of the resources of modern computers. They are therefore no longer widely used, and users are moving to more advanced operating systems such as Unix, Windows, Linux, or Mac OS.

If we define the OS from the user's point of view, the operating system can be called the main program, which is loaded first when the computer is turned on and which makes communication between the computer and the person possible. The task of the OS is to make working with the computer convenient for the human user. The OS controls all devices connected to the computer and gives other programs access to them. In addition, the OS acts as a kind of intermediary between the computer hardware and other programs: it receives the command signals that other programs send and "translates" them into a language the machine understands.

It turns out that each OS consists of at least three mandatory parts:

The first is the kernel, a command interpreter, a "translator" from the language of programs into the language of the hardware, that is, machine code.

The second is a set of specialized programs for controlling the various devices that make up the computer. Such programs are called drivers, that is, "managers". This part also includes the so-called system libraries, used both by the operating system itself and by the programs included in it.

And finally, the third part is a convenient shell with which the user communicates: the interface. It is a kind of attractive wrapper around a kernel that the user finds dull and uninteresting. The comparison with packaging is also apt because packaging is what people pay attention to when choosing an operating system; the kernel, the main part of the OS, is remembered only later. That is why an OS as unstable and unreliable at the kernel level as Windows 98/ME enjoyed such stunning success: thanks to an attractive interface wrapper.

Today, a graphical interface is an invariable attribute of any operating system, be it Windows XP, Windows NT, or Mac OS (the operating system for Apple Macintosh computers). The operating systems of the first generations had a text interface rather than a graphical one: commands were given to the computer not by clicking an icon but by typing them on the keyboard. For example, to start the Microsoft Word text editor today, it is enough to click the program's icon on the Windows Desktop. Earlier, when working in an OS of the previous generation, DOS, it was necessary to enter a command like

C:\WORD\word.exe mybook.doc.

OS are classified according to:

The number of concurrent users: single-user (designed to serve one user) and multi-user (designed to work with a group of users simultaneously at different terminals). An example of the first is Windows 95/98, and of the second, Windows NT. A single-user OS is sufficient for home use, while the local network of an office or enterprise needs a multi-user OS;

The number of processes simultaneously running under the control of the system: single-tasking and multitasking. Single-tasking operating systems (DOS) can perform no more than one task at a time, while multitasking OSs support the parallel execution of several programs within one computing system, dividing the computer's power among them. For example, the user can type text into a Word document while listening to music from a CD, while the computer copies a file from the Internet at the same time. In principle, the number of tasks that the OS can perform is limited only by processor power and RAM capacity;

The number of supported processors: uniprocessor and multiprocessor (the latter support distributing the resources of several processors to solve a particular task);

Bitness of the operating system code:

- 16-bit (DOS, Windows 3.1),

- 32-bit (Windows 95 - Windows XP),

- 64-bit (Windows Vista);

The bitness of the OS cannot exceed the bitness of the processor;

Interface type: command (text) and object-oriented (as a rule, graphical);

Type of user access to the computer:

- batch processing - the programs to be executed are formed into a batch of jobs, which is entered into the computer and executed in order of arrival, possibly taking priority into account;

- time-sharing - several users at different terminals are given simultaneous interactive (dialog) access to the computer, and the machine's resources are allocated to them in turn, which the OS coordinates according to the specified service discipline;

- real time - a certain guaranteed response time of the machine to the user's request is provided, with control of events, processes, or objects external to the computer. Real-time operating systems (RTOS) are mainly used to automate areas such as oil and gas production and transportation, process control in metallurgy and mechanical engineering, chemical process control, water supply, energy, and robot control. Among them, the QNX RTOS stands out for its complete set of tools familiar to users of the UNIX family of operating systems.
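The difference between batch processing and time-sharing can be sketched with two tiny schedulers. The function names, job names and the time quantum are invented, and round-robin is used here as one possible service discipline, not necessarily the one a particular OS applies.

```python
import heapq
from collections import deque


def run_batch(jobs):
    """Batch mode: jobs are (priority, name); a lower number runs earlier,
    and jobs with equal priority run in the order they were submitted."""
    heap = [(priority, order, name)
            for order, (priority, name) in enumerate(jobs)]
    heapq.heapify(heap)
    finished = []
    while heap:
        _, _, name = heapq.heappop(heap)
        finished.append(name)            # each job runs to completion
    return finished


def run_time_sharing(jobs, quantum):
    """Time-sharing: jobs are (name, time_needed); each job gets the
    processor for at most one quantum, then goes to the back of the queue."""
    ready = deque(jobs)
    timeline = []
    while ready:
        name, remaining = ready.popleft()
        used = min(quantum, remaining)
        timeline.append((name, used))
        if remaining > used:
            ready.append((name, remaining - used))
    return timeline


batch_order = run_batch([(2, "report"), (1, "payroll"), (2, "backup")])
slices = run_time_sharing([("A", 3), ("B", 1)], quantum=2)
```

In batch mode a job holds the machine until it finishes, whereas in time-sharing the short job B gets its turn before the long job A is complete, which is what makes interactive work at several terminals possible.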